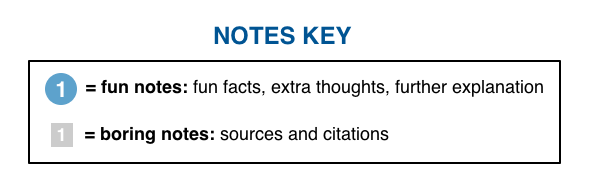

Note: If you want to print this post or read it offline, the PDF is probably the way to go. You can buy it here.

And here’s a G-rated version of the post, appropriate for all ages.

_______________________

Last month, I got a phone call.

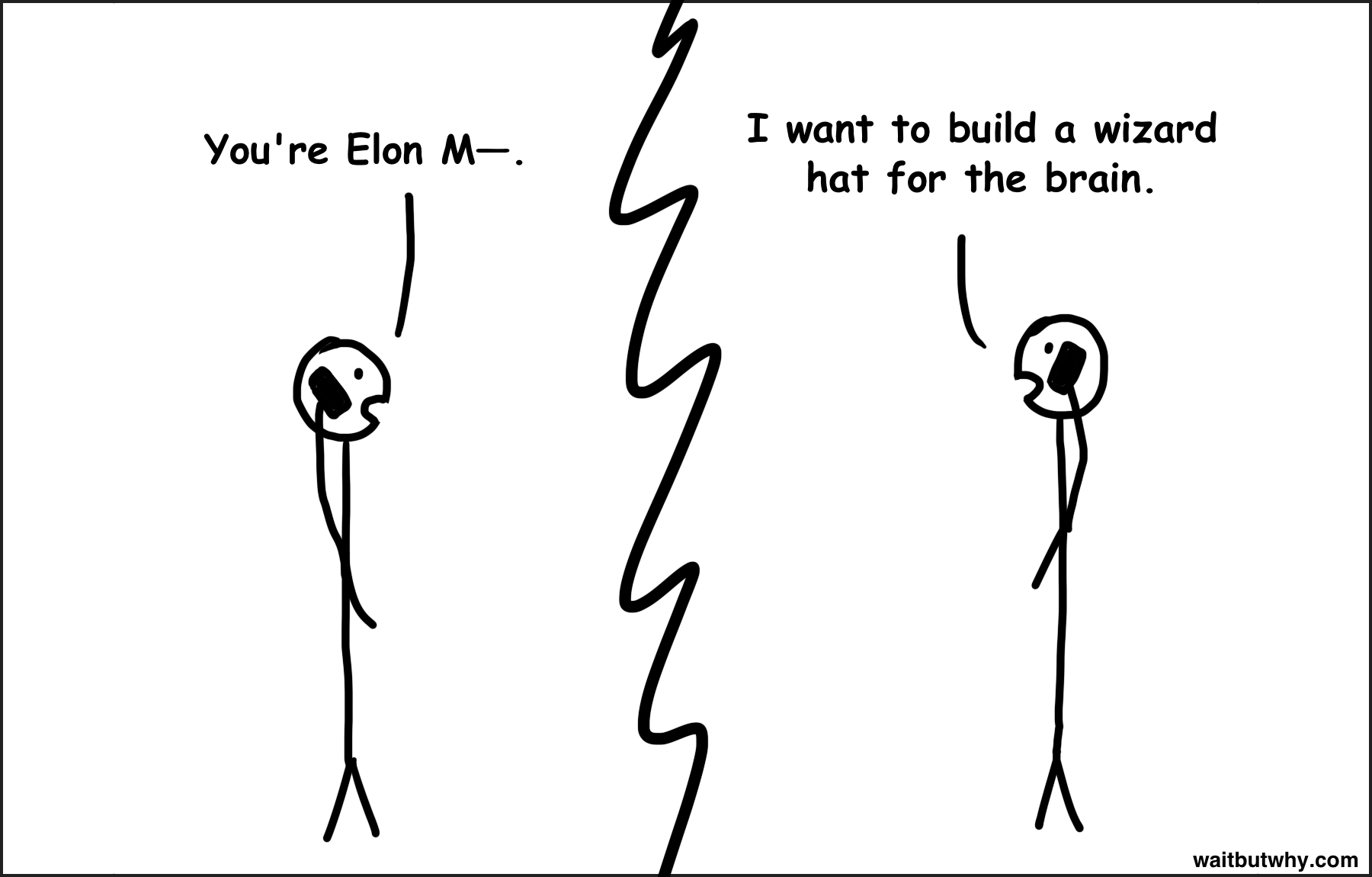

Okay maybe that’s not exactly how it happened, and maybe those weren’t his exact words. But after learning about the new company Elon Musk was starting, I’ve come to realize that that’s exactly what he’s trying to do.

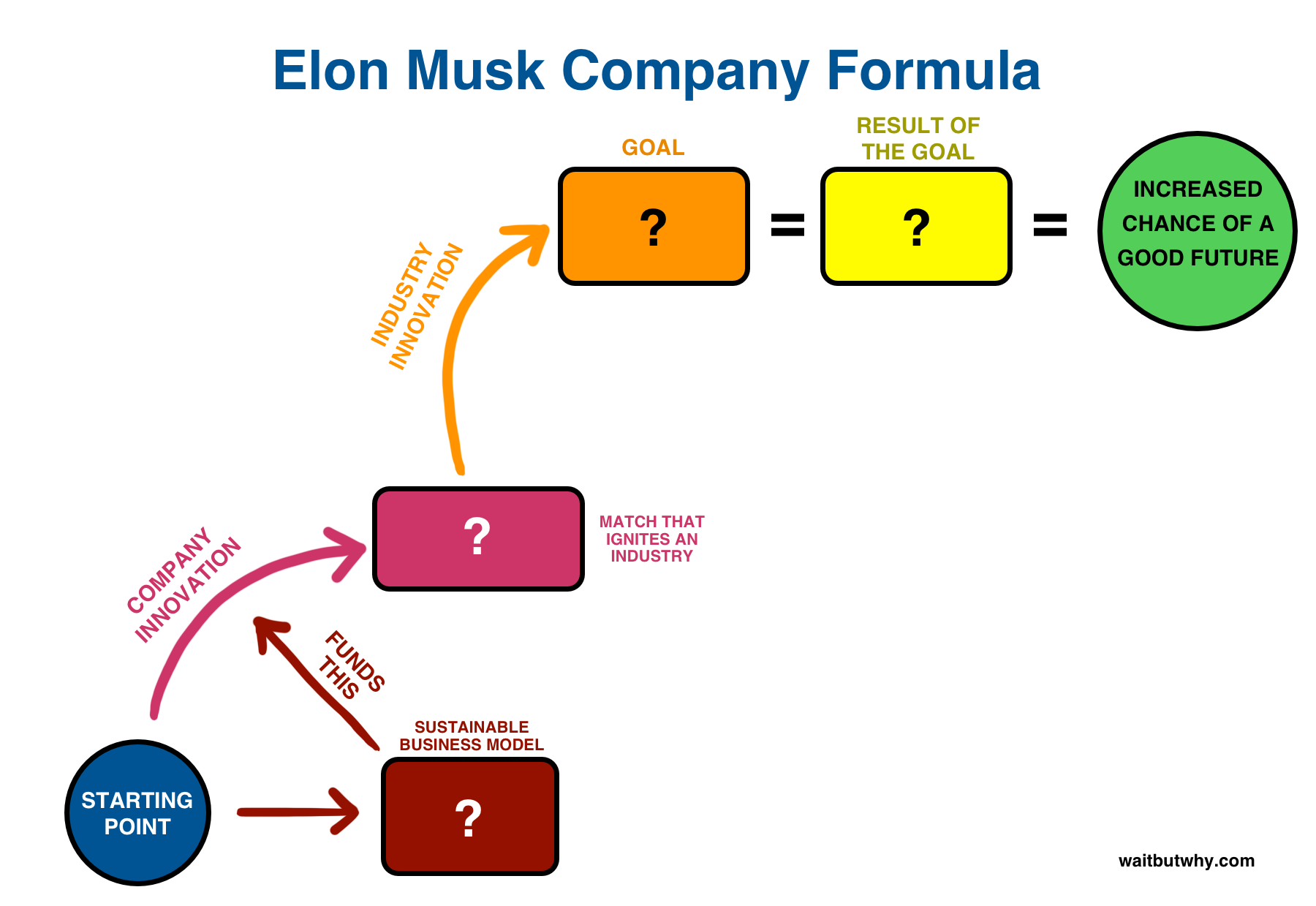

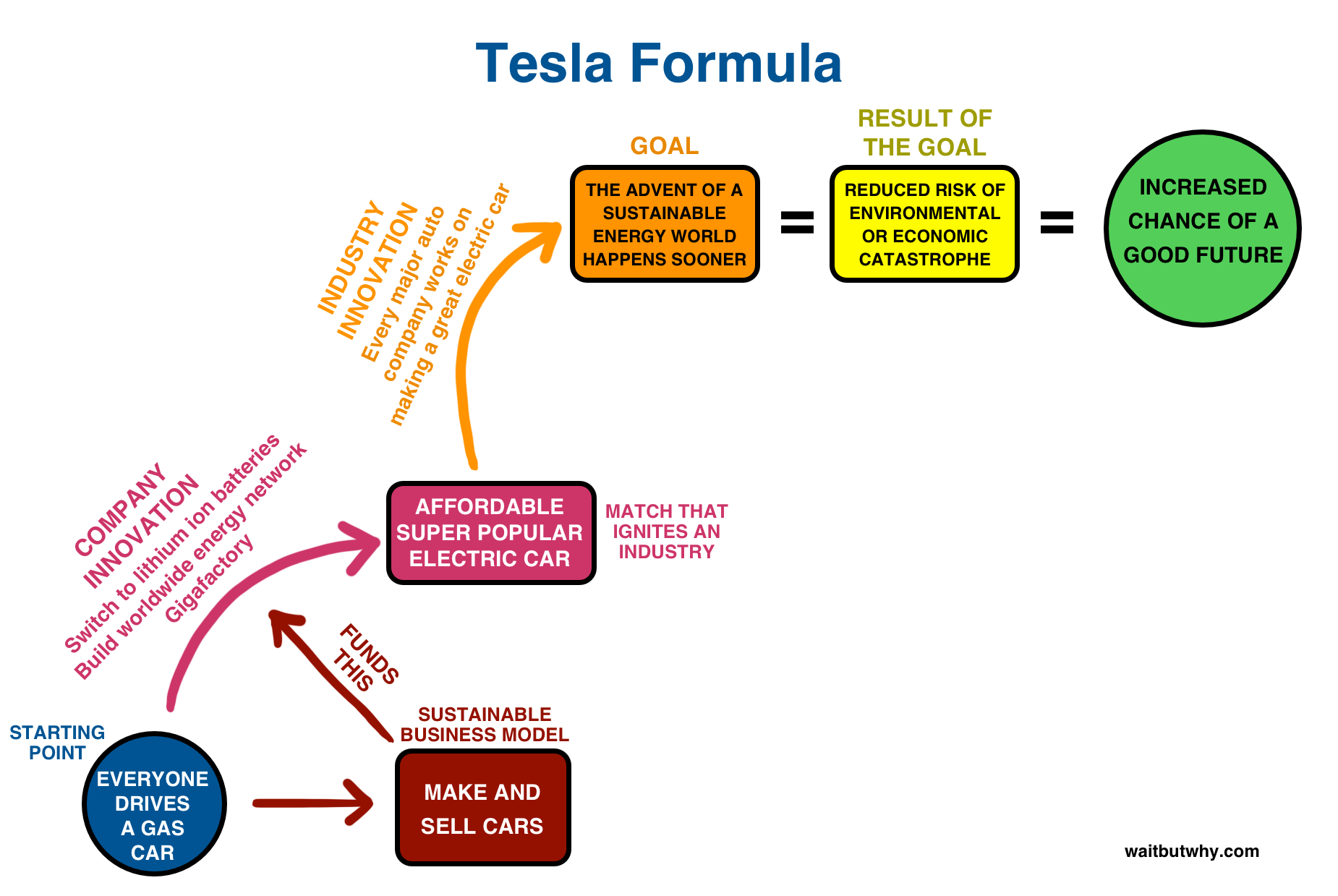

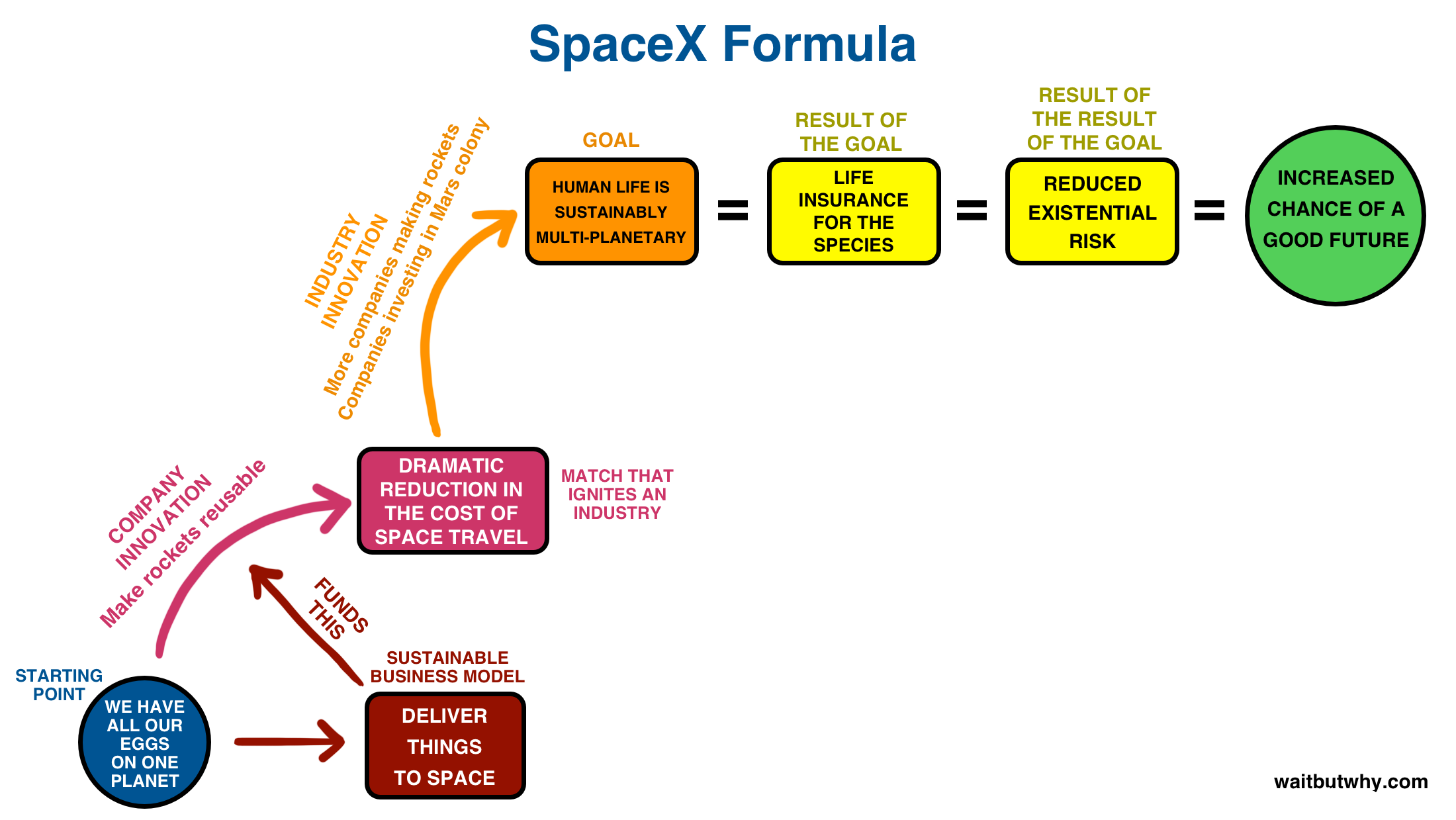

When I wrote about Tesla and SpaceX, I learned that you can only fully wrap your head around certain companies by zooming both way, way in and way, way out. In, on the technical challenges facing the engineers, out on the existential challenges facing our species. In on a snapshot of the world right now, out on the big story of how we got to this moment and what our far future could look like.

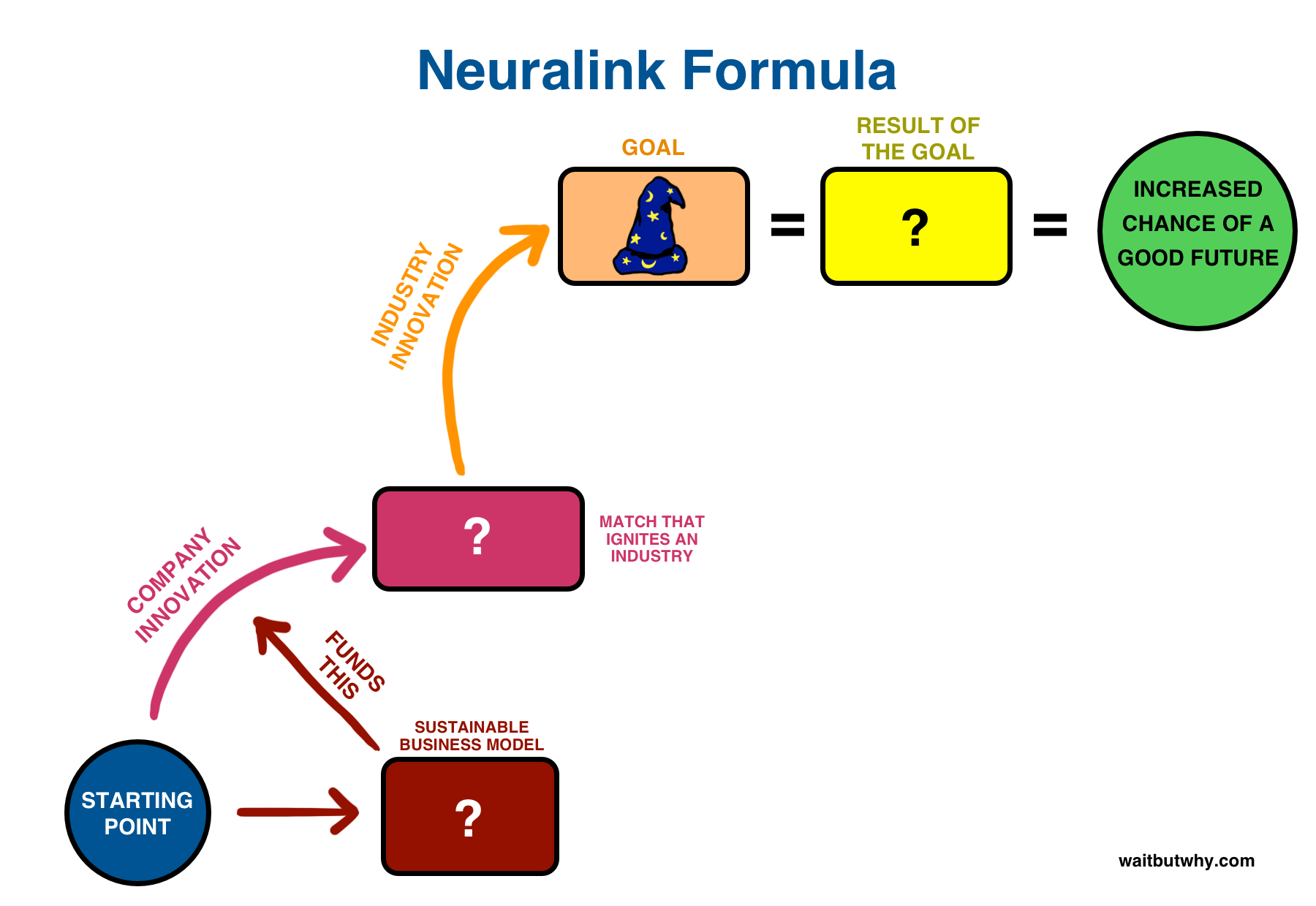

Not only is Elon’s new venture—Neuralink—the same type of deal, but six weeks after first learning about the company, I’m convinced that it somehow manages to eclipse Tesla and SpaceX in both the boldness of its engineering undertaking and the grandeur of its mission. The other two companies aim to redefine what future humans will do—Neuralink wants to redefine what future humans will be.

The mind-bending bigness of Neuralink’s mission, combined with the labyrinth of impossible complexity that is the human brain, made this the hardest set of concepts yet to fully wrap my head around—but it also made it the most exhilarating when, with enough time spent zoomed on both ends, it all finally clicked. I feel like I took a time machine to the future, and I’m here to tell you that it’s even weirder than we expect.

But before I can bring you in the time machine to show you what I found, we need to get in our zoom machine—because as I learned the hard way, Elon’s wizard hat plans cannot be properly understood until your head’s in the right place.

So wipe your brain clean of what it thinks it knows about itself and its future, put on soft clothes, and let’s jump into the vortex.

___________

Contents

Part 3: Brain-Machine Interfaces

Part 1: The Human Colossus

600 million years ago, no one really did anything, ever.

The problem is that no one had any nerves. Without nerves, you can’t move, or think, or process information of any kind. So you just had to kind of exist and wait there until you died.

But then came the jellyfish.

The jellyfish was the first animal to figure out that nerves were an obvious thing to make sure you had, and it had the world’s first nervous system—a nerve net.

The jellyfish’s nerve net allowed it to collect important information from the world around it—like where there were objects, predators, or food—and pass that information along, through a big game of telephone, to all parts of its body. Being able to receive and process information meant that the jellyfish could actually react to changes in its environment in order to increase the odds of life going well, rather than just floating aimlessly and hoping for the best.

A little later, a new animal came around who had an even cooler idea.

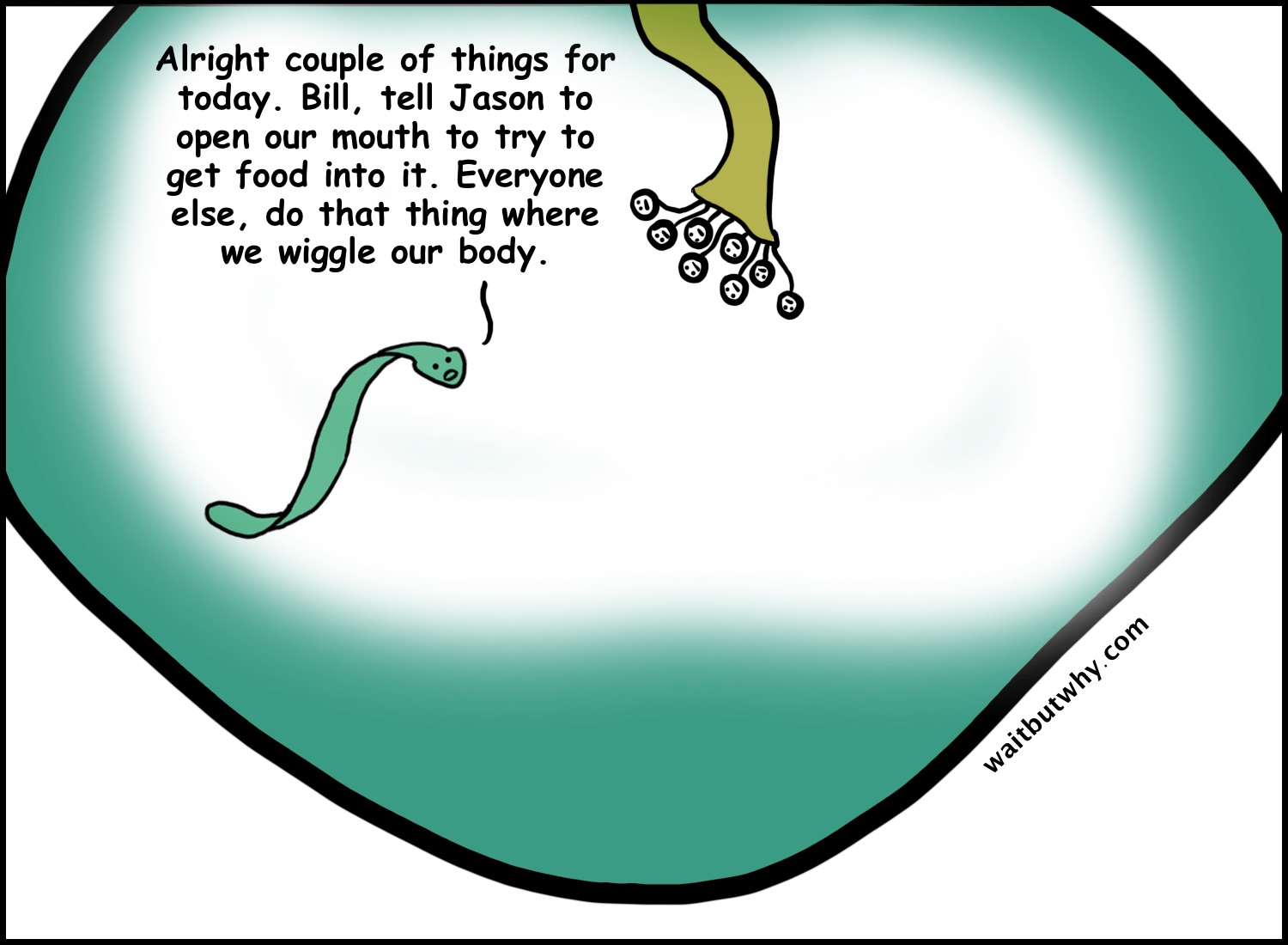

The flatworm figured out that you could get a lot more done if there was someone in the nervous system who was in charge of everything—a nervous system boss. The boss lived in the flatworm’s head and had a rule that all nerves in the body had to report any new information directly to him. So instead of arranging themselves in a net shape, the flatworm’s nervous system all revolved around a central highway of messenger nerves that would pass messages back and forth between the boss and everyone else:

The flatworm’s boss-highway system was the world’s first central nervous system, and the boss in the flatworm’s head was the world’s first brain.

The idea of a nervous system boss quickly caught on with others, and soon, there were thousands of species on Earth with brains.

As time passed and Earth’s animals started inventing intricate new body systems, the bosses got busier.

A little while later came the arrival of mammals. For the Millennials of the Animal Kingdom, life was complicated. Yes, their hearts needed to beat and their lungs needed to breathe, but mammals were about a lot more than survival functions—they were in touch with complex feelings like love, anger, and fear.

For the reptilian brain, which had only had to deal with reptiles and other simpler creatures so far, mammals were just…a lot. So a second boss developed in mammals to pair up with the reptilian brain and take care of all of these new needs—the world’s first limbic system.

Over the next 100 million years, the lives of mammals grew more and more complex, and one day, the two bosses noticed a new resident in the cockpit with them.

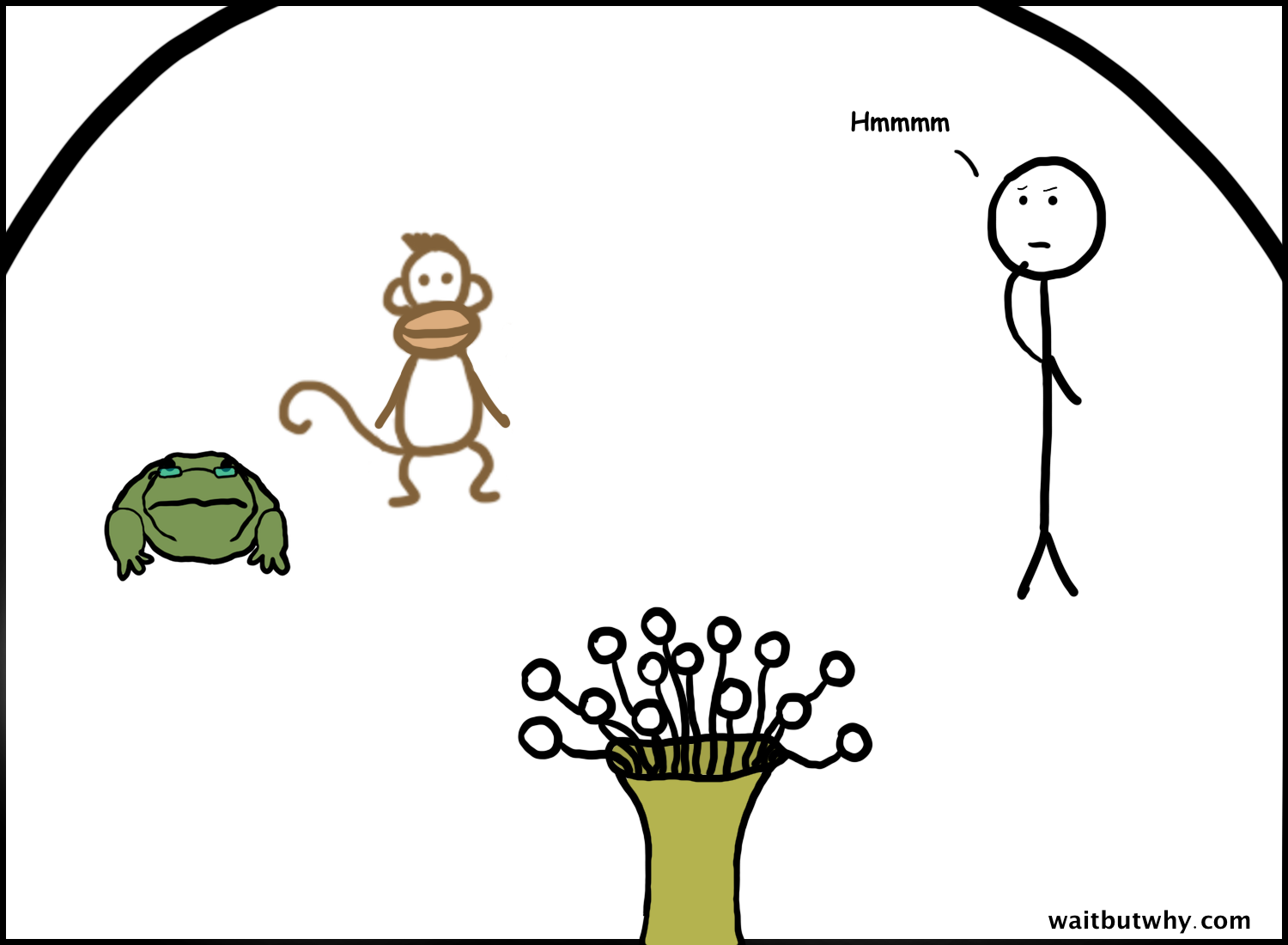

What appeared to be a random infant was actually the early version of the neocortex, and though he didn’t say much at first, as evolution gave rise to primates and then great apes and then early hominids, this new boss grew from a baby into a child and eventually into a teenager with his own idea of how things should be run.

The new boss’s ideas turned out to be really helpful, and he became the hominid’s go-to boss for things like tool-making, hunting strategy, and cooperation with other hominids.

Over the next few million years, the new boss grew older and wiser, and his ideas kept getting better. He figured out how to not be naked. He figured out how to control fire. He learned how to make a spear.

But his coolest trick was thinking. He turned each human’s head into a little world of its own, making humans the first animal that could think complex thoughts, reason through decisions, and make long-term plans.

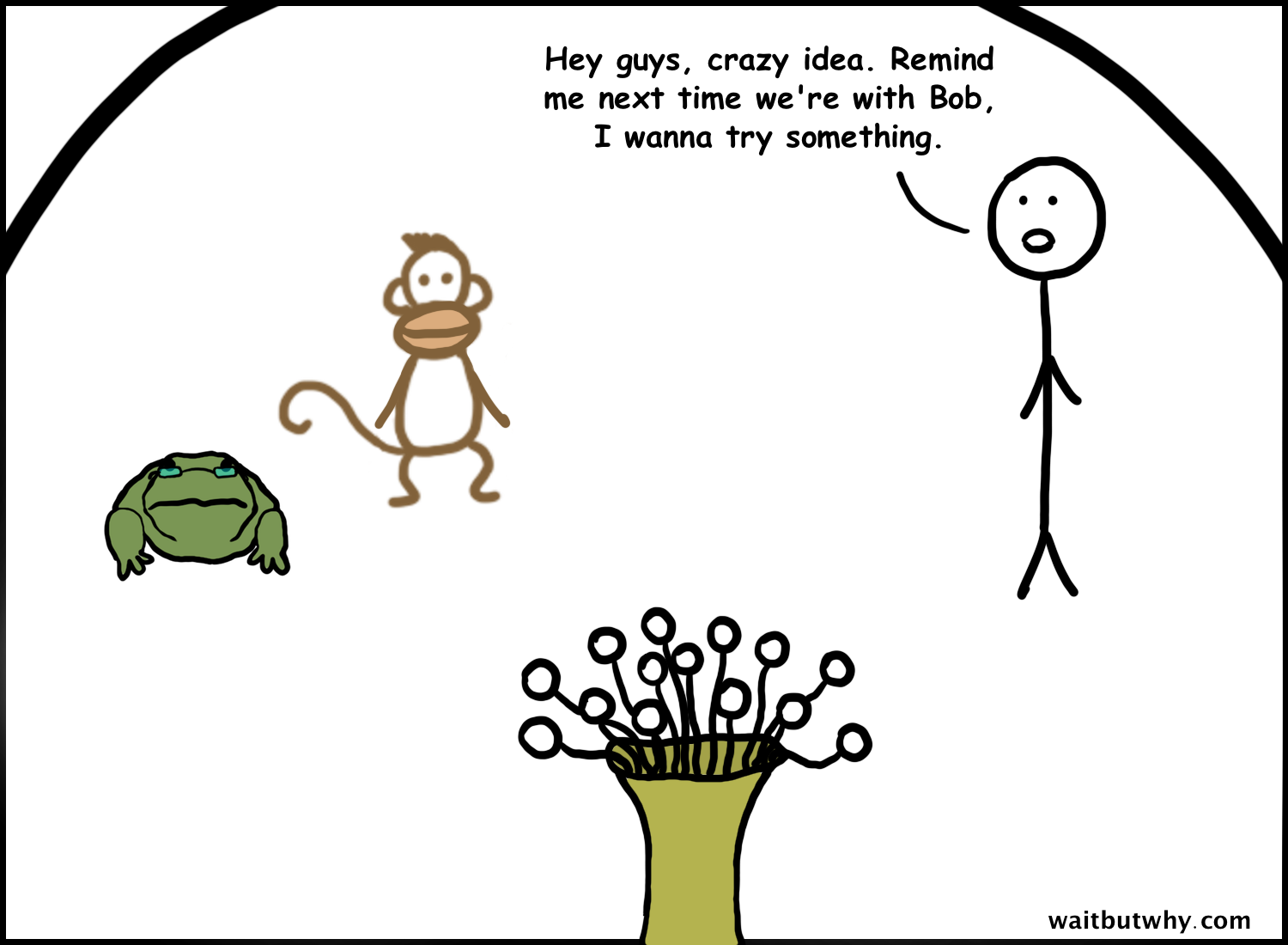

And then, maybe about 100,000 years ago, he came up with a breakthrough.

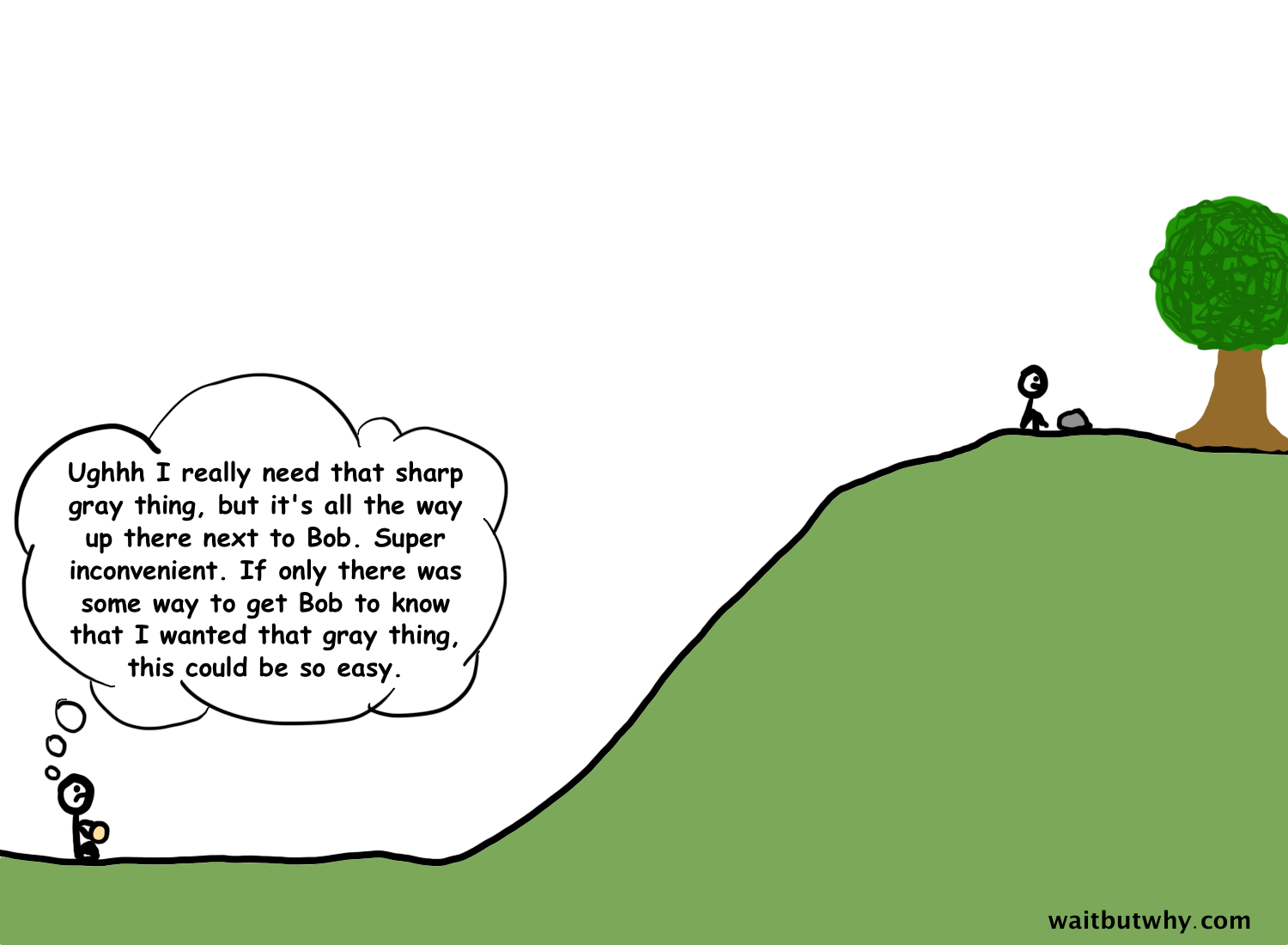

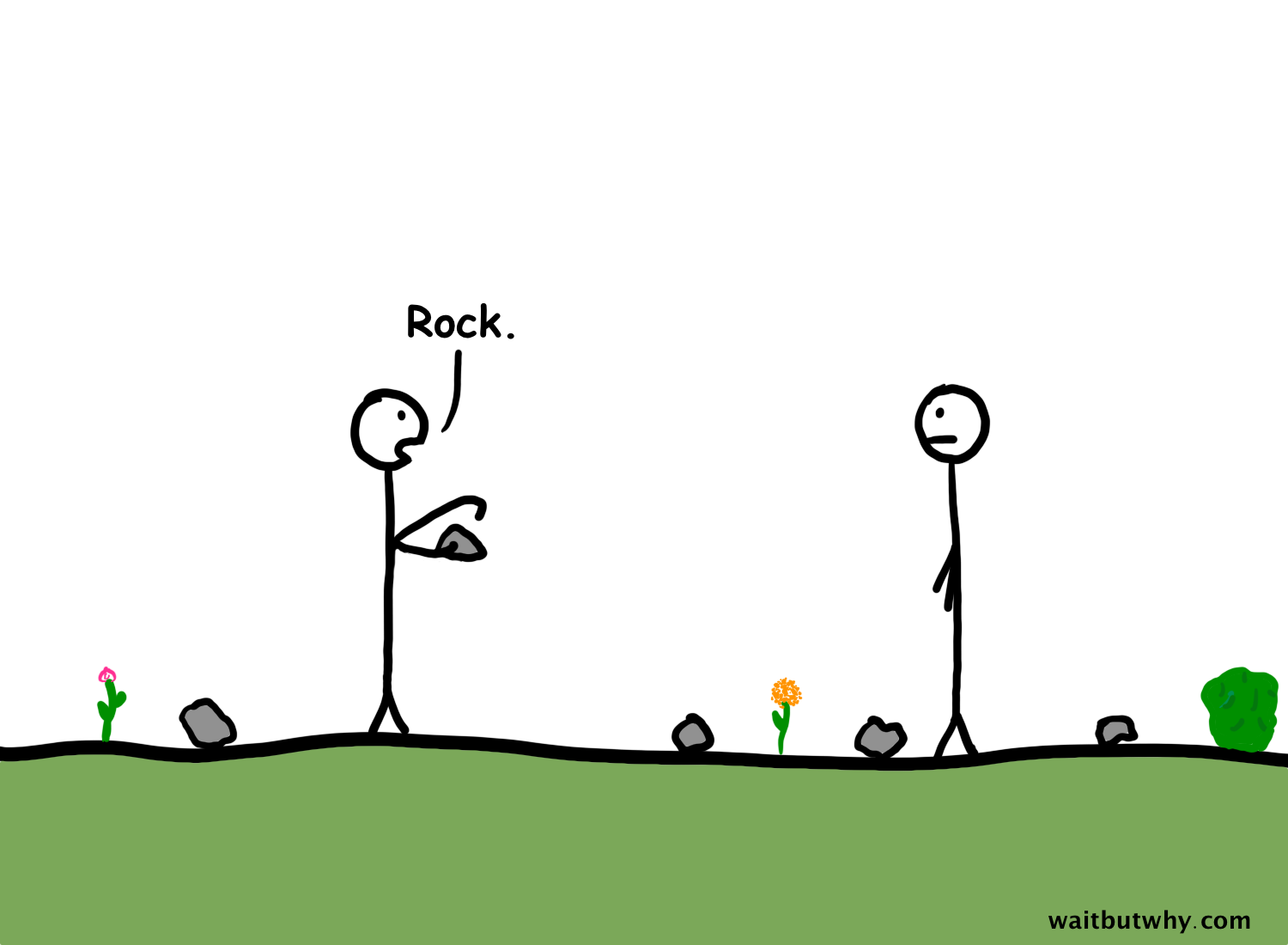

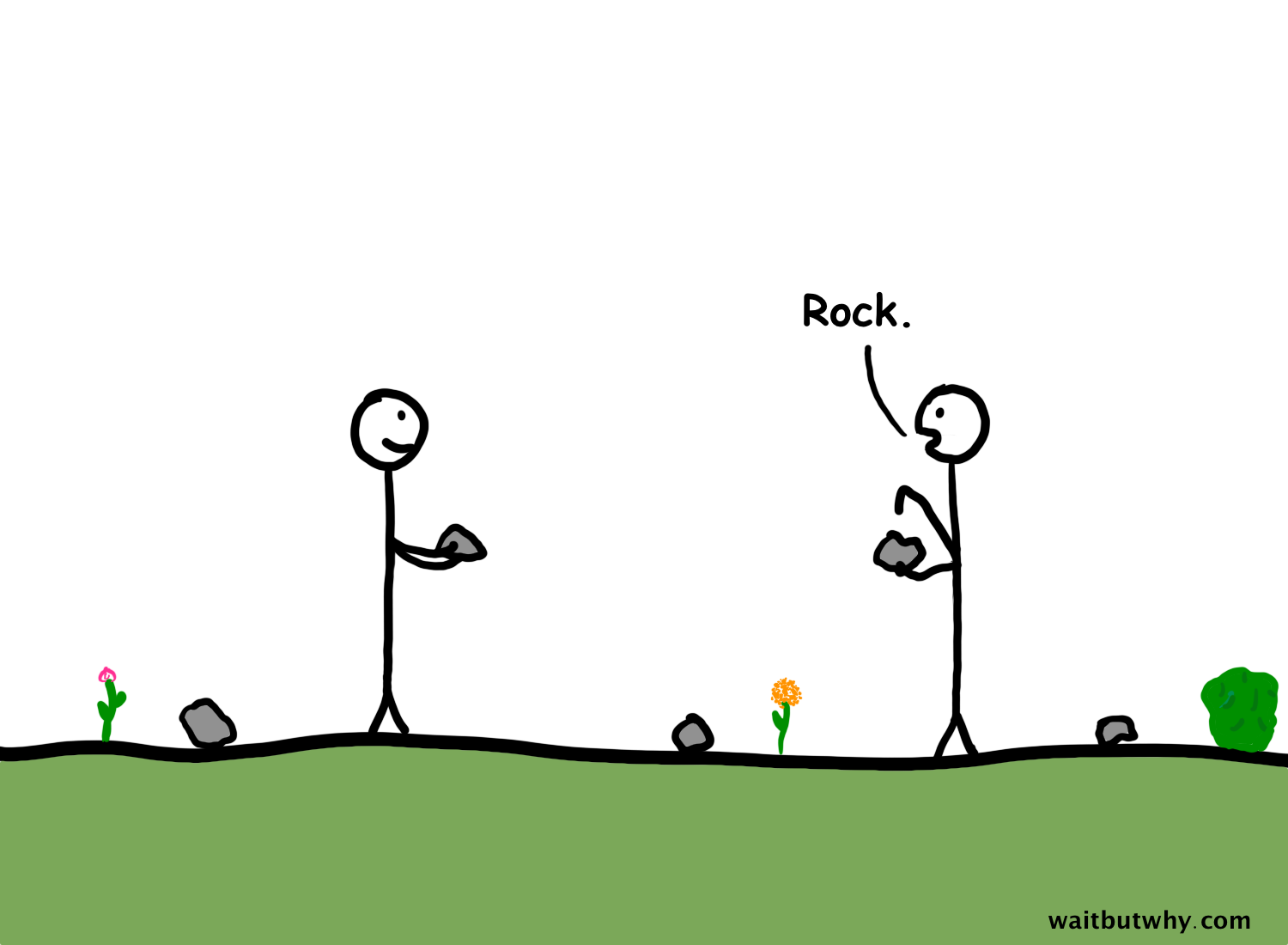

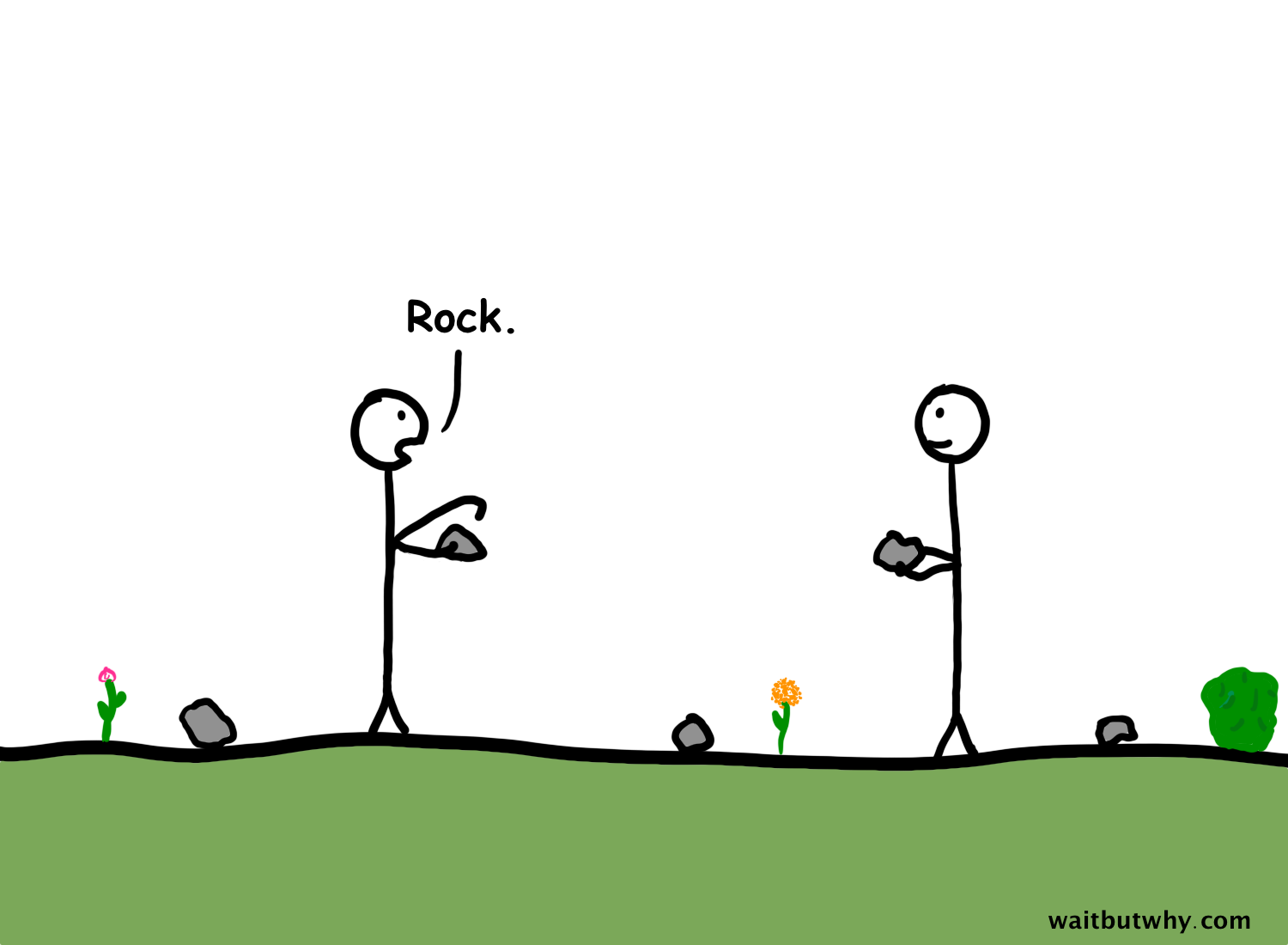

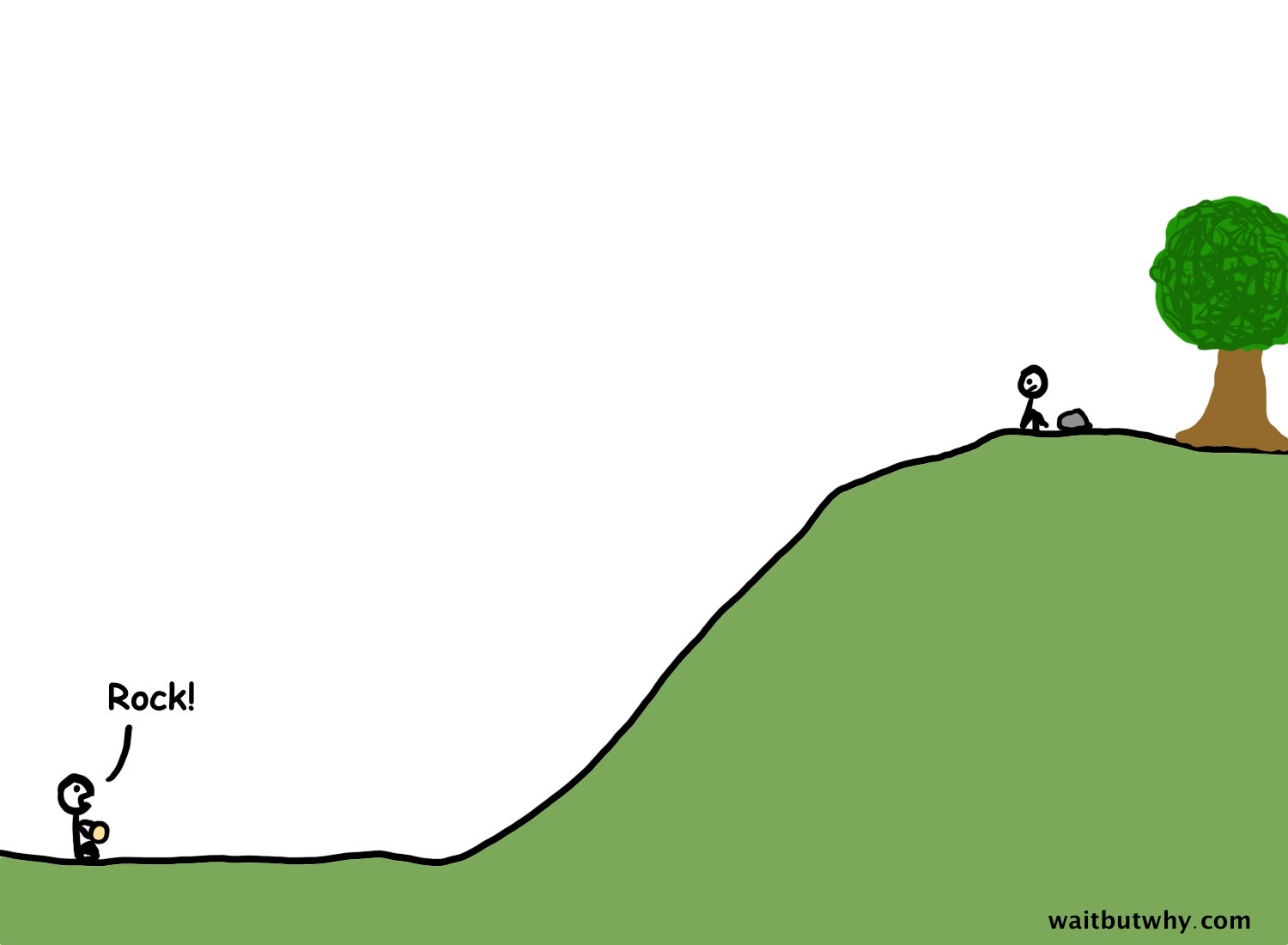

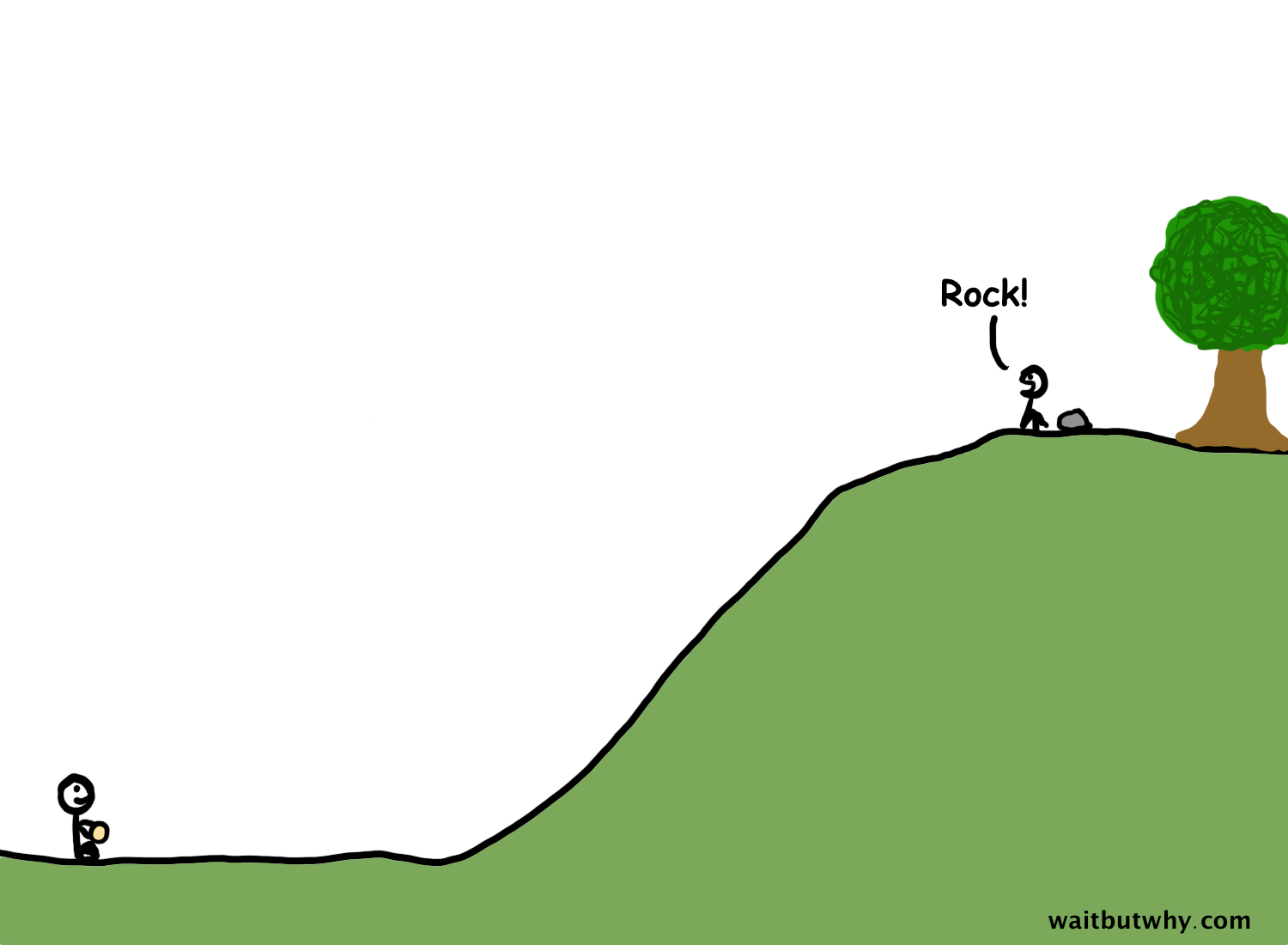

The human brain had advanced to the point where it could understand that even though the sound “rock” was not itself a rock, it could be used as a symbol of a rock—it was a sound that referred to a rock. The early human had invented language.

Soon there were words for all kinds of things, and by 50,000 BC, humans were speaking in full, complex language with each other.

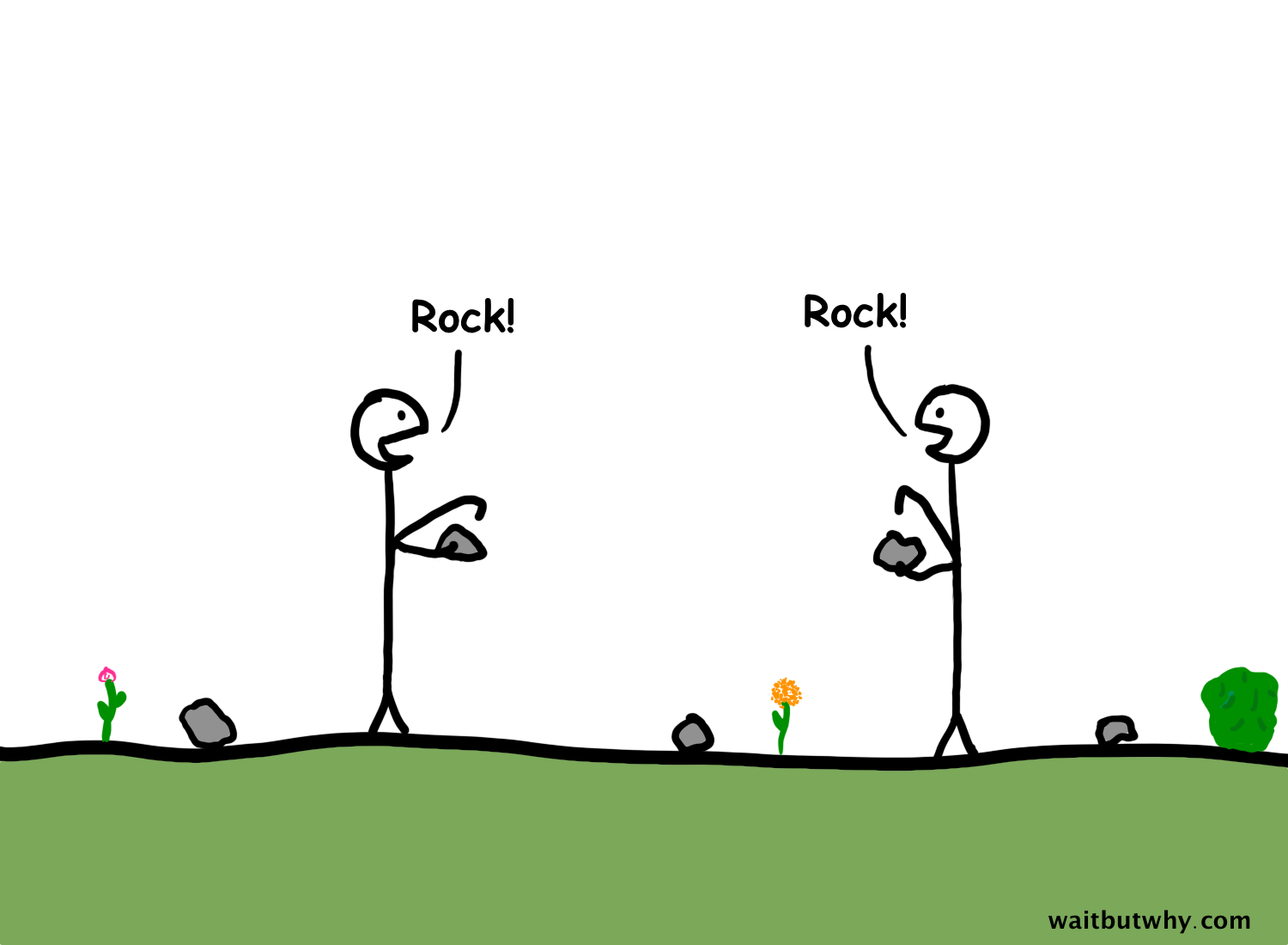

The neocortex had turned humans into magicians. Not only had he made the human head a wondrous internal ocean of complex thoughts, his latest breakthrough had found a way to translate those thoughts into a symbolic set of sounds and send them vibrating through the air into the heads of other humans, who could then decode the sounds and absorb the embedded idea into their own internal thought oceans. The human neocortex had been thinking about things for a long time—and he finally had someone to talk about it all with.

A neocortex party ensued. Neocortexes—fine—neocortices shared everything with each other—stories from their past, funny jokes they had thought of, opinions they had formed, plans for the future.

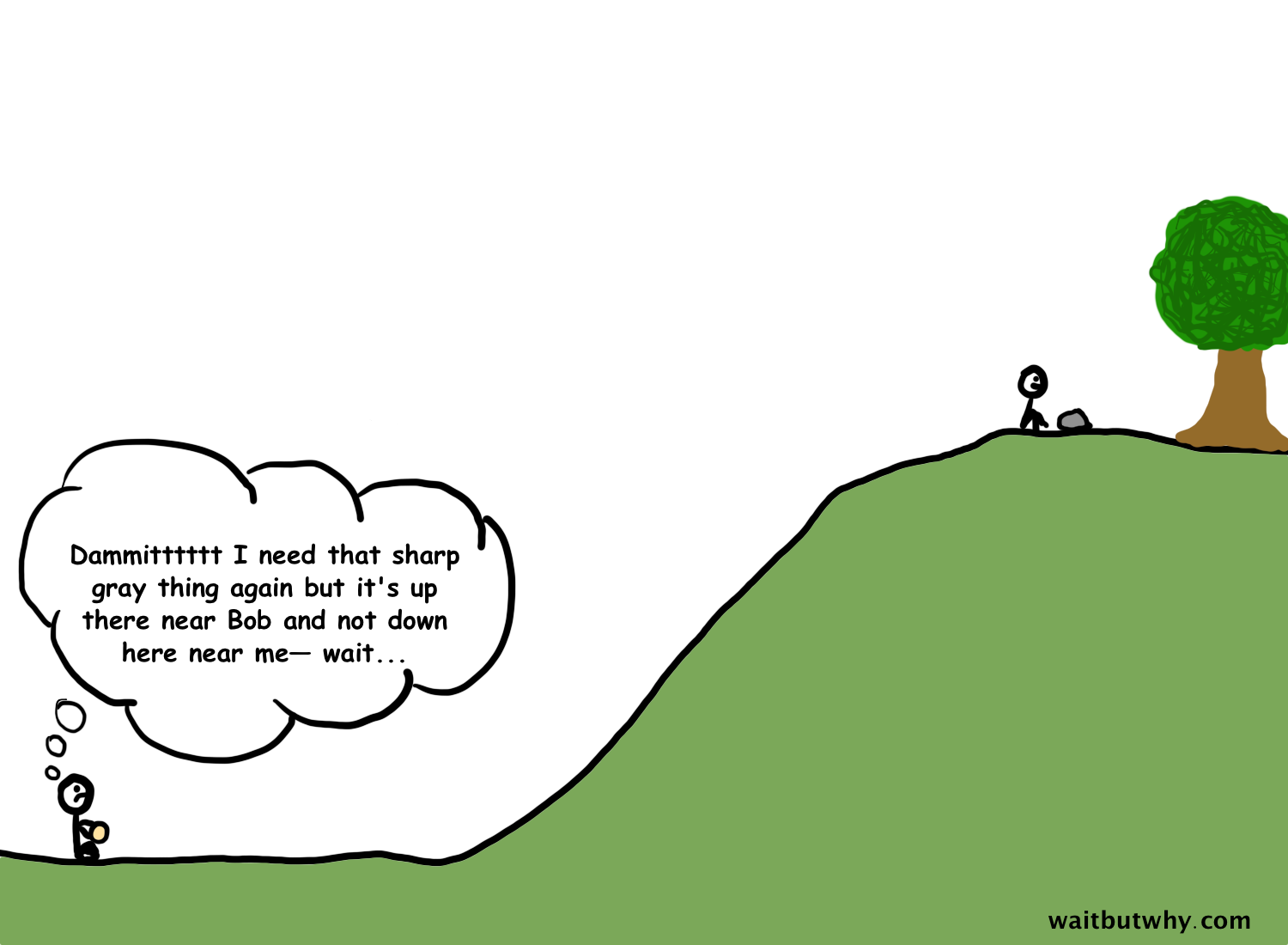

But most useful was sharing what they had learned. If one human learned through trial and error that a certain type of berry led to 48 hours of your life being run by diarrhea, they could use language to share the hard-earned lesson with the rest of their tribe, like photocopying the lesson and handing it to everyone else. Tribe members would then use language to pass along that lesson to their children, and their children would pass it to their own children. Rather than the same mistake being made again and again by many different people, one person’s “stay away from that berry” wisdom could travel through space and time to protect everyone else from repeating that bad experience.

The same thing would happen when one human figured out a new clever trick. One unusually-intelligent hunter particularly attuned to both star constellations and the annual migration patterns of wildebeest1 herds could share a system he devised that used the night sky to determine exactly how many days remained until the herd would return. Even though very few hunters would have been able to come up with that system on their own, through word-of-mouth, all future hunters in the tribe would now benefit from the ingenuity of one ancestor, with that one hunter’s crowning discovery serving as every future hunter’s starting point of knowledge.

And let’s say this knowledge advancement makes the hunting season more efficient, which gives tribe members more time to work on their weapons—which allows one extra-clever hunter a few generations later to discover a method for making lighter, denser spears that can be thrown more accurately. And just like that, every present and future hunter in the tribe hunts with a more effective spear.

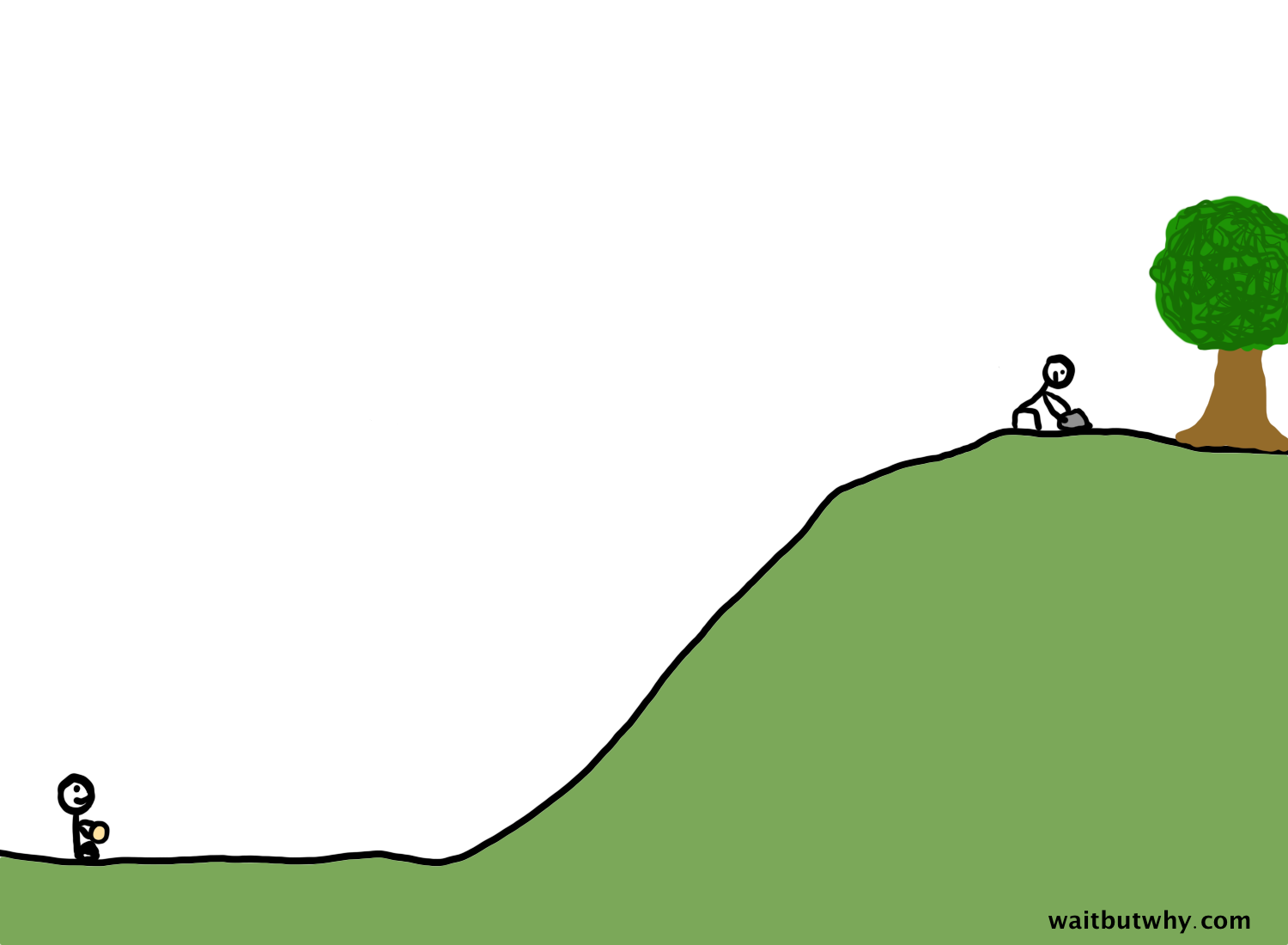

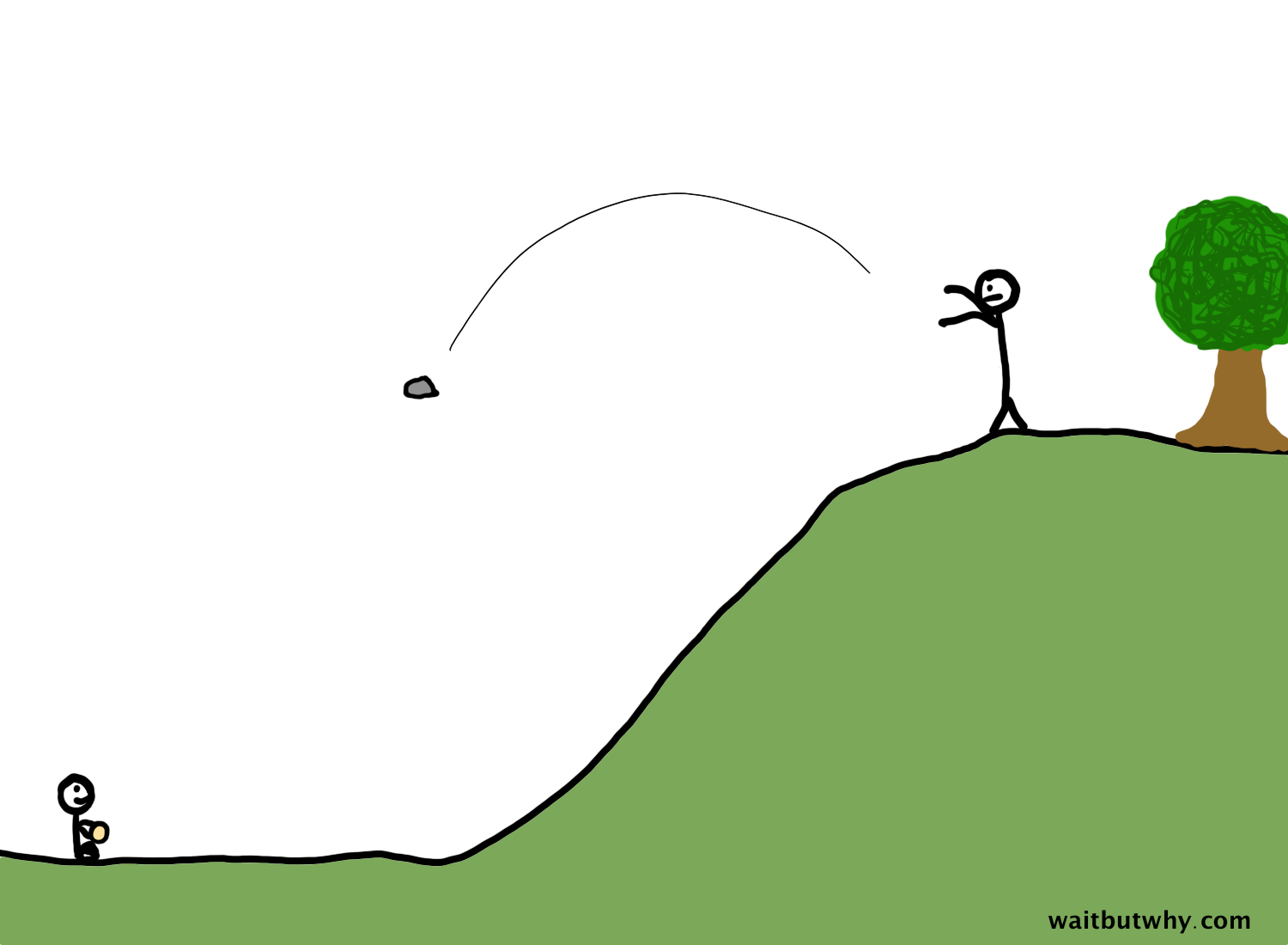

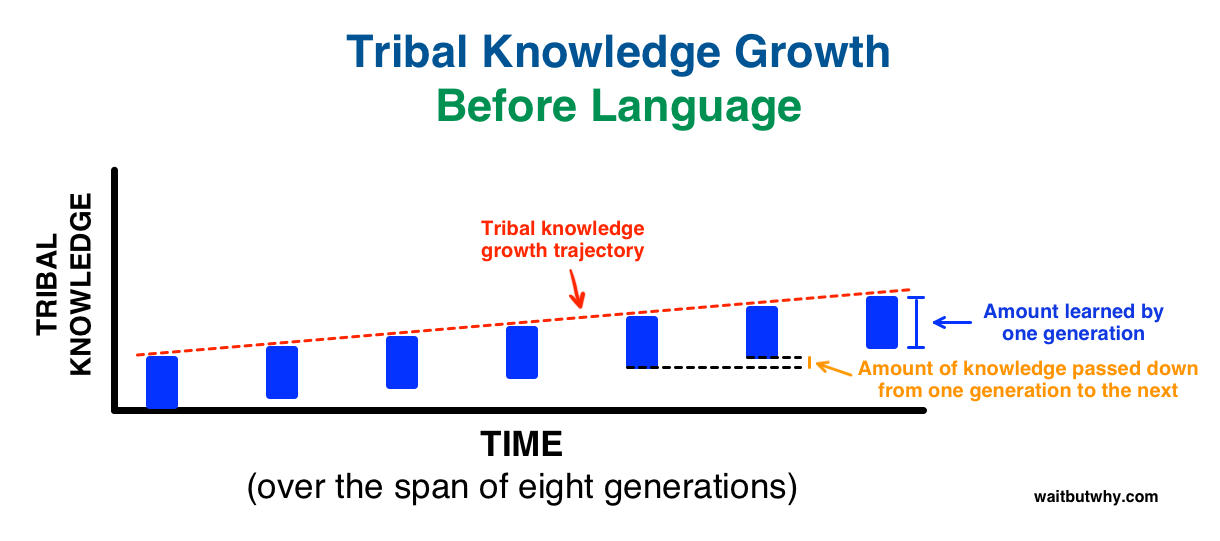

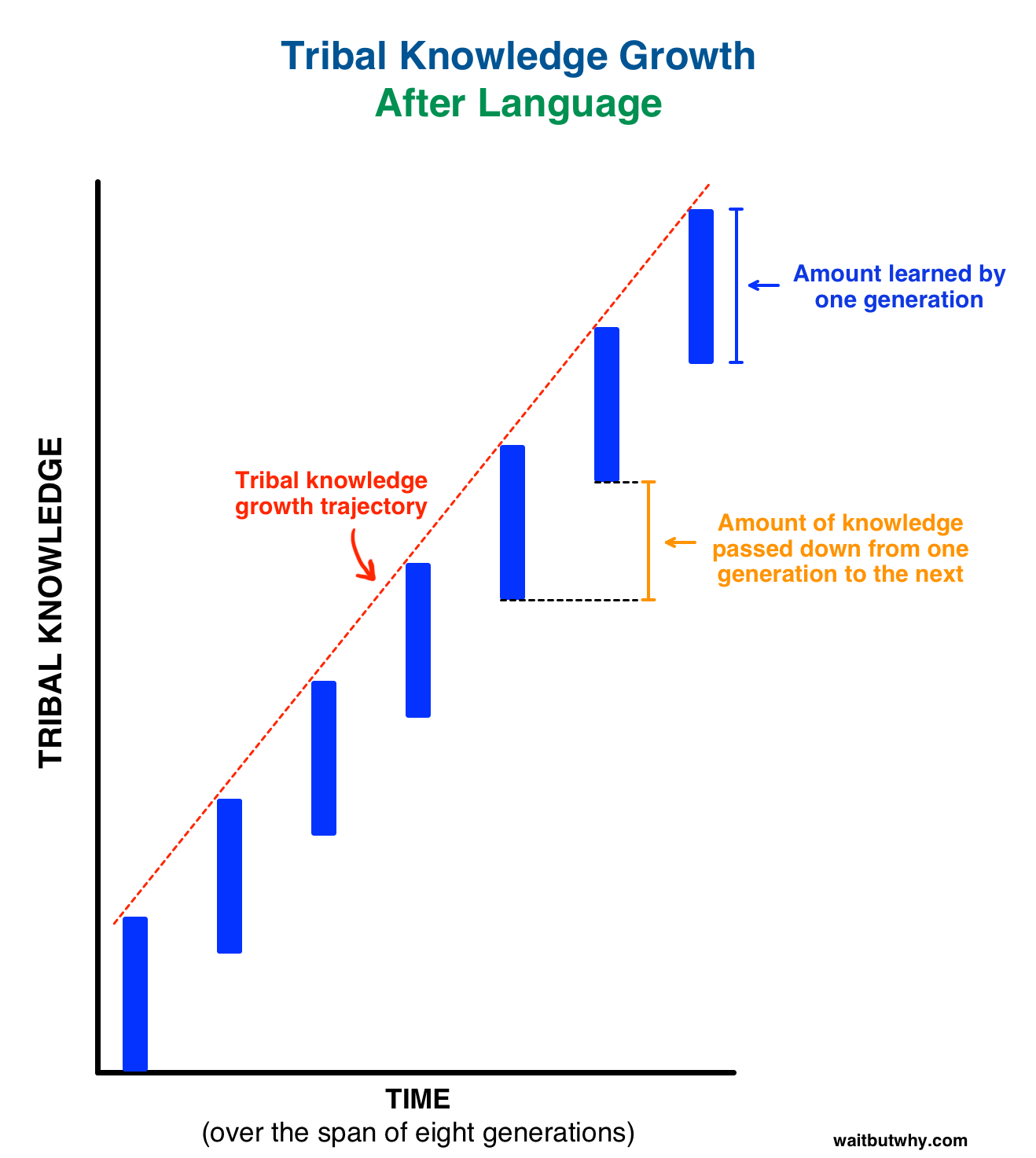

Language allows the best epiphanies of the very smartest people, through the generations, to accumulate into a little collective tower of tribal knowledge—a “greatest hits” of their ancestors’ best “aha!” moments. Every new generation has this knowledge tower installed in their heads as their starting point in life, leading them to new, even better discoveries that build on what their ancestors learned, as the tribe’s knowledge continues to grow bigger and wiser. Language is the difference between this:

And this:

The major trajectory upgrade happens for two reasons. Each generation can learn a lot more new things when they can talk to each other, compare notes, and combine their individual learnings (that’s why the blue bars are so much higher in the second graph). And each generation can successfully pass a higher percentage of their learnings on to the next generation, so knowledge sticks better through time.
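For the code-inclined, here is a toy sketch of those two effects. It is not a real model of anything: the function name and every number in it are made up for illustration. The only assumption is that each generation keeps some fraction of what the previous one knew (`retention`) and adds a fixed amount of new learning (`new_per_generation`).

```python
def knowledge_over_generations(generations, new_per_generation, retention):
    """Return the knowledge level held by each successive generation.

    Each generation inherits `retention` (a fraction from 0 to 1) of the
    previous total and adds `new_per_generation` units of its own learning.
    """
    levels = []
    total = 0.0
    for _ in range(generations):
        total = total * retention + new_per_generation
        levels.append(total)
    return levels


# Hypothetical parameters, chosen only to illustrate the two effects.
# Without language: little new learning, and most of it is lost.
without_language = knowledge_over_generations(
    10, new_per_generation=1.0, retention=0.2
)
# With language: more learning per generation, and most of it sticks.
with_language = knowledge_over_generations(
    10, new_per_generation=3.0, retention=0.9
)

for gen, (a, b) in enumerate(zip(without_language, with_language), start=1):
    print(f"generation {gen:2d}: without={a:6.2f}  with={b:6.2f}")
```

With these made-up numbers, the no-language tribe stalls almost immediately (its ceiling is `new_per_generation / (1 - retention)`, or 1.25 here), while the language tribe is still climbing after ten generations toward a ceiling of 30.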

Knowledge, when shared, becomes like a grand, collective, inter-generational collaboration. Hundreds of generations later, what started as a pro tip about a certain berry to avoid has become an intricate system of planting long rows of the stomach-friendly berry bushes and harvesting them annually. The initial stroke of genius about wildebeest migrations has turned into a system of goat domestication. The spear innovation, through hundreds of incremental tweaks over tens of thousands of years, has become the bow and arrow.

Language gives a group of humans a collective intelligence far greater than individual human intelligence and allows each human to benefit from the collective intelligence as if he came up with it all himself. We think of the bow and arrow as a primitive technology, but raise Einstein in the woods with no existing knowledge and tell him to come up with the best hunting device he can, and he won’t be nearly intelligent or skilled or knowledgeable enough to invent the bow and arrow. Only a collective human effort can pull that off.

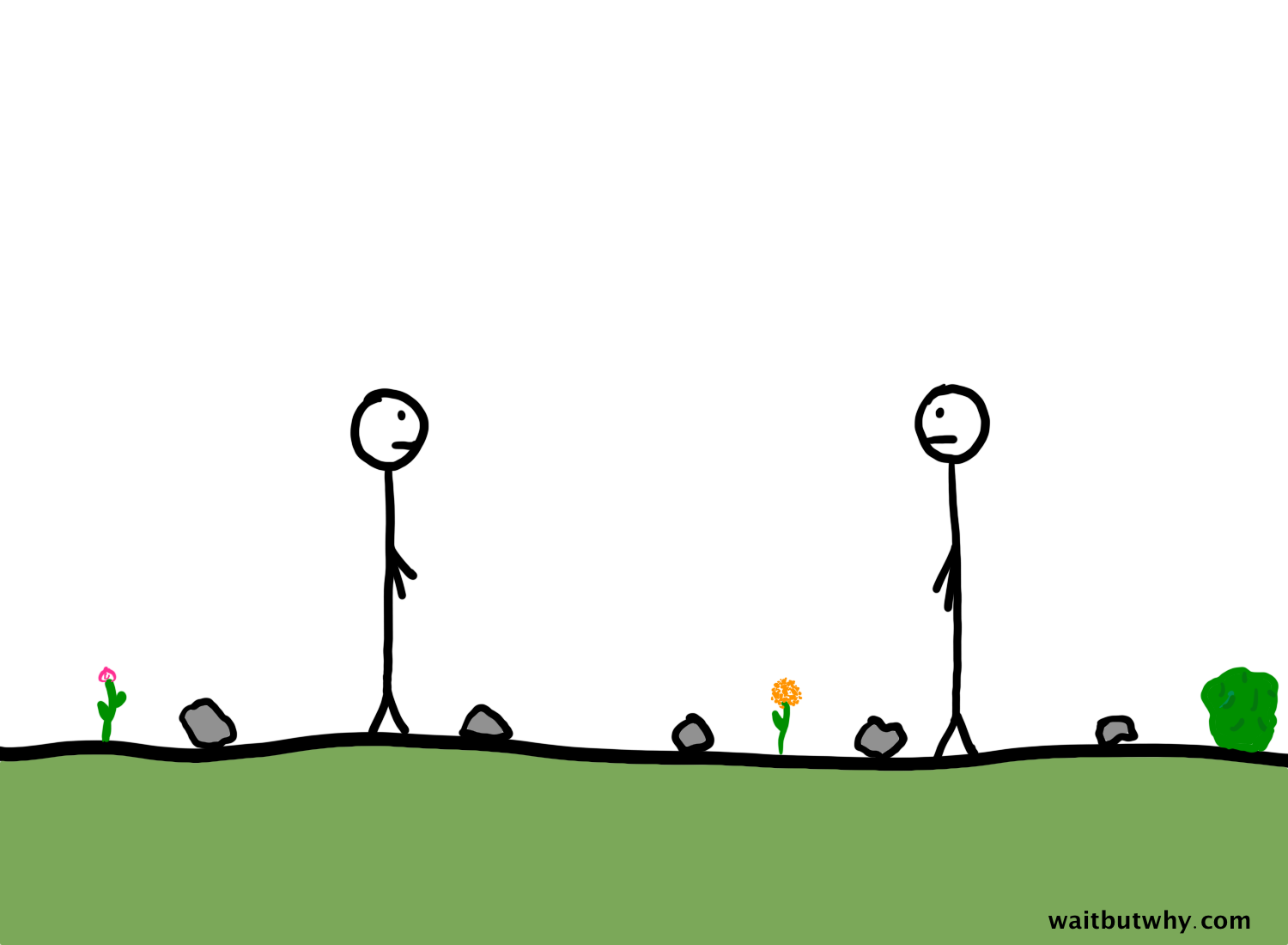

Being able to speak to each other also allowed humans to form complex social structures which, along with advanced technologies like farming and animal domestication, led tribes over time to begin to settle into permanent locations and merge into organized super-tribes. When this happened, each tribe’s tower of accumulated knowledge could be shared with the larger super-tribe, forming a super-tower. Mass cooperation raised the quality of life for everyone, and by 10,000 BC, the first cities had formed.

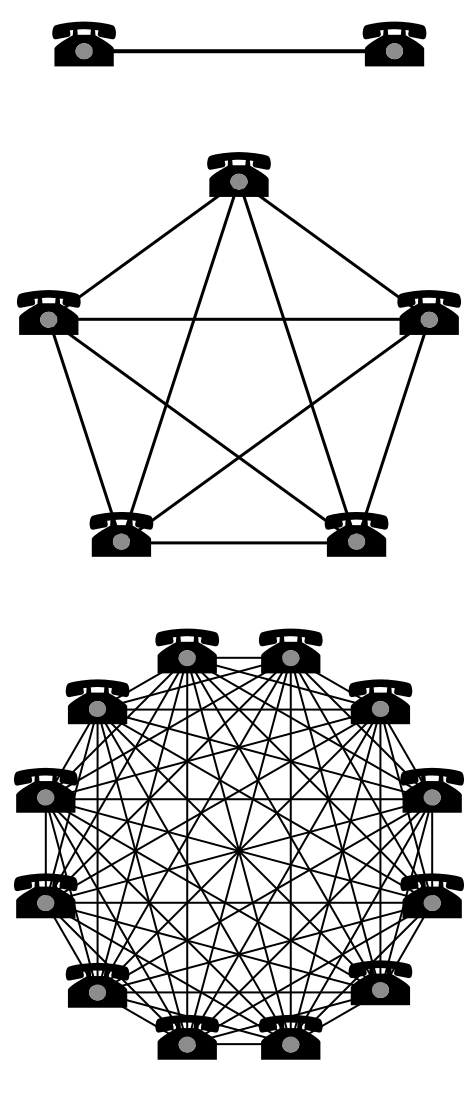

According to Wikipedia, there’s something called Metcalfe’s law, which states that “the value of a telecommunications network is proportional to the square of the number of connected users of the system.” And they include this little chart of old telephones:1

But the same idea applies to people. Two people can have one conversation. Three people have four unique conversation groups (three different two-person conversations and a fourth conversation between all three as a group). Five people have 26. Twenty people have 1,048,555.
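If you want to check those numbers yourself, here is a small sketch. The function names are mine, not anything official. It assumes a "conversation group" means any set of two or more people, so the count is every possible subset of the group minus the one-person subsets and the empty one. The Metcalfe-style value is just the number of users squared, with the proportionality constant set to 1.

```python
from math import comb


def conversation_groups(n: int) -> int:
    """Count the distinct groups of two or more people among n people."""
    # All 2**n subsets, minus the n one-person subsets, minus the empty set.
    return 2**n - n - 1


def conversation_groups_brute_force(n: int) -> int:
    """Same count, done the slow way: add up the groups of each size."""
    return sum(comb(n, size) for size in range(2, n + 1))


def metcalfe_value(n: int) -> int:
    """Metcalfe-style network value: proportional to n squared."""
    return n * n


for n in (2, 3, 5, 20):
    assert conversation_groups(n) == conversation_groups_brute_force(n)
    print(f"{n:2d} people: {conversation_groups(n):>9,} groups, "
          f"n squared = {metcalfe_value(n)}")
```

One thing the sketch makes visible: the group count grows much faster than the n-squared figure in Metcalfe’s law as the number of people gets large, which only strengthens the point about cities.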

So not only did the members of a city benefit from a huge knowledge tower as a foundation, but Metcalfe’s law means that the number of possible conversations skyrocketed to an unprecedented level. More conversations meant more ideas bumping up against each other, which led to many more discoveries clicking together, and the pace of innovation soared.

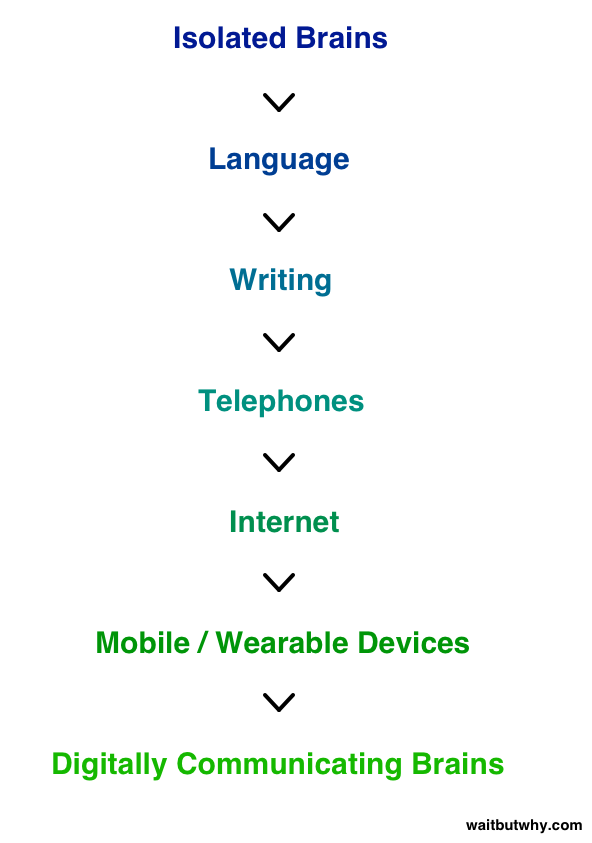

Humans soon mastered agriculture, which freed many people up to think about all kinds of other ideas, and it wasn’t long before they stumbled upon a new, giant breakthrough: writing.

Historians think humans first started writing things down about 5,000 to 6,000 years ago. Up until that point, the collective knowledge tower was stored only in a network of people’s memories and accessed only through livestream word-of-mouth communication. This system worked in small tribes, but with a vastly larger body of knowledge shared among a vastly larger group of people, memories alone would have had a hard time supporting it all, and most of it would have ended up lost.

If language let humans send a thought from one brain to another, writing let them stick a thought onto a physical object, like a stone, where it could live forever. When people began writing on thin sheets of parchment or paper, huge fields of knowledge that would take weeks to be conveyed by word of mouth could be compressed into a book or a scroll you could hold in your hand. The human collective knowledge tower now lived in physical form, neatly organized on the shelves of city libraries and universities.

These shelves became humanity’s grand instruction manual on everything. They guided humanity toward new inventions and discoveries, and those would in turn become new books on the shelves, as the grand instruction manual built upon itself. The manual taught us the intricacies of trade and currency, of shipbuilding and architecture, of medicine and astronomy. Each generation began life with a higher floor of knowledge and technology than the last, and progress continued to accelerate.

But painstakingly handwritten books were treated like treasures,2 and were likely accessible only to the extreme elite (in the mid-15th century, there were only 30,000 books in all of Europe). And then came another breakthrough: the printing press.

In the 15th century, the beardy Johannes Gutenberg came up with a way to create multiple identical copies of the same book, much more quickly and cheaply than ever before. (Or, more accurately, when Gutenberg was born, humanity had already figured out the first 95% of how to invent the printing press, and Gutenberg, with that knowledge as his starting point, invented the last 5%.) (Oh, also, Gutenberg didn’t invent the printing press, the Chinese did a bunch of centuries earlier. Pretty reliable rule is that everything you think was invented somewhere other than China was probably actually invented in China.) Here’s how it worked:

It Turns Out Gutenberg Isn’t Actually Impressive Blue Box

To prepare to write this blue box, I found this video explaining how Gutenberg’s press worked and was surprised to find myself unimpressed. I always assumed Gutenberg had made some genius machine, but it turns out he just created a bunch of stamps of letters and punctuation and manually arranged them as the page of a book and then put ink on them and pressed a piece of paper onto the letters, and that was one book page. While he had the letters all set up for that page, he’d make a bunch of copies. Then he’d spend forever manually rearranging the stamps (this is the “movable type” part) into the next page, and then do a bunch of copies of that. His first project was 180 copies of the Bible,3 which took him and his employees two years.

That’s Gutenberg’s thing? A bunch of stamps? I feel like I could have come up with that pretty easily. Not really clear why it took humanity 5,000 years to go from figuring out how to write to creating a bunch of manual stamps. I guess it’s not that I’m unimpressed with Gutenberg (I’m neutral on Gutenberg, he’s fine); it’s that I’m unimpressed with everyone else.

Anyway, despite how disappointing Gutenberg’s press turned out to be, it was a huge leap forward for humanity’s ability to spread information. Over the coming centuries, printing technology rapidly improved, bringing the number of pages a machine could print in an hour from about 25 in Gutenberg’s time4 up nearly 100-fold to 2,400 by the early 19th century.2

Mass-produced books allowed information to spread like wildfire, and with books being made increasingly affordable, no longer was education an elite privilege—millions now had access to books, and literacy rates shot upwards. One person’s thoughts could now reach millions of people. The era of mass communication had begun.

The avalanche of books allowed knowledge to transcend borders, as the world’s regional knowledge towers finally merged into one species-wide knowledge tower that stretched into the stratosphere.

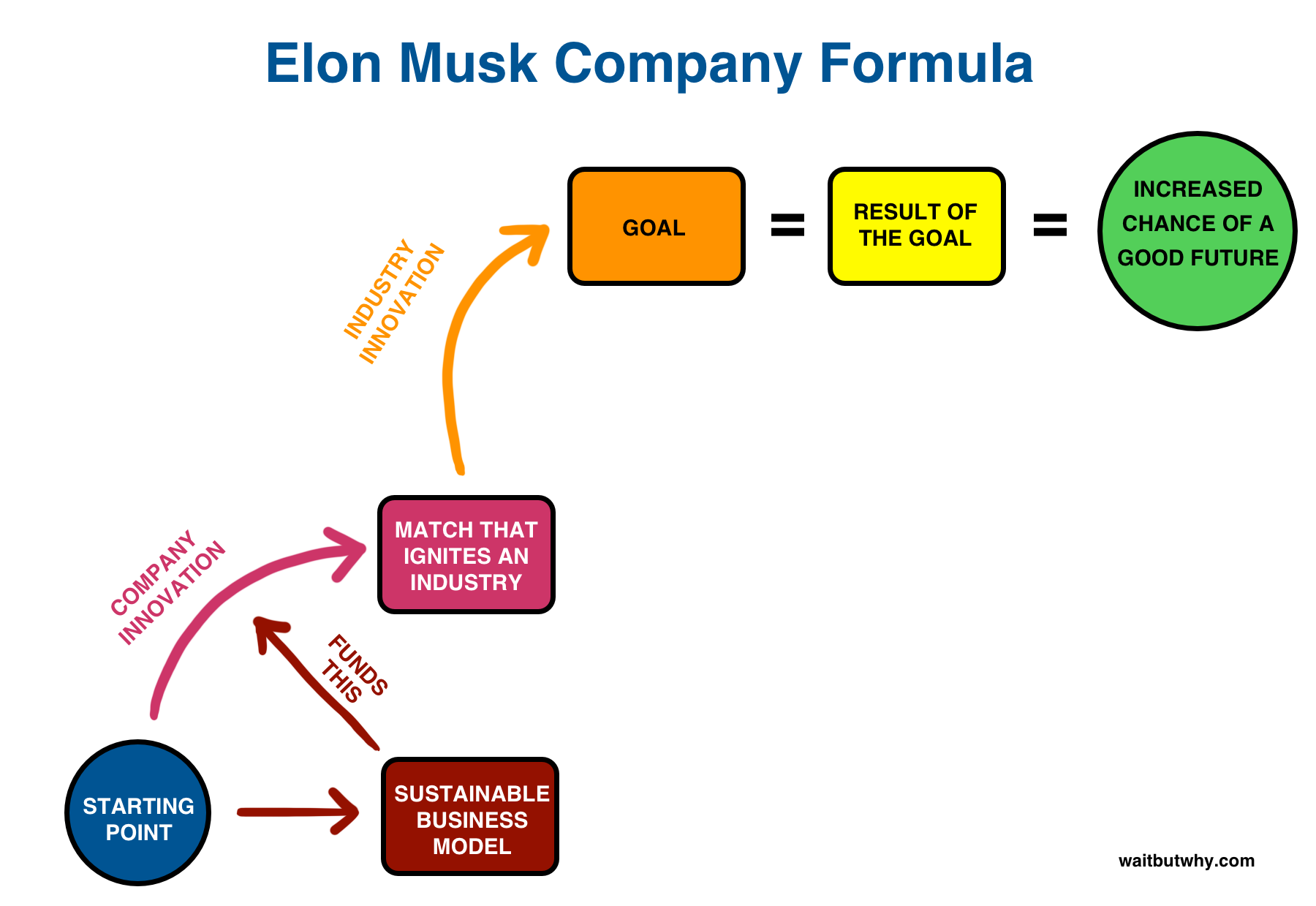

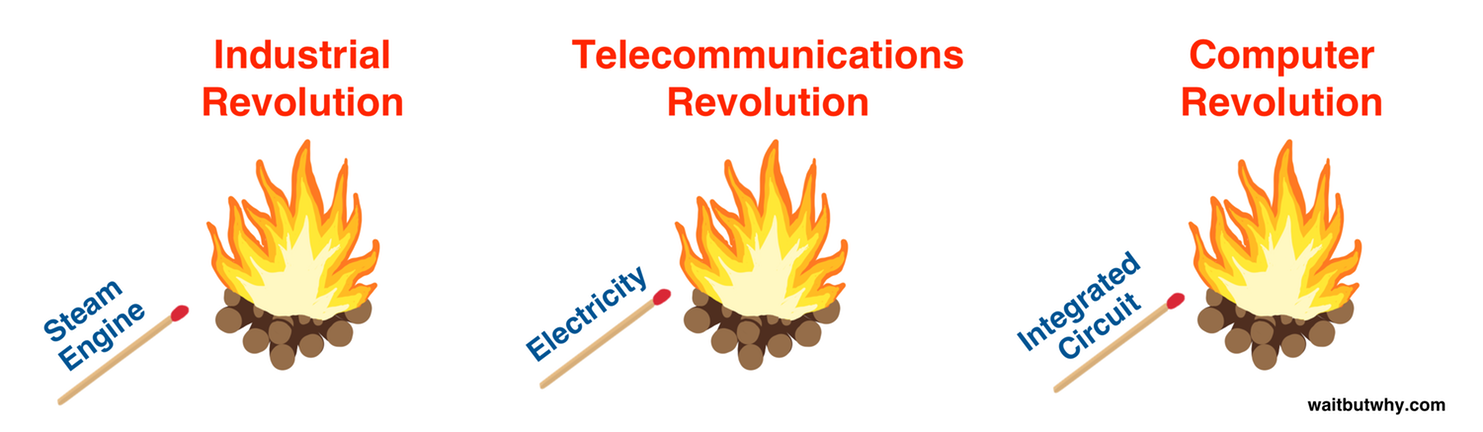

The better we could communicate on a mass scale, the more our species began to function like a single organism, with humanity’s collective knowledge tower as its brain and each individual human brain like a nerve or a muscle fiber in its body. With the era of mass communication upon us, the collective human organism—the Human Colossus—rose into existence.

With the entire body of collective human knowledge in its brain, the Human Colossus began inventing things no human could have dreamed of inventing on their own—things that would have seemed like absurd science fiction to people only a few generations before.

It turned our ox-drawn carts into speedy locomotives and our horse-and-buggies into shiny metal cars. It turned our lanterns into lightbulbs and written letters into telephone calls and factory workers into industrial machines. It sent us soaring through the skies and out into space. It redefined the meaning of “mass communication” by giving us radio and TV, opening up a world where a thought in someone’s head could be beamed instantly into the brains of a billion people.

If an individual human’s core motivation is to pass its genes on, which keeps the species going, the forces of macroeconomics make the Human Colossus’s core motivation to create value, which means it tends to want to invent newer and better technology. Every time it does that, it becomes an even better inventor, which means it can invent new stuff even faster.

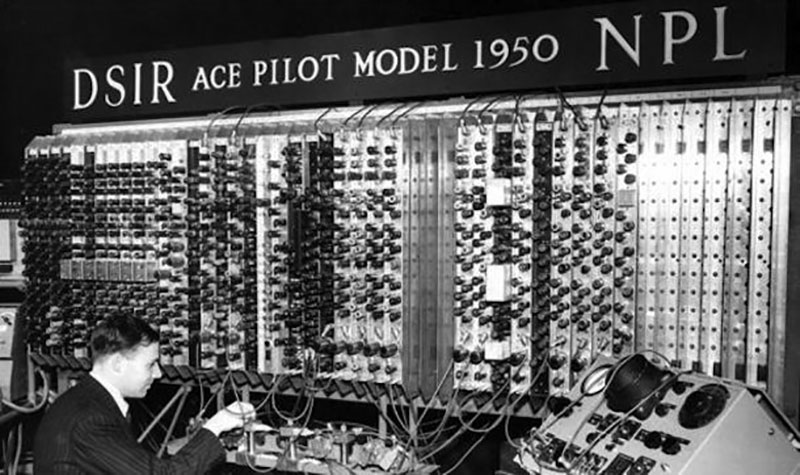

And around the middle of the 20th century, the Human Colossus began working on its most ambitious invention yet.

The Colossus had figured out a long time ago that the best way to create value was to invent value-creating machines. Machines were better than humans at doing many kinds of work, which generated a flood of new resources that could be put towards value creation. Perhaps even more importantly, machine labor freed up huge portions of human time and energy—i.e. huge portions of the Colossus itself—to focus on innovation. It had already outsourced the work of our arms to factory machines and the work of our legs to driving machines, and it had done so through the power of its brain—now what if, somehow, it could outsource the work of the brain itself to a machine?

The first digital computers sprang up in the 1940s.

One kind of brain labor computers could do was the work of information storage—they were remembering machines. But we already knew how to outsource our memories using books, just like we had been outsourcing our leg labor to horses long before cars provided a far better solution. Computers were simply a memory-outsourcing upgrade.

Information-processing was a different story—a type of brain labor we had never figured out how to outsource. The Human Colossus had always had to do all of its own computing. Computers changed that.

Factory machines allowed us to outsource a physical process—we put a material in, the machines physically processed it and spit out the results. Computers could do the same thing for information processing. A software program was like a factory machine for information processes.

These new information-storage/organizing/processing machines proved to be useful. Computers began to play a central role in the day-to-day operation of companies and governments. By the late 1980s, it was common for individual people to own their own personal brain assistant.

Then came another leap.

In the early 90s, we taught millions of isolated machine-brains how to communicate with one another. They formed a worldwide computer network, and a new giant was born—the Computer Colossus.

The Computer Colossus and the great network it formed were like Popeye spinach for the Human Colossus.

If individual human brains are the nerves and muscle fibers of the Human Colossus, the internet gave the giant its first legit nervous system. Each of its nodes was now connected to all of its other nodes, and information could travel through the system at light speed. This made the Human Colossus a faster, more fluid thinker.

The internet gave billions of humans instant, free, easily-searchable access to the entire human knowledge tower (which by now stretched past the moon). This made the Human Colossus a smarter, faster learner.

And if individual computers had served as brain extensions for individual people, companies, or governments, the Computer Colossus was a brain extension for the entire Human Colossus itself.

With its first real nervous system, an upgraded brain, and a powerful new tool, the Human Colossus took inventing to a whole new level—and noticing how useful its new computer friend was, it focused a large portion of its efforts on advancing computer technology.

It figured out how to make computers faster and cheaper. It made the internet faster and wireless. It made computing chips smaller and smaller until there was a powerful computer in everyone’s pocket.

Each innovation was like a new truckload of spinach for the Human Colossus.

But today, the Human Colossus has its eyes set on an even bigger idea than more spinach. Computers have been a game-changer, allowing humanity to outsource many of its brain-related tasks and better function as a single organism. But there’s one kind of brain labor computers still can’t quite do. Thinking.

Computers can compute and organize and run complex software—software that can even learn on its own. But they can’t think in the way humans can. The Human Colossus knows that everything it’s built has originated with its ability to reason creatively and independently—and it knows that the ultimate brain extension tool would be one that can really, actually, legitimately think. It has no idea what it will be like when the Computer Colossus can think for itself—when it one day opens its eyes and becomes a real colossus—but with its core goal to create value and push technology to its limits, the Human Colossus is determined to find out.

___________

We’ll come back here in a bit. First, we have some learning to do.

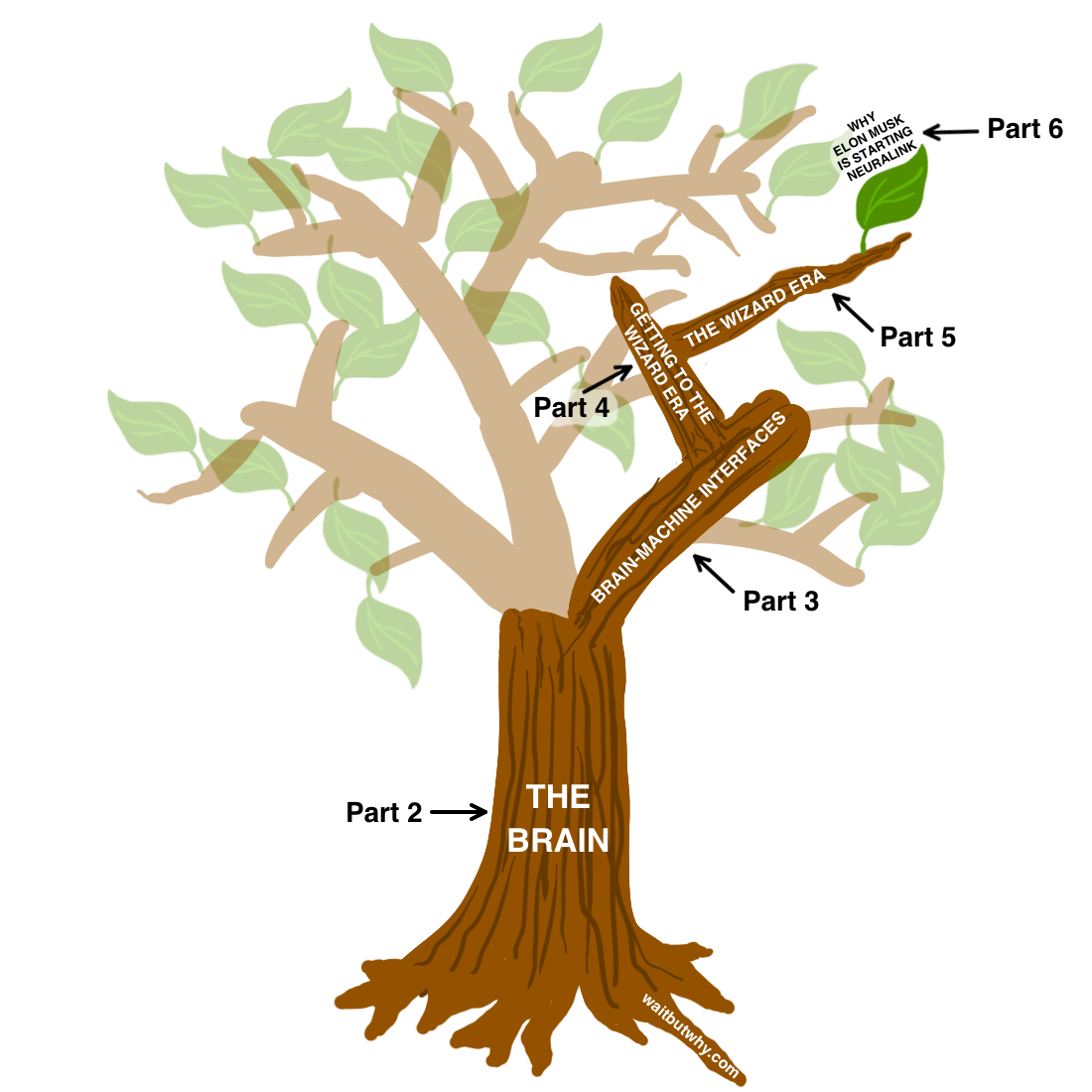

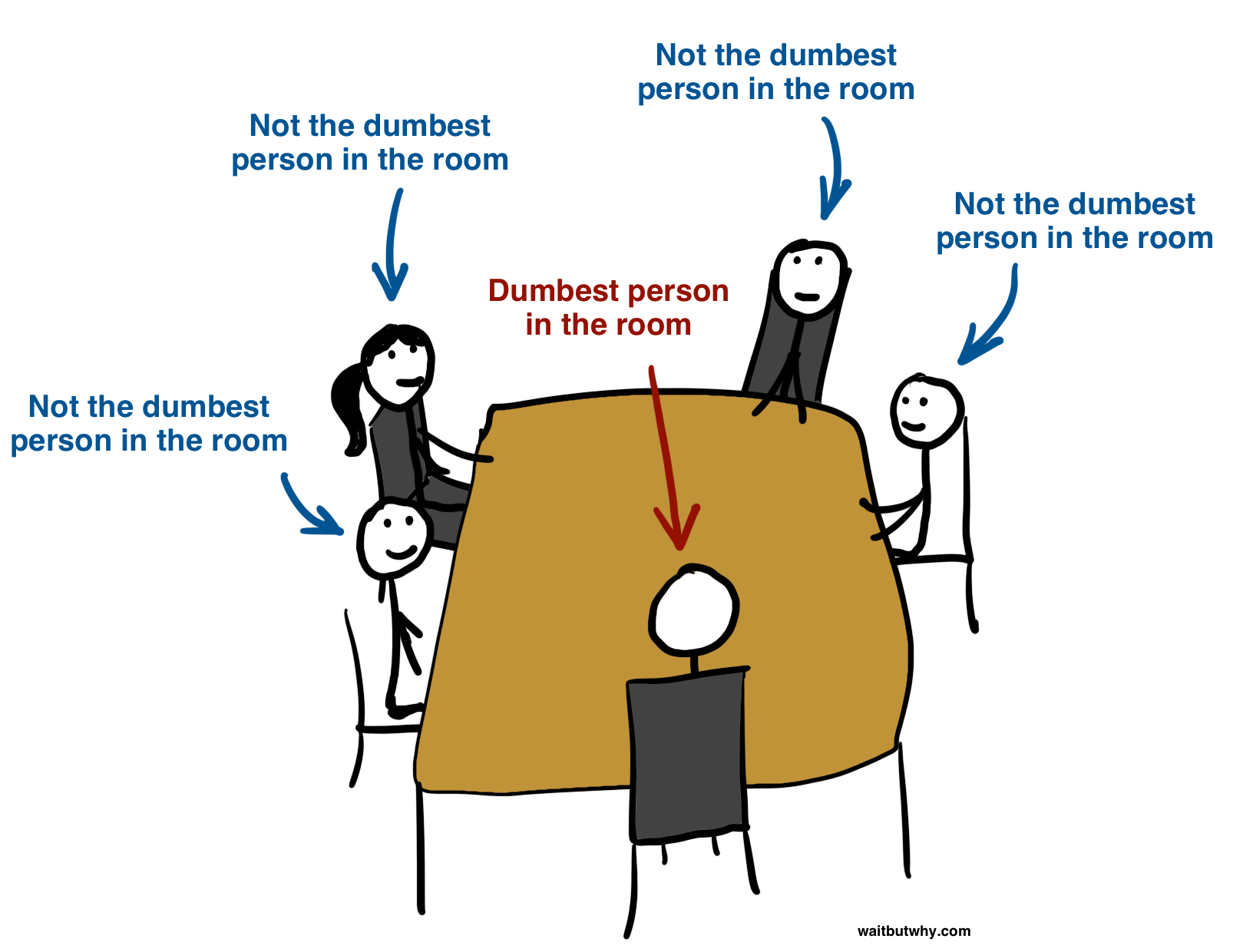

As we’ve discussed before, knowledge works like a tree. If you try to learn a branch or a leaf of a topic before you have a solid tree trunk foundation of understanding in your head, it won’t work. The branches and leaves will have nothing to stick to, so they’ll fall right out of your head.

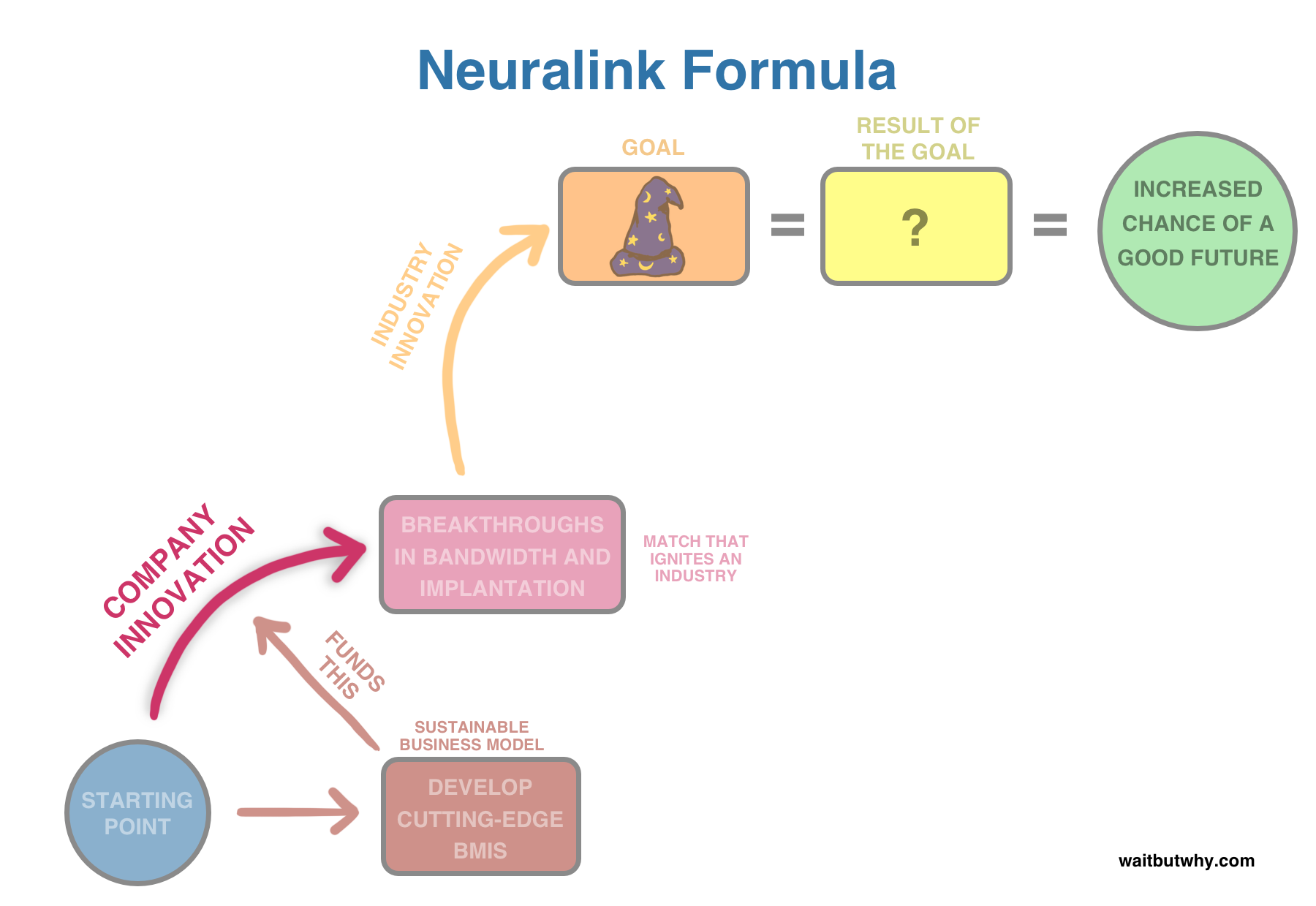

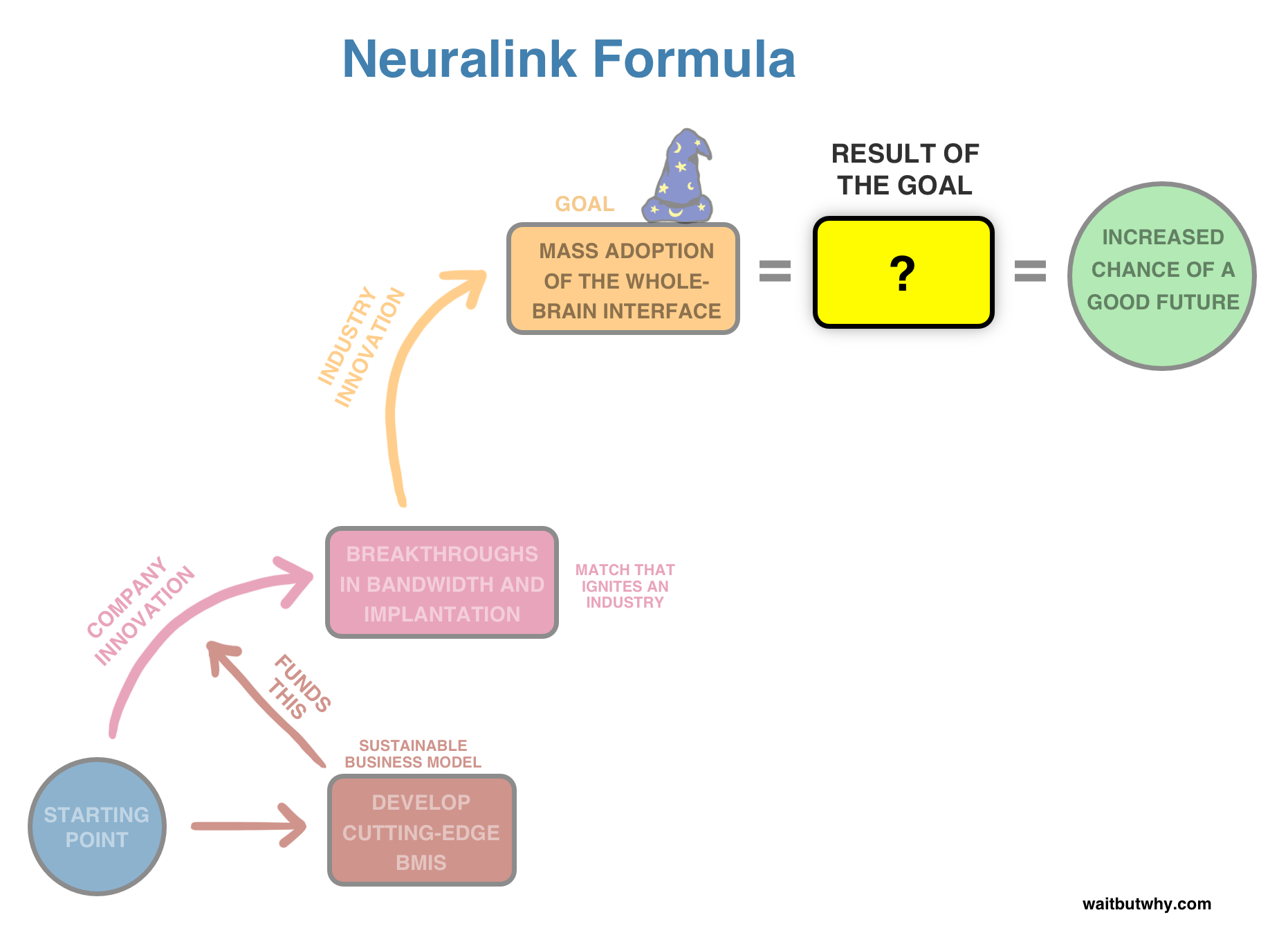

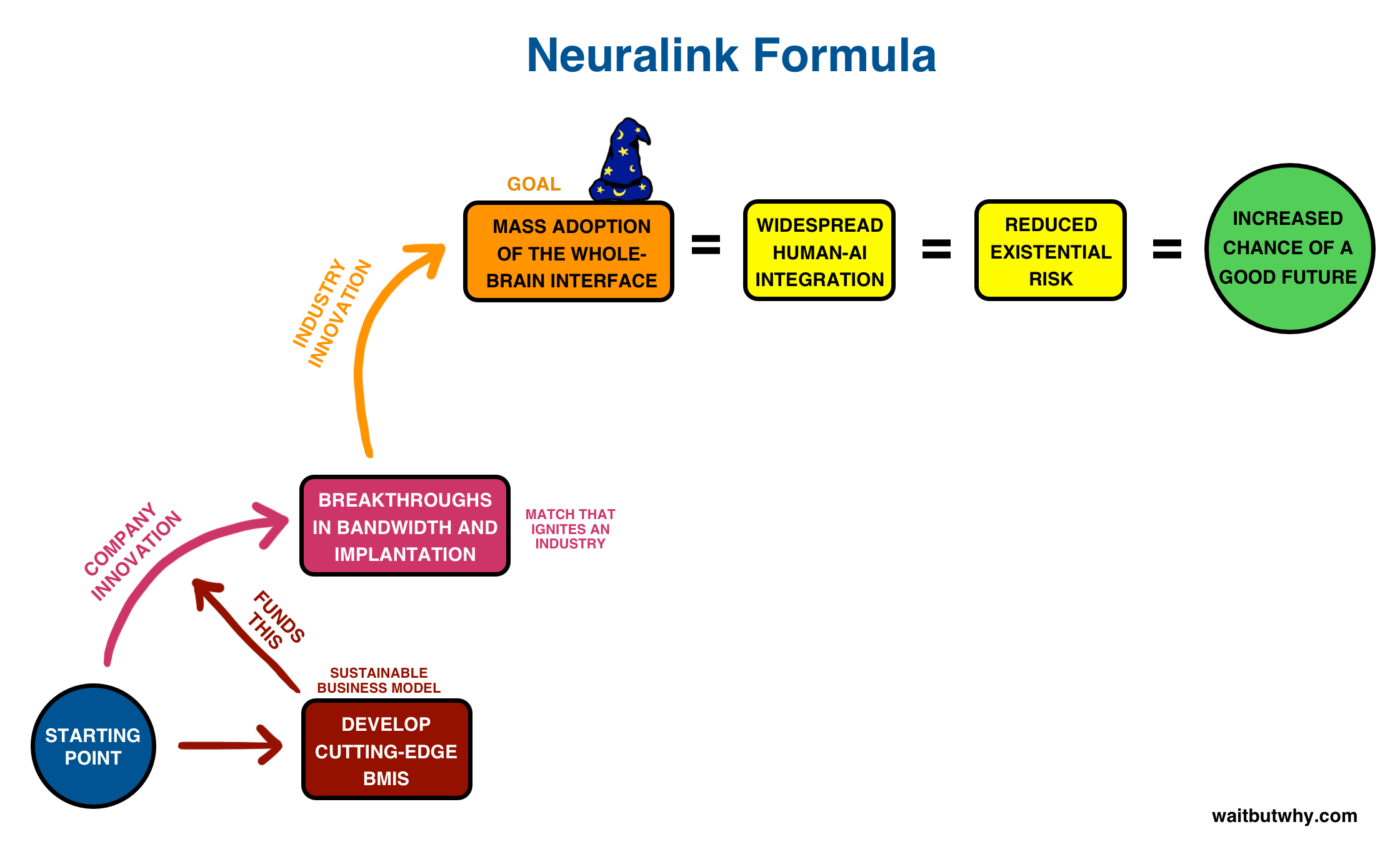

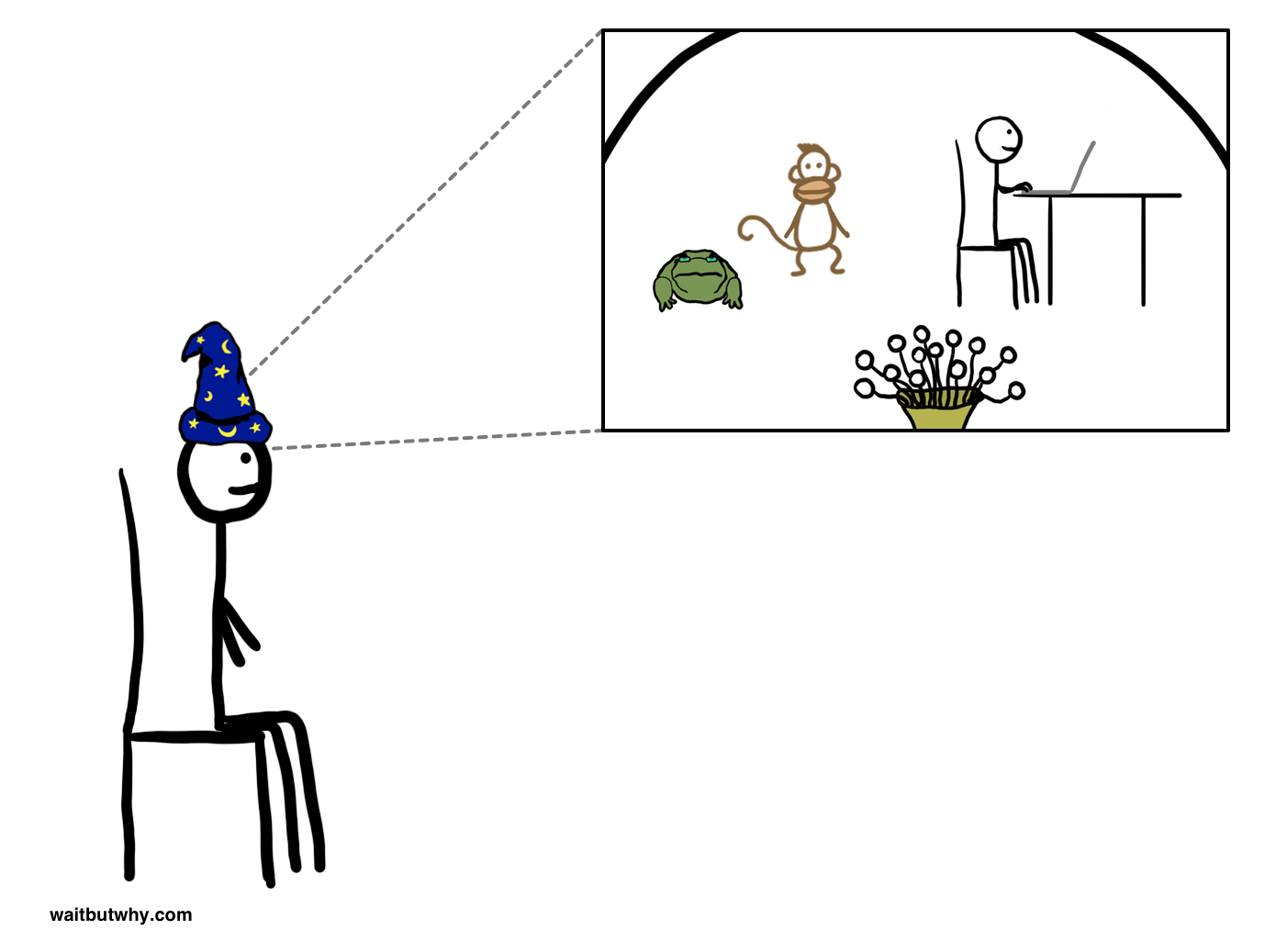

We’ve established that Elon Musk wants to build a wizard hat for the brain, and understanding why he wants to do that is the key to understanding Neuralink—and to understanding what our future might actually be like.

But none of that will make much sense until we really get into the truly mind-blowing concept of what a wizard hat is, what it might be like to wear one, and how we get there from where we are today.

The foundation for that discussion is an understanding of what brain-machine interfaces are, how they work, and where the technology is today.

Finally, BMIs themselves are just a larger branch—not the tree’s trunk. In order to really understand BMIs and how they work, we need to understand the brain. Getting how the brain works is our tree trunk.

So we’ll start with the brain, which will prepare us to learn about BMIs, which will teach us about what it’ll take to build a wizard hat, and that’ll set things up for an insane discussion about the future—which will get our heads right where they need to be to wrap themselves around why Elon thinks a wizard hat is such a critical piece of our future. And by the time we reach the end, this whole thing should click into place.

Part 2: The Brain

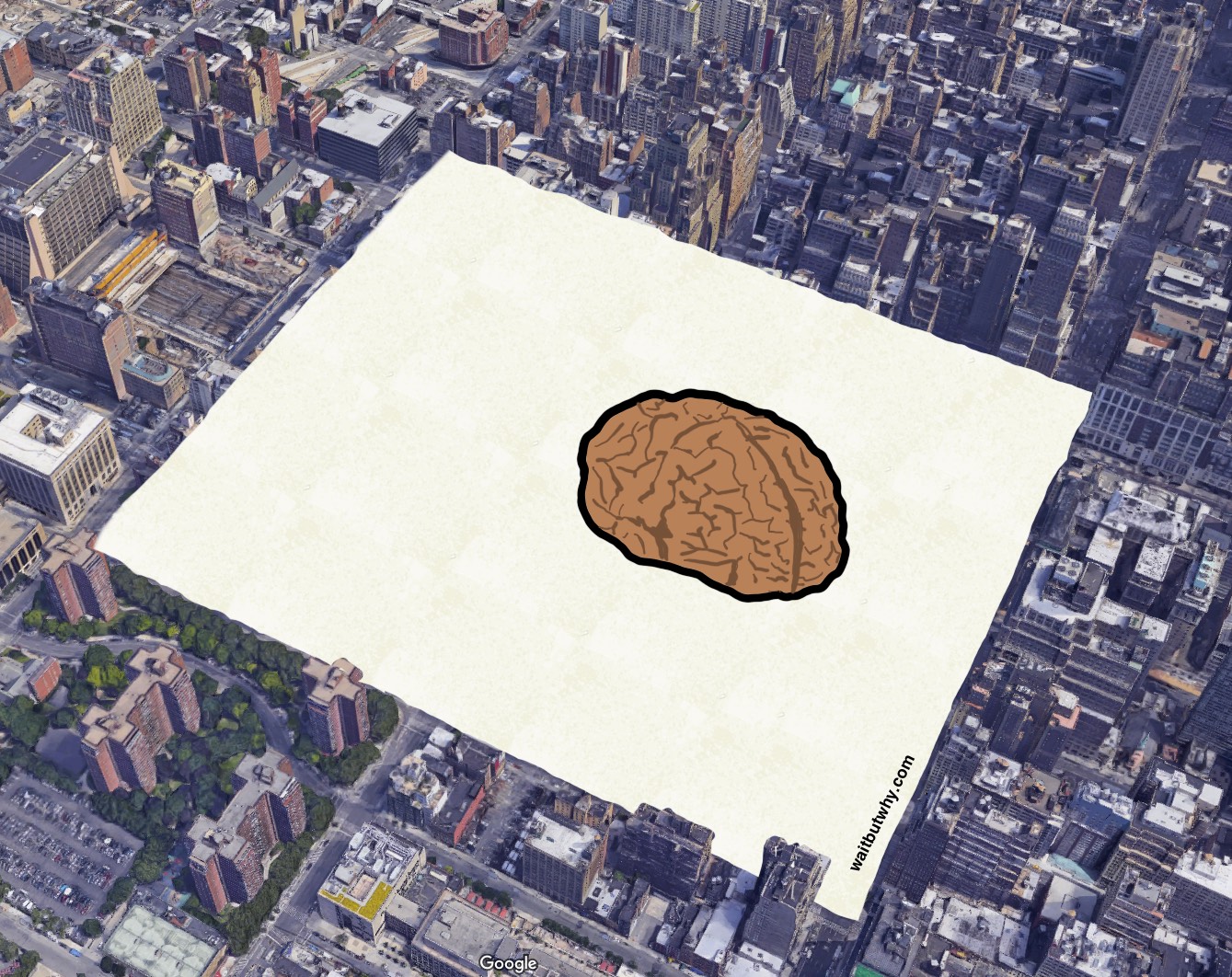

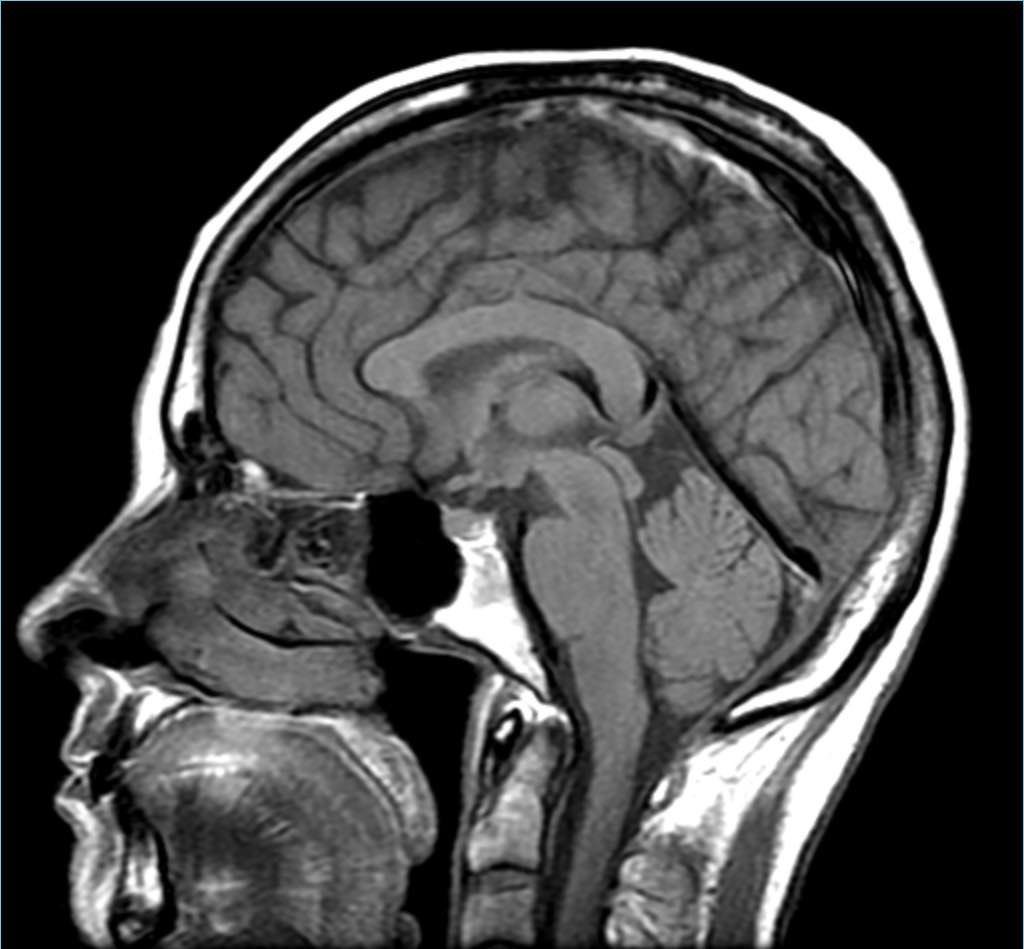

This post was a nice reminder of why I like working with a brain that looks nice and cute like this:

Because the real brain is extremely uncute and upsetting-looking. People are gross.

But I’ve been living in a shimmery, oozy, blood-vessel-lined Google Images hell for the past month, and now you have to deal with it too. So just settle in.

We’ll start outside the head. One thing I will give to biology is that it’s sometimes very satisfying,5 and the brain has some satisfying things going on. The first of which is that there’s a real Russian doll situation going on with your head.

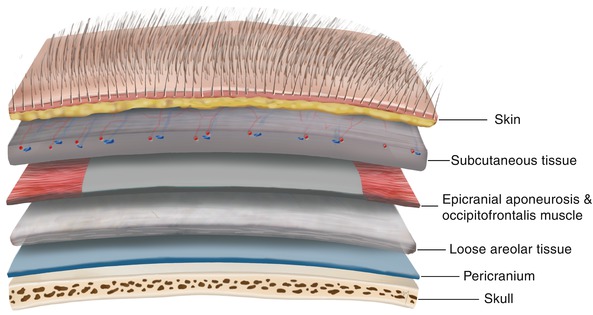

You have your hair, and under that is your scalp, and then you think your skull comes next—but it’s actually like 19 things and then your skull:3

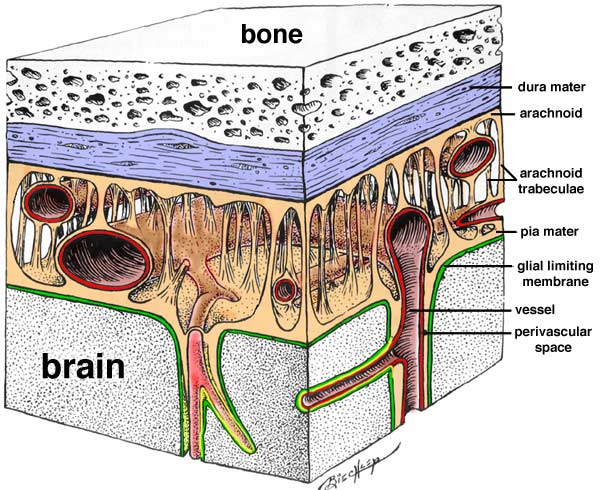

Then below your skull,6 another whole bunch of things are going on before you get to the brain4:

Your brain has three membranes around it underneath the skull:

On the outside, there’s the dura mater (which means “hard mother” in Latin), a firm, rugged, waterproof layer. The dura is flush with the skull. I’ve heard it said that the brain has no pain sensory area, but the dura actually does—it’s about as sensitive as the skin on your face—and pressure on or contusions in the dura often account for people’s bad headaches.

Then below that there’s the arachnoid mater (“spider mother”), which is a layer of skin and then an open space with these stretchy-looking fibers. I always thought my brain was just floating aimlessly in my head in some kind of fluid, but actually, the only real space gap between the outside of the brain and the inner wall of the skull is this arachnoid business. Those fibers stabilize the brain in position so it can’t move too much, and they act as a shock absorber when your head bumps into something. This area is filled with cerebrospinal fluid, which keeps the brain mostly buoyant, since the brain’s density is similar to that of water.

Finally you have the pia mater (“soft mother”), a fine, delicate layer of skin that’s fused with the outside of the brain. You know how when you see a brain, it’s always covered with icky blood vessels? Those aren’t actually on the brain’s surface, they’re embedded in the pia. (For the non-squeamish, here’s a video of a professor peeling the pia off of a human brain.)

Here’s the full overview, using the head of what looks like probably a pig:

From the left you have the skin (the pink), then two scalp layers, then the skull, then the dura, arachnoid, and on the far right, just the brain covered by the pia.

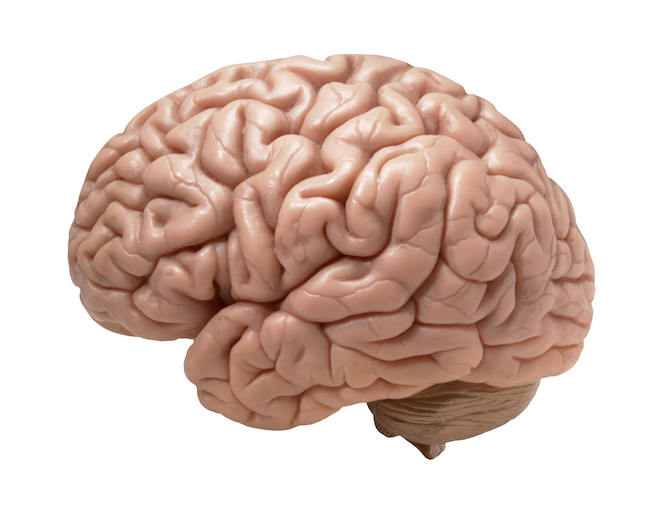

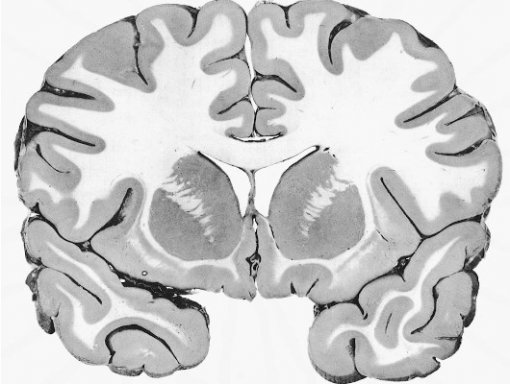

Once we’ve stripped everything down, we’re left with this silly boy:5

This ridiculous-looking thing is the most complex known object in the universe—three pounds of what neuroengineer Tim Hanson calls “one of the most information-dense, structured, and self-structuring matter known.”6 All while operating on only 20 watts of power (an equivalently powerful computer runs on 24,000,000 watts).

It’s also what MIT professor Polina Anikeeva calls “soft pudding you could scoop with a spoon.” Brain surgeon Ben Rapoport described it to me more scientifically, as “somewhere between pudding and jello.” He explained that if you placed a brain on a table, gravity would make it lose its shape and flatten out a bit, kind of like a jellyfish. We often don’t think of the brain as so smooshy, because it’s normally suspended in water.

But this is what we all are. You look in the mirror and see your body and your face and you think that’s you—but that’s really just the machine you’re riding in. What you actually are is a zany-looking ball of jello. I hope that’s okay.

And given how weird that is, you can’t really blame Aristotle, or the ancient Egyptians, or many others, for assuming that the brain was somewhat-meaningless “cranial stuffing” (Aristotle believed the heart was the center of intelligence).7

Eventually, humans figured out the deal. But only kind of.

Professor Krishna Shenoy likens our understanding of the brain to humanity’s grasp on the world map in the early 1500s.

Another professor, Jeff Lichtman, is even harsher. He starts off his courses by asking his students the question, “If everything you need to know about the brain is a mile, how far have we walked in this mile?” He says students give answers like three-quarters of a mile, half a mile, a quarter of a mile, etc.—but that he believes the real answer is “about three inches.”8

A third professor, neuroscientist Moran Cerf, shared with me an old neuroscience saying that points out why trying to master the brain is a bit of a catch-22: “If the human brain were so simple that we could understand it, we would be so simple that we couldn’t.”

Maybe with the help of the great knowledge tower our species is building, we can get there at some point. For now, let’s go through what we do currently know about the jellyfish in our heads—starting with the big picture.

The brain, zoomed out

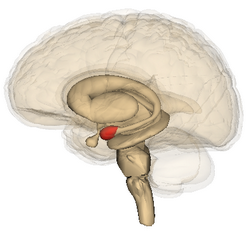

Let’s look at the major sections of the brain using a hemisphere cross section. So this is what the brain looks like in your head:

Now let’s take the brain out of the head and remove the left hemisphere, which gives us a good view of the inside.9

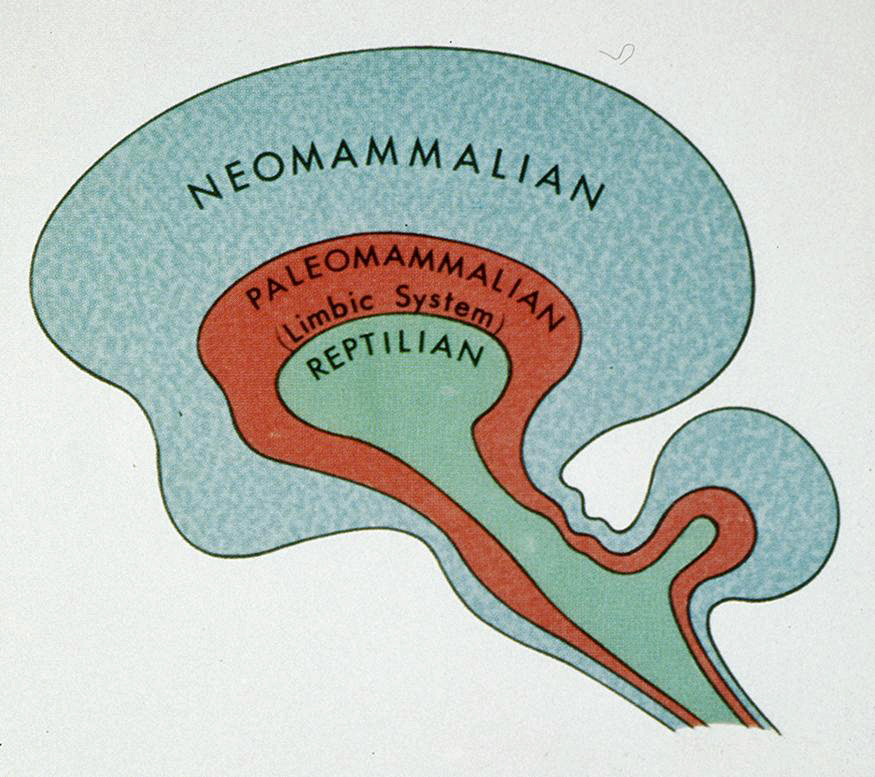

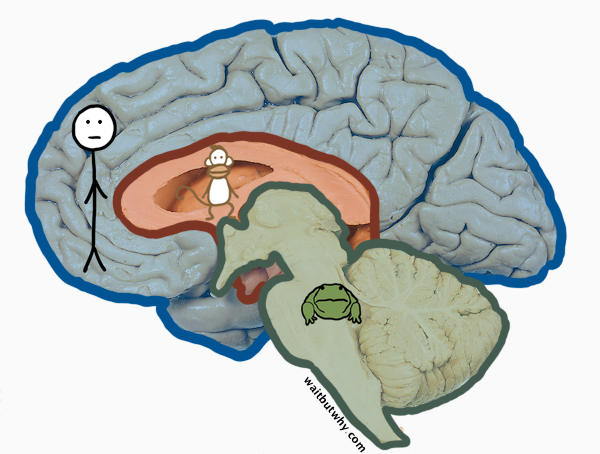

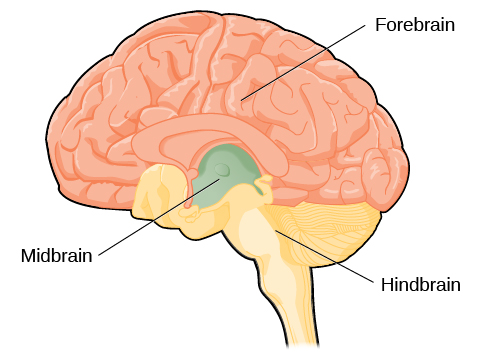

Neurologist Paul MacLean made a simple diagram that illustrates the basic idea we talked about earlier of the reptile brain coming first in evolution, then being built upon by mammals, and finally being built upon again to give us our brain trifecta.

Here’s how this essentially maps out on our real brain:

Let’s take a look at each section:

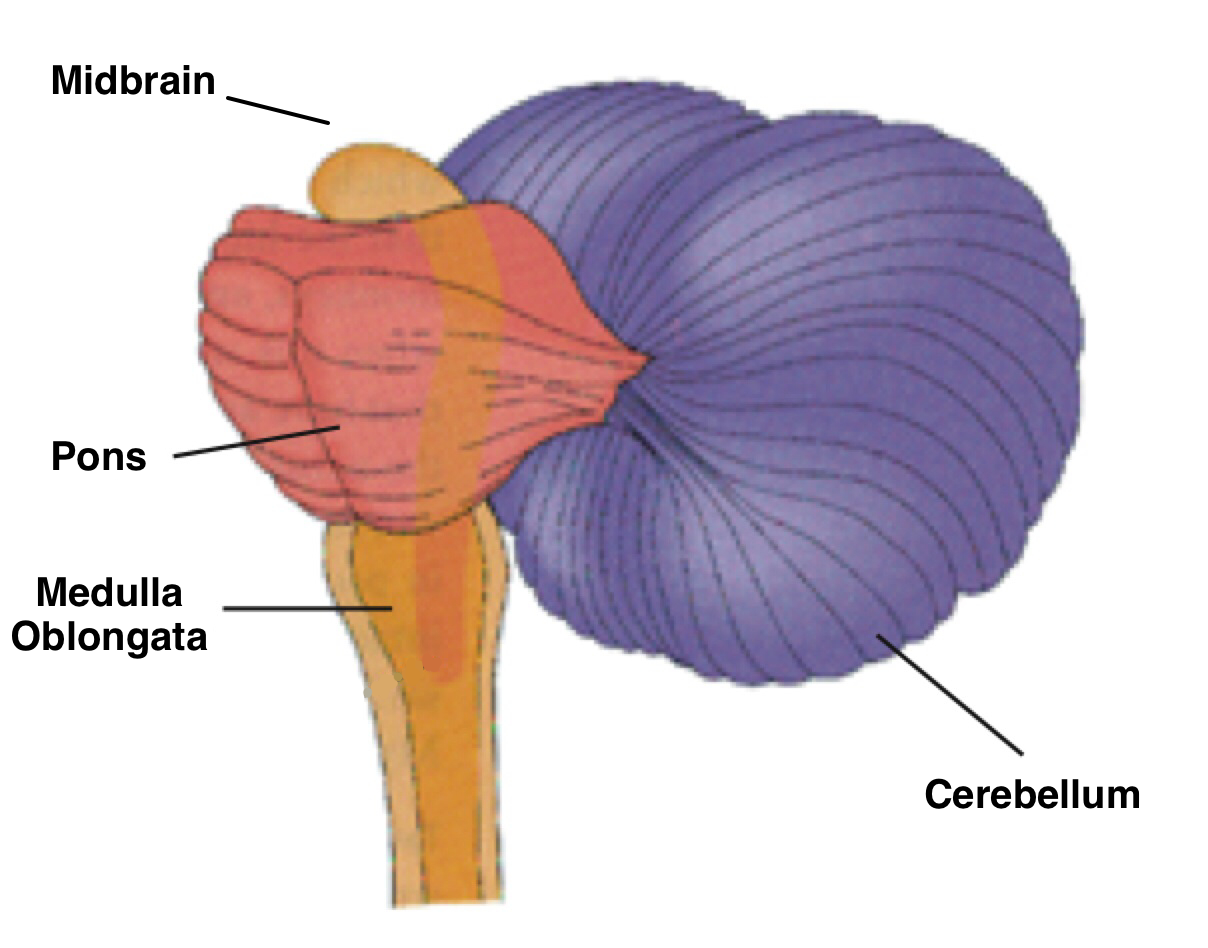

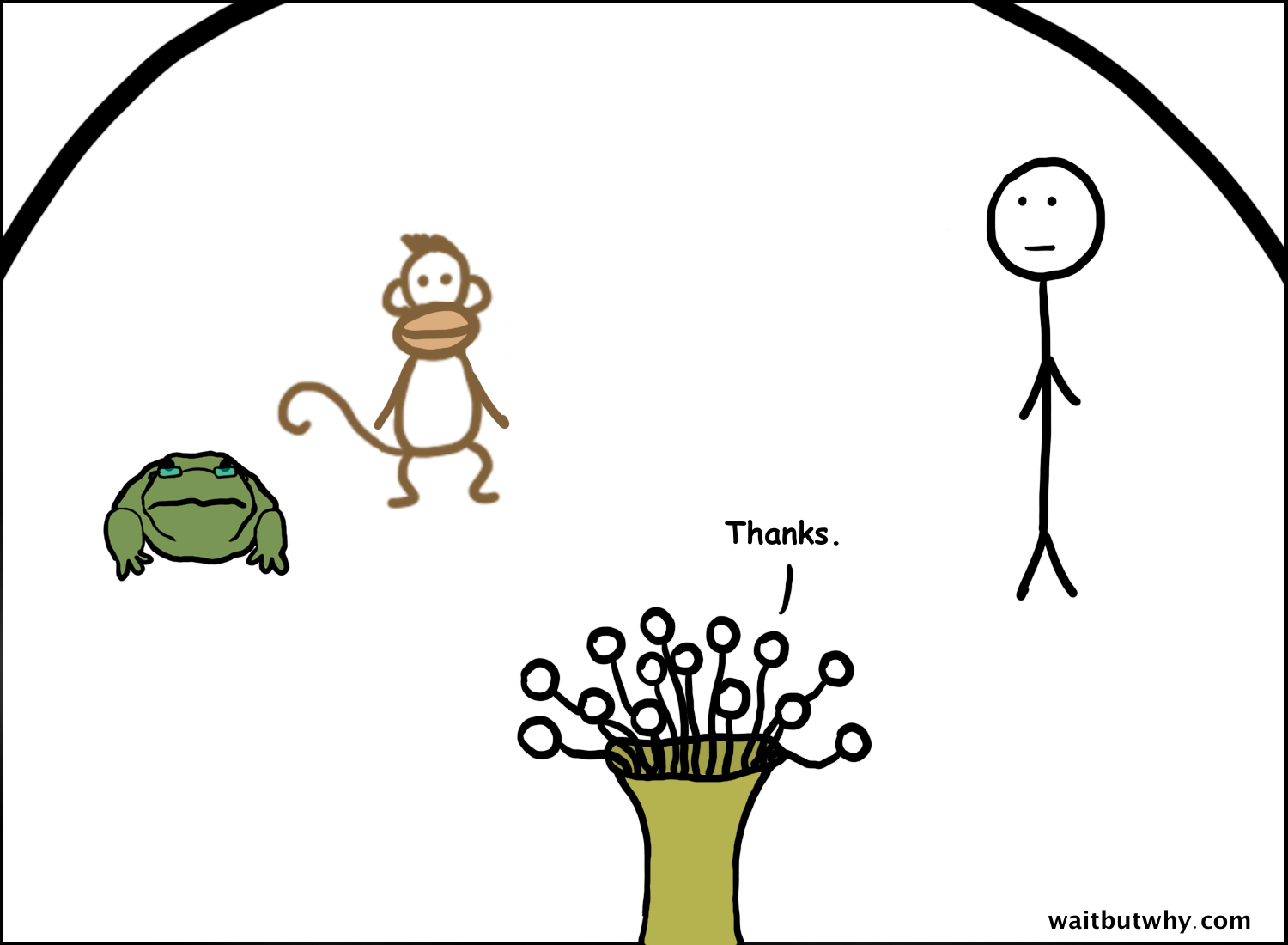

The Reptilian Brain: The Brain Stem (and Cerebellum)

This is the most ancient part of our brain:10

That’s the section of our brain cross section above that the frog boss presides over. In fact, a frog’s entire brain is similar to this lower part of our brain. Here’s a real frog brain:11

When you understand the function of these parts, the fact that they’re ancient makes sense: everything these parts do, frogs and lizards can do. These are the major sections:

The medulla oblongata really just wants you to not die. It does the thankless tasks of controlling involuntary things like your heart rate, breathing, and blood pressure, along with making you vomit when it thinks you’ve been poisoned.

The pons’s thing is that it does a little bit of this and a little bit of that. It deals with swallowing, bladder control, facial expressions, chewing, saliva, tears, and posture—really just whatever it’s in the mood for.

The midbrain is dealing with an even bigger identity crisis than the pons. You know a brain part is going through some shit when almost all its functions are already another brain part’s thing. In the case of the midbrain, it deals with vision, hearing, motor control, alertness, temperature control, and a bunch of other things that other people in the brain already do. The rest of the brain doesn’t seem very into the midbrain either, given that they created a ridiculously uneven “forebrain, midbrain, hindbrain” divide that intentionally isolates the midbrain all by itself while everyone else hangs out.12

One thing I’ll grant the pons and midbrain is that it’s the two of them that control your voluntary eye movement, which is a pretty legit job. So if right now you move your eyes around, that’s you doing something specifically with your pons and midbrain.7

The odd-looking thing that looks like your brain’s scrotum is your cerebellum (Latin for “little brain”), which makes sure you stay a balanced, coordinated, and normal-moving person. Here’s that rad professor again showing you what a real cerebellum looks like.8

The Paleo-Mammalian Brain: The Limbic System

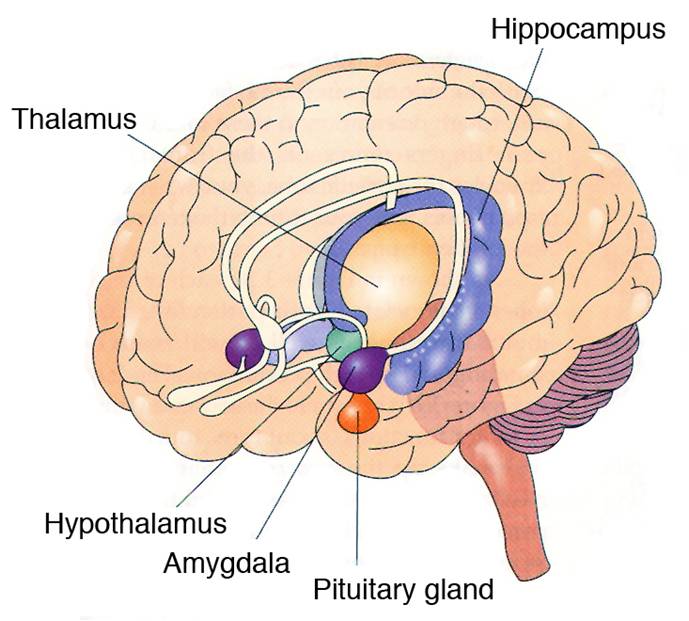

Above the brain stem is the limbic system—the part of the brain that makes humans so insane.13

The limbic system is a survival system. A decent rule of thumb is that whenever you’re doing something that your dog might also do—eating, drinking, having sex, fighting, hiding or running away from something scary—your limbic system is probably behind the wheel. Whether it feels like it or not, when you’re doing any of those things, you’re in primitive survival mode.

The limbic system is also where your emotions live, and in the end, emotions are also all about survival—they’re the more advanced mechanisms of survival, necessary for animals living in a complex social structure.

In other posts, when I refer to your Instant Gratification Monkey, your Social Survival Mammoth, and all your other animals—I’m usually referring to your limbic system. Anytime there’s an internal battle going on in your head, it’s likely that the limbic system’s role is urging you to do the thing you’ll later regret doing.

I’m pretty sure that gaining control over your limbic system is both the definition of maturity and the core human struggle. It’s not that we would be better off without our limbic systems—limbic systems are half of what makes us distinctly human, and most of the fun of life is related to emotions and/or fulfilling your animal needs—it’s just that your limbic system doesn’t get that you live in a civilization, and if you let it run your life too much, it’ll quickly ruin your life.

Anyway, let’s take a closer look at it. There are a lot of little parts of the limbic system, but we’ll keep it to the biggest celebrities:

The amygdala is kind of an emotional wreck of a brain structure. It deals with anxiety, sadness, and our responses to fear. There are two amygdalae, and oddly, the left one has been shown to be more balanced, sometimes producing happy feelings in addition to the usual angsty ones, while the right one is always in a bad mood.

Your hippocampus (Greek for “seahorse” because it looks like one) is like a scratch board for memory. When rats start to memorize directions in a maze, the memory gets encoded in their hippocampus—quite literally. Different parts of the rat’s two hippocampi will fire during different parts of the maze, since each section of the maze is stored in its own section of the hippocampus. But if after learning one maze, the rat is given other tasks and is brought back to the original maze a year later, it will have a hard time remembering it, because the hippocampus scratch board has been mostly wiped of the memory so as to free itself up for new memories.

The condition in the movie Memento is a real thing—anterograde amnesia—and it’s caused by damage to the hippocampus. Alzheimer’s also starts in the hippocampus before working its way through many parts of the brain, which is why, of the slew of devastating effects of the disease, diminished memory happens first.

In its central position in the brain, the thalamus serves as a sensory middleman that receives information from your sensory organs and sends it to your cortex for processing. When you’re sleeping, the thalamus goes to sleep with you, which means the sensory middleman is off duty. That’s why in a deep sleep, some sound or light or touch often will not wake you up. If you want to wake someone up who’s in a deep sleep, you have to be aggressive enough to wake their thalamus up.

The exception is your sense of smell, which is the one sense that bypasses the thalamus. That’s why smelling salts are used to wake up a passed-out person. While we’re here, cool fact: smell is the function of the olfactory bulb and is the most ancient of the senses. Unlike the other senses, smell is located deep in the limbic system, where it works closely with the hippocampus and amygdala—which is why smell is so closely tied to memory and emotion.

The Neo-Mammalian Brain: The Cortex

Finally, we arrive at the cortex. The cerebral cortex. The neocortex. The cerebrum. The pallium.

The most important part of the whole brain can’t figure out what its name is. Here’s what’s happening:

The What the Hell is it Actually Called Blue Box

The cerebrum is the whole big top/outside part of the brain but it also technically includes some of the internal parts too.

Cortex means “bark” in Latin and is the word used for the outer layer of many organs, not just the brain. The outside of the cerebellum is the cerebellar cortex. And the outside of the cerebrum is the cerebral cortex. Only mammals have cerebral cortices. The equivalent part of the brain in reptiles is called the pallium.

The neocortex is often used interchangeably with “cerebral cortex,” but it’s technically the outer layers of the cerebral cortex that are especially developed in more advanced mammals. The other parts are called the allocortex.

In the rest of this post, we’ll be mostly referring to the neocortex but we’ll just call it the cortex, since that’s the least annoying way to do it for everyone.

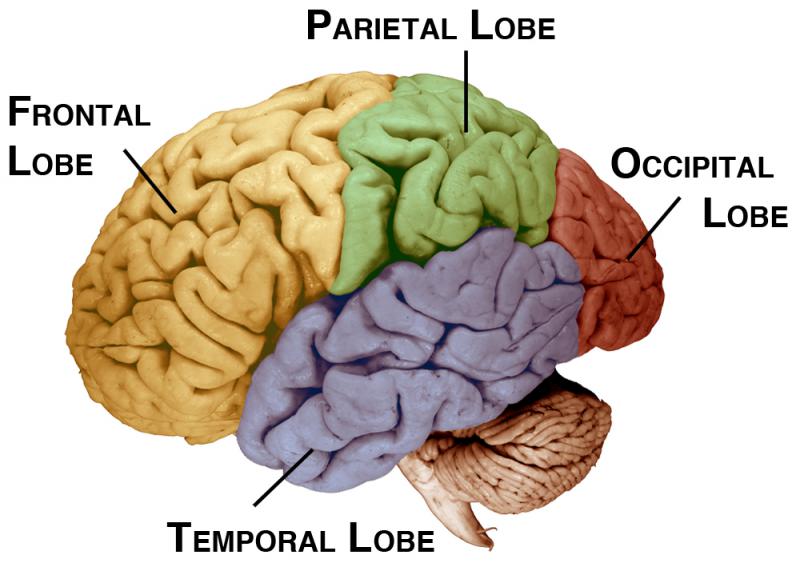

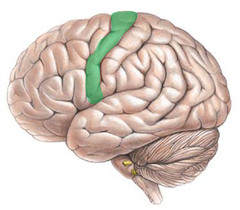

The cortex is in charge of basically everything—processing what you see, hear, and feel, along with language, movement, thinking, planning, and personality.

It’s divided into four lobes:14

It’s pretty unsatisfying to describe what they each do, because they each do so many things and there’s a lot of overlap, but to oversimplify:

The frontal lobe handles your personality, along with a lot of what we think of as “thinking”—reasoning, planning, and executive function. In particular, a lot of your thinking takes place in the front part of the frontal lobe, called the prefrontal cortex—the adult in your head. The prefrontal cortex is the other character in those internal battles that go on in your life. The rational decision-maker trying to get you to do your work. The authentic voice trying to get you to stop worrying so much what others think and just be yourself. The higher being who wishes you’d stop sweating the small stuff.

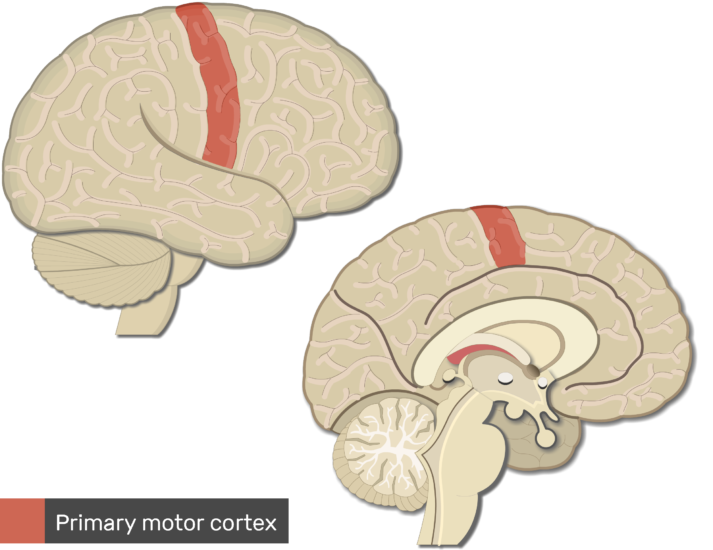

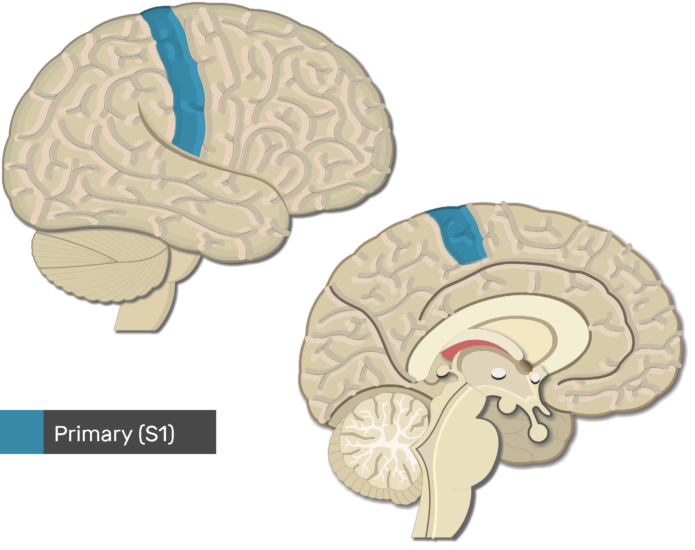

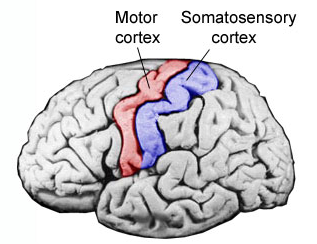

As if that’s not enough to worry about, the frontal lobe is also in charge of your body’s movement. The top strip of the frontal lobe is your primary motor cortex.15

Then there’s the parietal lobe which, among other things, controls your sense of touch, particularly in the primary somatosensory cortex, the strip right next to the primary motor cortex.16

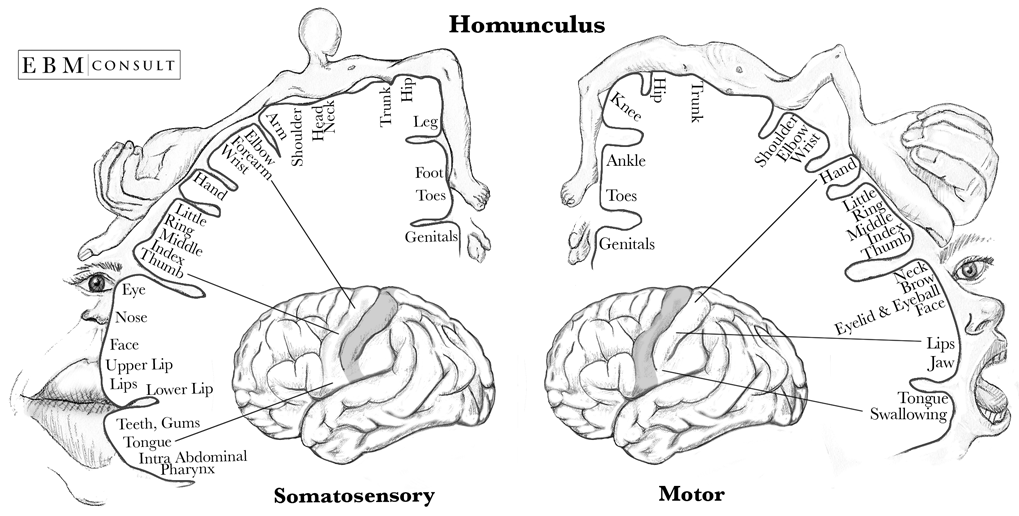

The motor and somatosensory cortices are fun because they’re well-mapped. Neuroscientists know exactly which part of each strip connects to each part of your body. Which leads us to the creepiest diagram of this post: the homunculus.

The homunculus, created by pioneer neurosurgeon Wilder Penfield, visually displays how the motor and somatosensory cortices are mapped. The larger the body part in the diagram, the more of the cortex is dedicated to its movement or sense of touch. A couple interesting things about this:

First, it’s amazing that more of your brain is dedicated to the movement and feeling of your face and hands than to the rest of your body combined. This makes sense though—you need to make incredibly nuanced facial expressions and your hands need to be unbelievably dexterous, while the rest of your body—your shoulder, your knee, your back—can move and feel things much more crudely. This is why people can play the piano with their fingers but not with their toes.

Second, it’s interesting how the two cortices are basically dedicated to the same body parts, in the same proportions. I never really thought about the fact that the same parts of your body you need to have a lot of movement control over tend to also be the most sensitive to touch.

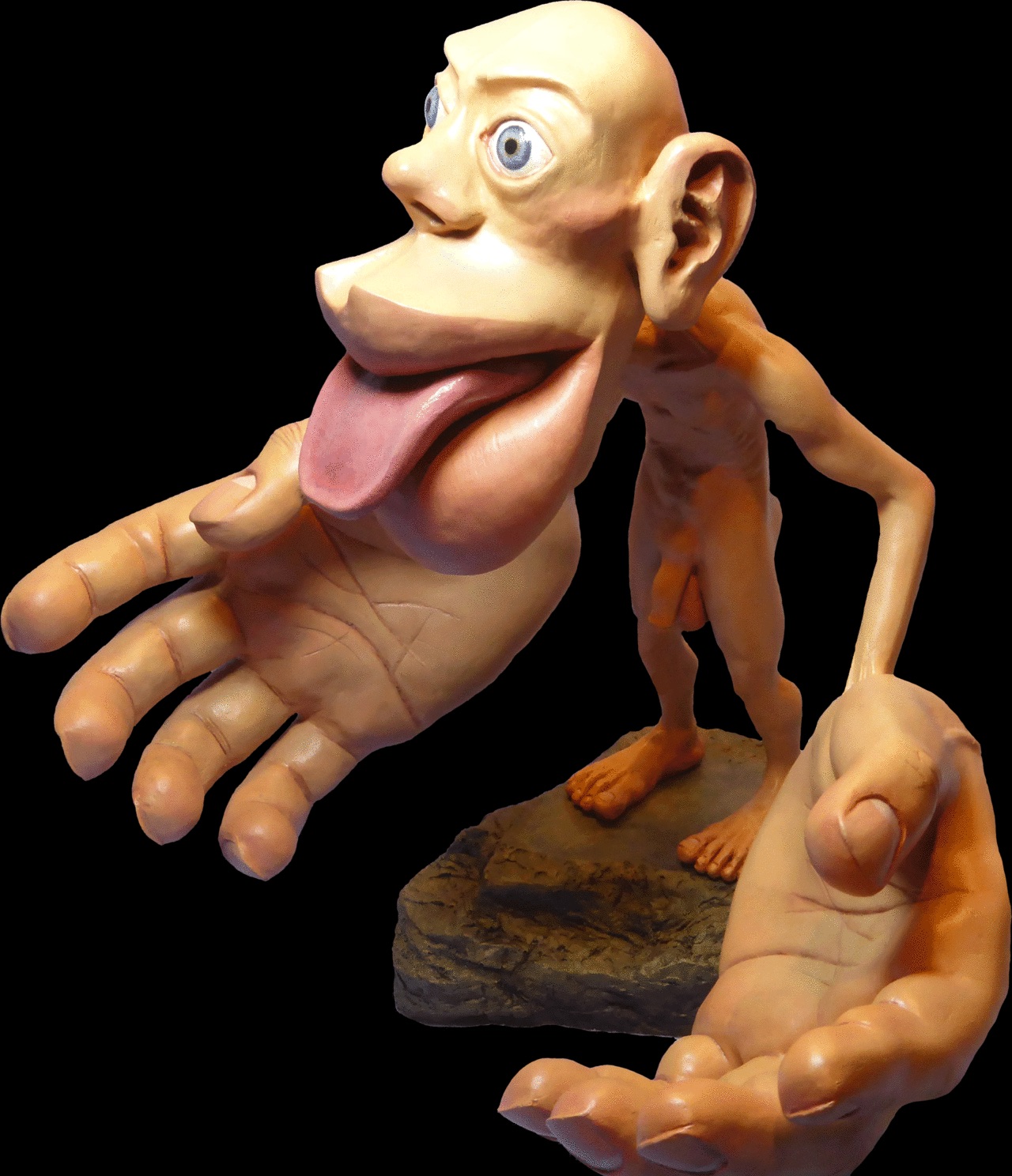

Finally, I came across this shit and I’ve been living with it ever since—so now you have to too. A 3-dimensional homunculus man.17

Moving on—

The temporal lobe is where a lot of your memory lives, and being right next to your ears, it’s also the home of your auditory cortex.

Last, at the back of your head is the occipital lobe, which houses your visual cortex and is almost entirely dedicated to vision.

Now for a long time, I thought these major lobes were chunks of the brain—like, segments of the whole 3D structure. But actually, the cortex is just the outer two millimeters of the brain—the thickness of a nickel—and the meat of the space underneath is mostly just wiring.

The Why Brains Are So Wrinkly Blue Box

As we’ve discussed, the evolution of our brain happened by building outwards, adding newer, fancier features on top of the existing model. But building outwards has its limits, because the need for humans to emerge into the world through someone’s vagina puts a cap on how big our heads could be.9

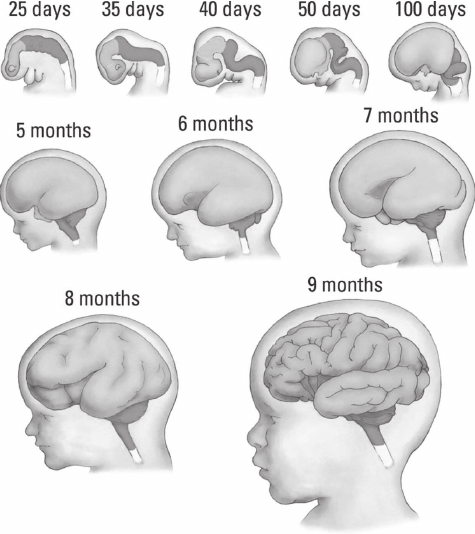

So evolution got innovative. Because the cortex is so thin, it scales by increasing its surface area. That means that by creating lots of folds (including both sides folding down into the gap between the two hemispheres), you can more than triple the area of the brain’s surface without increasing the volume too much. When the brain first develops in the womb, the cortex is smooth—the folds form mostly in the last two months of pregnancy:18

Cool explainer of how the folds form here.

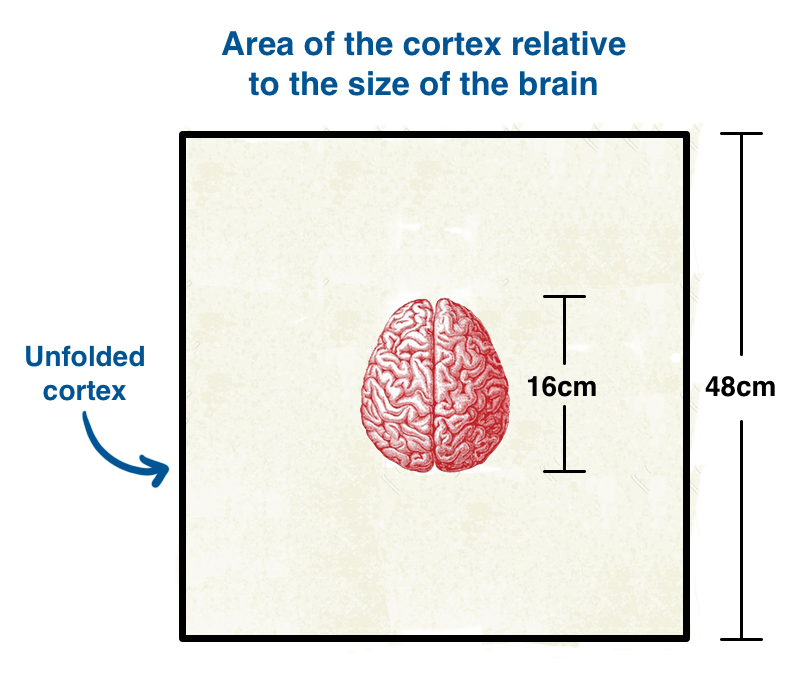

If you could take the cortex off the brain, you’d end up with a 2mm-thick sheet with an area of 2,000-2,400 cm²—about the size of a 48cm x 48cm (19in x 19in) square.10 A dinner napkin.

This napkin is where most of the action in your brain happens—it’s why you can think, move, feel, see, hear, remember, and speak and understand language. Best napkin ever.

And remember before when I said that you were a jello ball? Well the you you think of when you think of yourself—it’s really mainly your cortex. Which means you’re actually a napkin.
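If you'd like to sanity-check the napkin math, here's a tiny Python sketch. It only takes the area range quoted above and asks how long the side of a square with that area would be. The function name `square_side_cm` and the inch conversion are mine, added purely for illustration.

```python
import math

CM_PER_INCH = 2.54  # standard conversion factor


def square_side_cm(area_cm2):
    """Side length (cm) of a square with the given area (cm^2)."""
    return math.sqrt(area_cm2)


# Area range for the unfolded cortex, as quoted in the post.
for area in (2000, 2400):
    side = square_side_cm(area)
    print(f"{area} cm^2 -> {side:.1f} cm ({side / CM_PER_INCH:.1f} in) per side")
```

The two sides come out to roughly 45cm and 49cm, so a 48cm x 48cm napkin sits comfortably inside the quoted range.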

The magic of the folds in increasing the napkin’s size is clear when we put another brain on top of our stripped-off cortex:

So while it’s not perfect, modern science has a decent understanding of the big picture when it comes to the brain. We also have a decent understanding of the little picture. Let’s check it out:

The brain, zoomed in

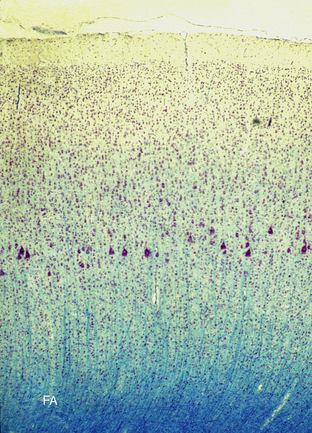

Even though we figured out that the brain was the seat of our intelligence a long time ago, it wasn’t until pretty recently that science understood what the brain was made of. Scientists knew that the body was made of cells, but in the late 19th century, Italian physician Camillo Golgi figured out how to use a staining method to see what brain cells actually looked like. The result was surprising:

That wasn’t what a cell was supposed to look like. Without quite realizing it yet,11 Golgi had discovered the neuron.

Scientists realized that the neuron was the core unit in the vast communication network that makes up the brains and nervous systems of nearly all animals.

But it wasn’t until the 1950s that scientists worked out how neurons communicate with each other.

An axon, the long strand of a neuron that carries information, is normally microscopic in diameter—too small for scientists to test on until recently. But in the 1930s, English zoologist J. Z. Young discovered that the squid, randomly, could change everything for our understanding, because squids have an unusually huge axon in their bodies that could be experimented on. A couple decades later, using the squid’s giant axon, scientists Alan Hodgkin and Andrew Huxley definitively figured out how neurons send information: the action potential. Here’s how it works.

So there are a lot of different kinds of neurons—19

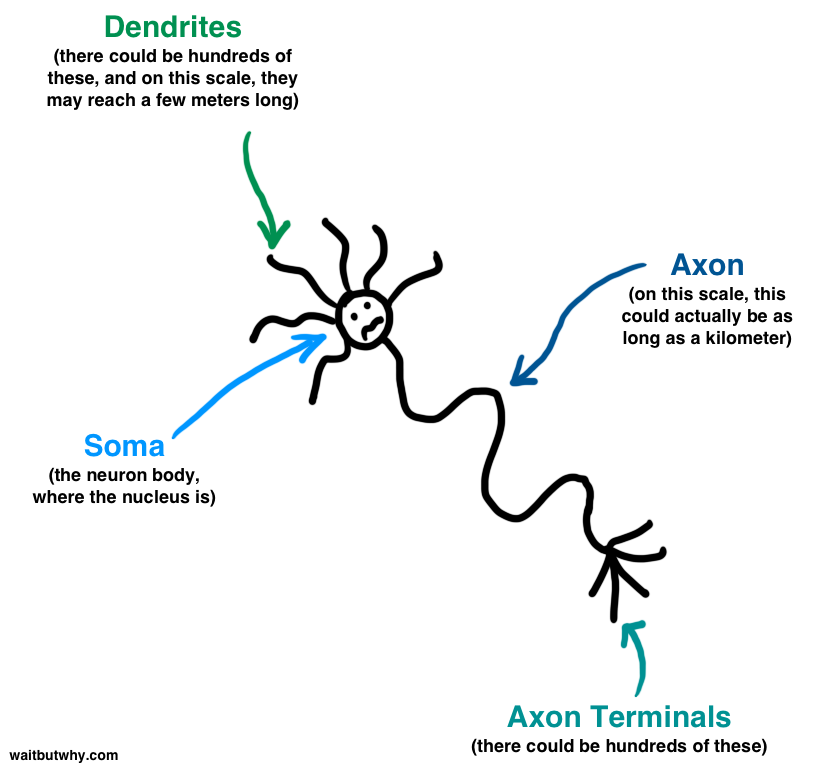

—but for simplicity, we’ll discuss the cliché textbook neuron—a pyramidal cell, like one you might find in your motor cortex. To make a neuron diagram, we can start with a guy:

And then if we just give him a few extra legs, some hair, take his arms off, and stretch him out—we have a neuron.

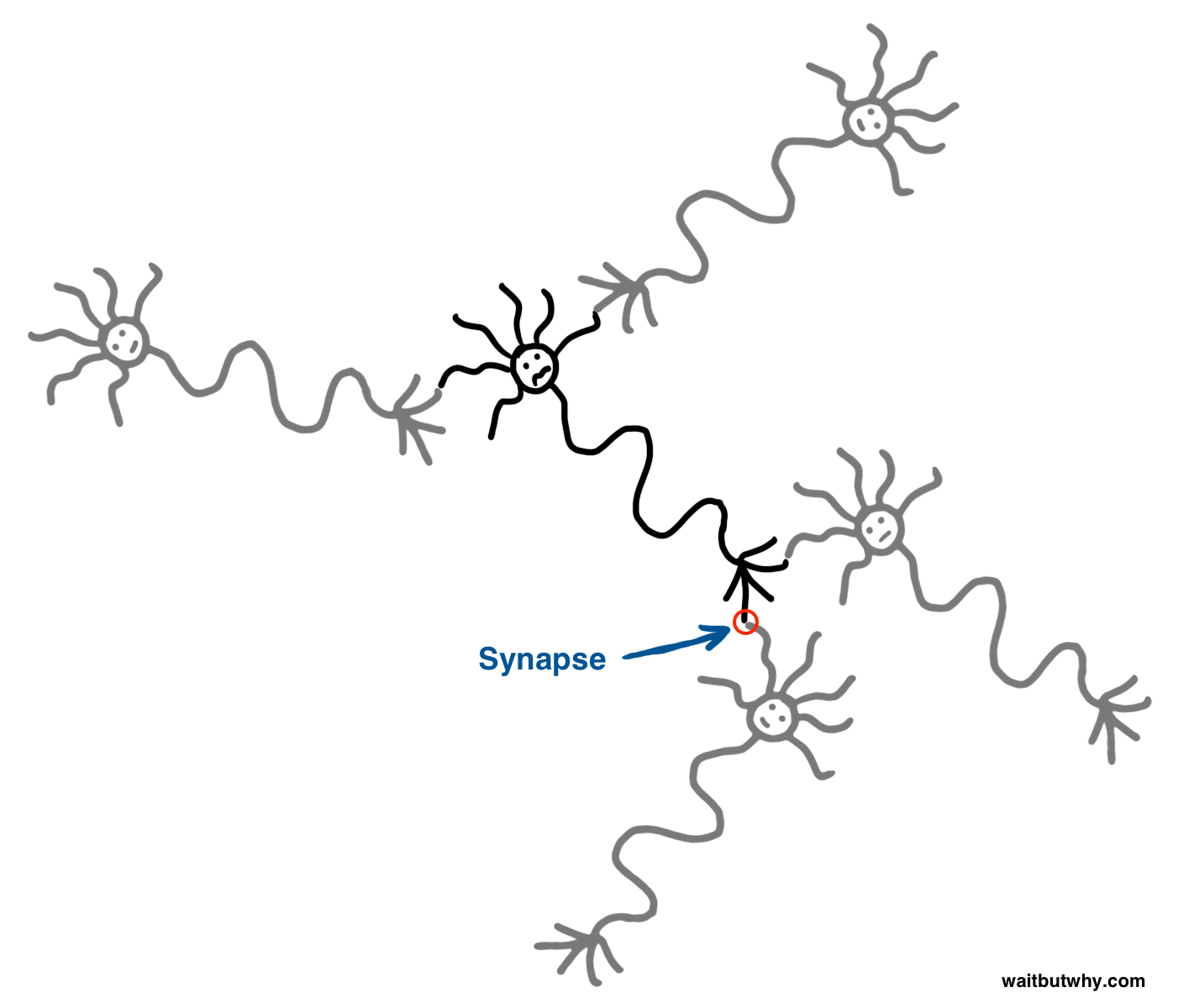

And let’s add in a few more neurons.

Rather than launch into the full, detailed explanation for how action potentials work—which involves a lot of unnecessary and uninteresting technical information you already dealt with in 9th-grade biology—I’ll link to this great Khan Academy explainer article for those who want the full story. We’ll go through the very basic ideas that are relevant for our purposes.

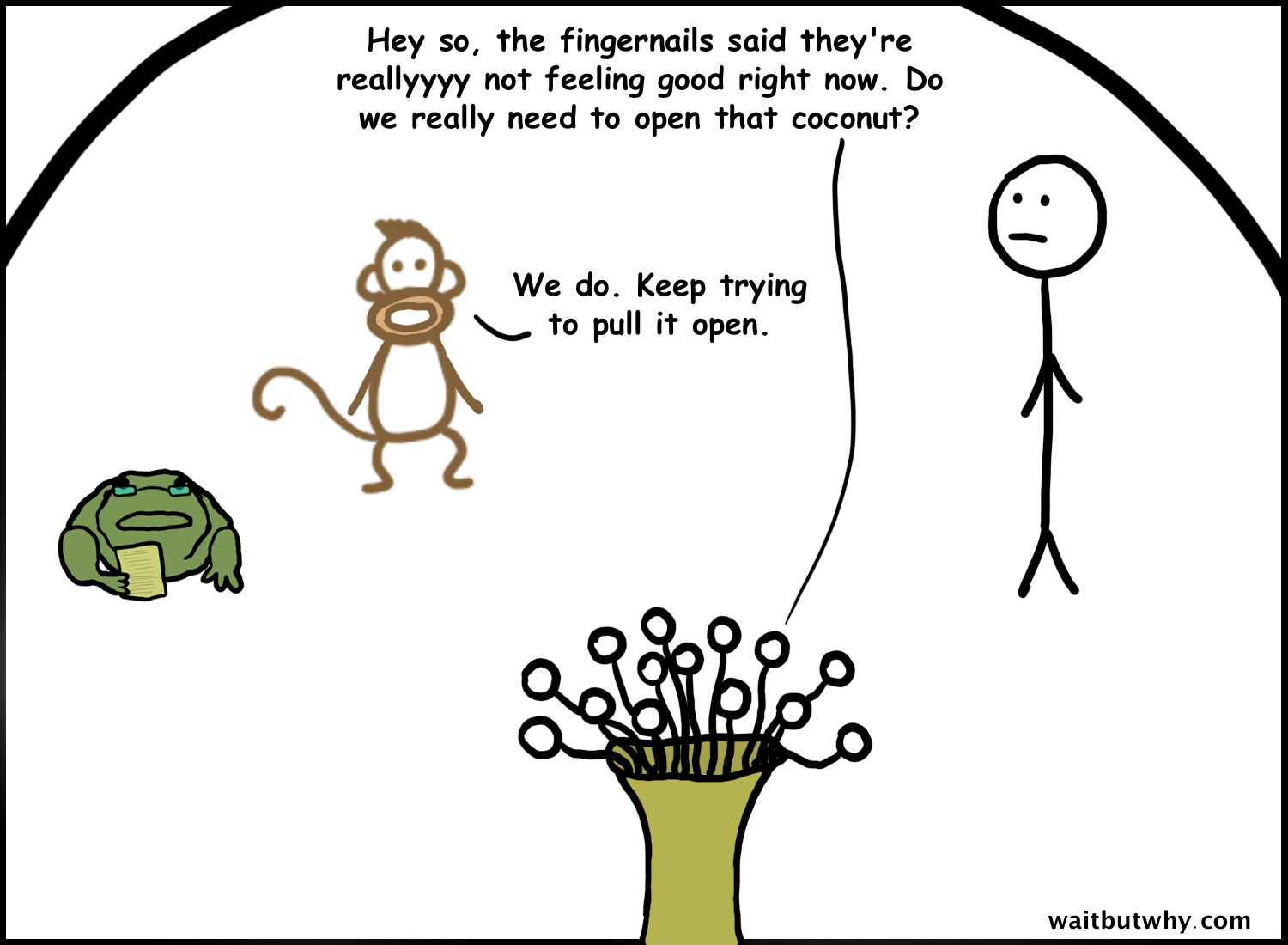

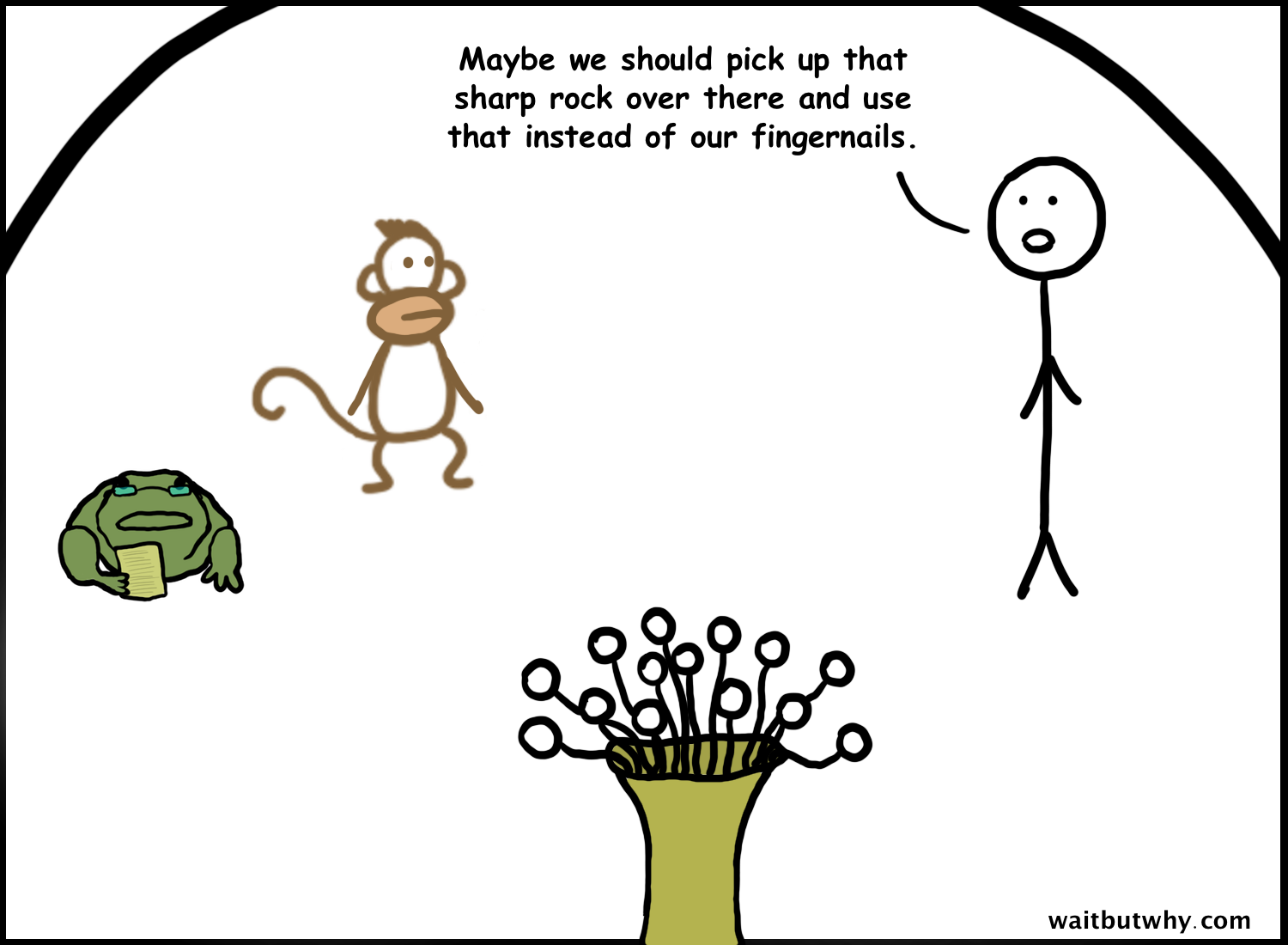

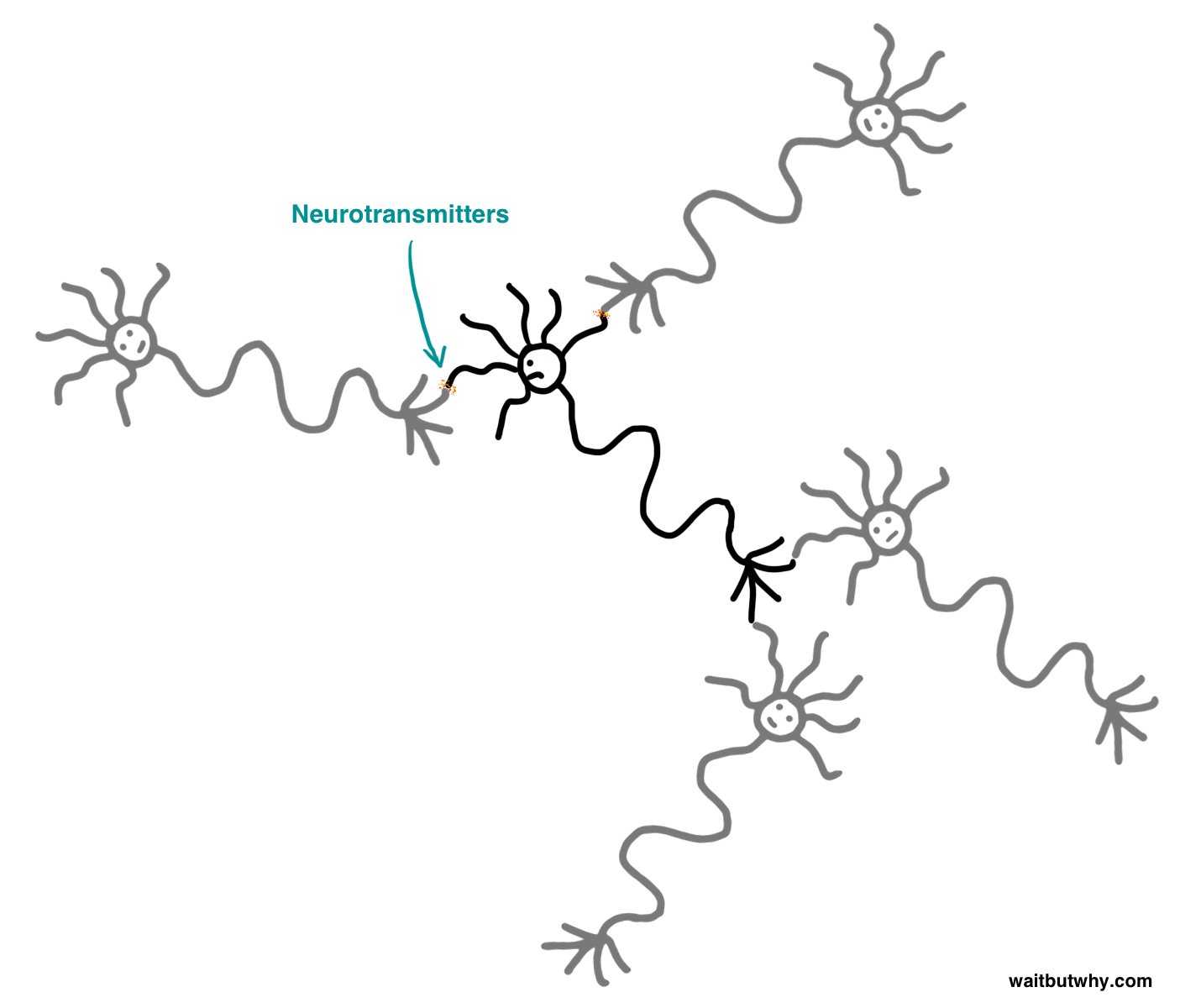

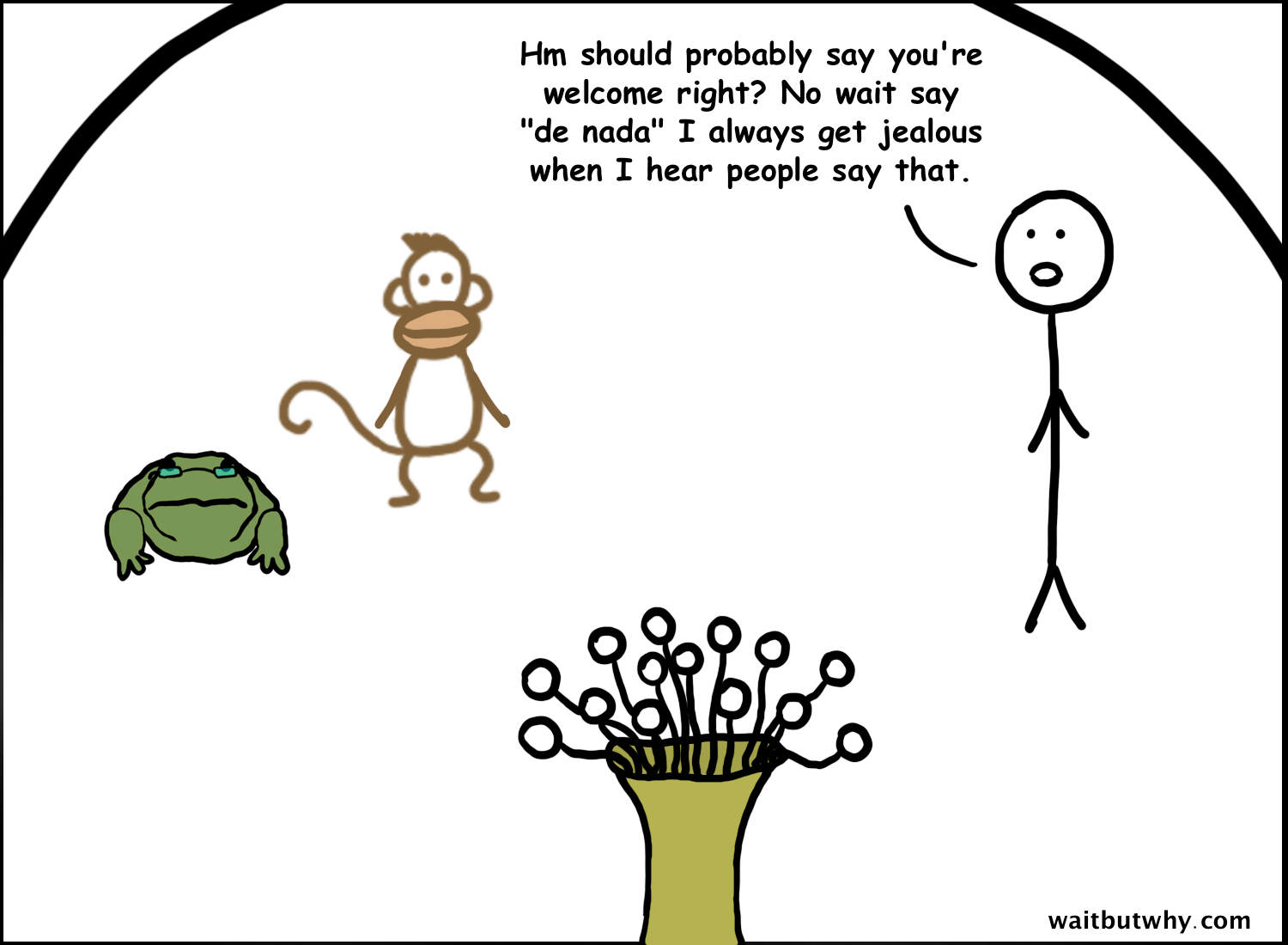

So our guy’s body stem—the neuron’s axon—has a negative “resting potential,” which means that when it’s at rest, its electrical charge is slightly negative. At all times, a bunch of people’s feet keep touching12 our guy’s hair—the neuron’s dendrites—whether he likes it or not. Their feet drop chemicals called neurotransmitters13 onto his hair—which pass through his head (the cell body, or soma) and, depending on the chemical, raise or lower the charge in his body a little bit. It’s a little unpleasant for our neuron guy, but not a huge deal—and nothing else happens.

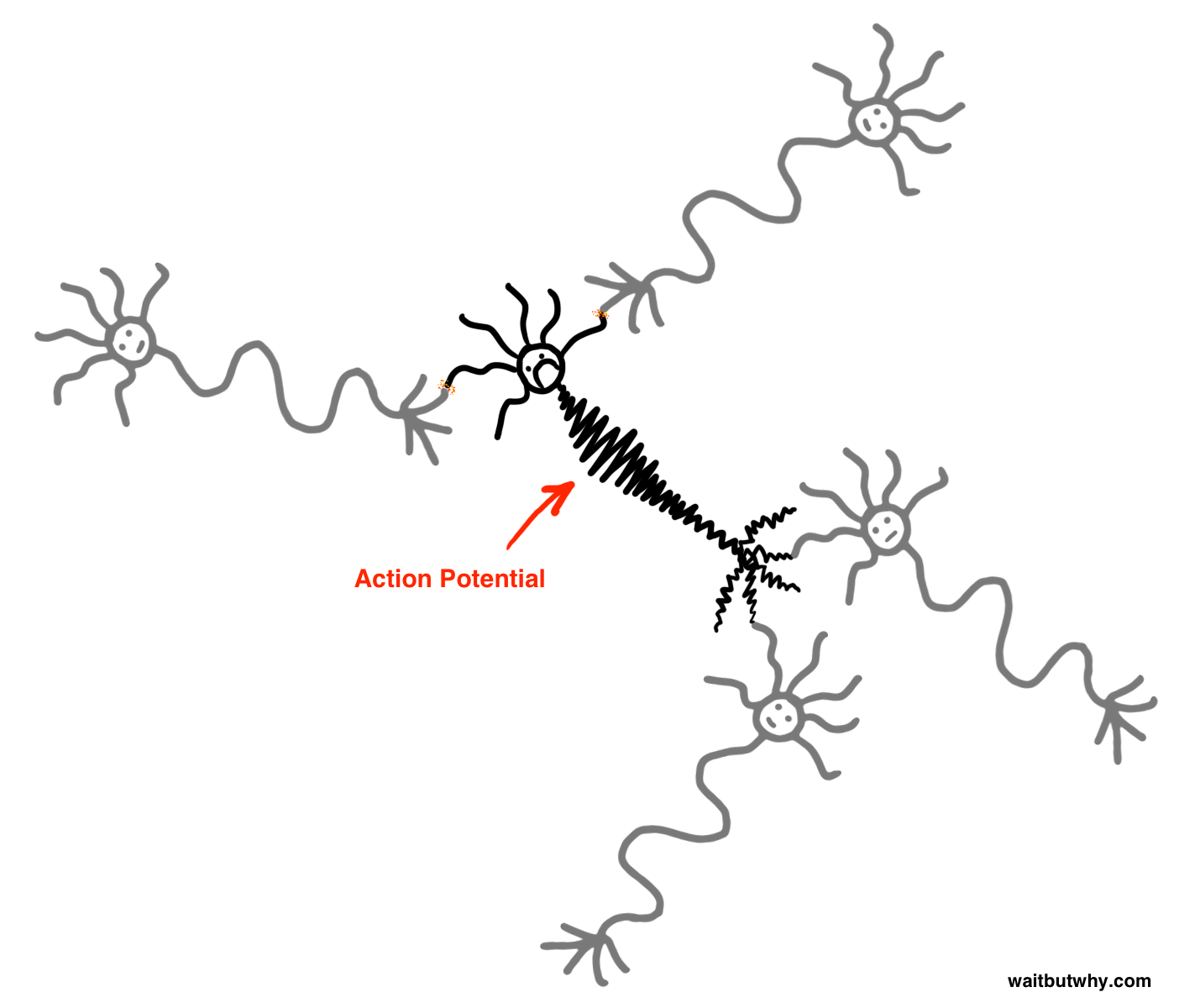

But if enough chemicals touch his hair to raise his charge over a certain point—the neuron’s “threshold potential”—then it triggers an action potential, and our guy is electrocuted.

This is a binary situation—either nothing happens to our guy, or he’s fully electrocuted. He can’t be kind of electrocuted, or extra electrocuted—he’s either not electrocuted at all, or he’s fully electrocuted to the exact same degree every time.
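To make the all-or-nothing idea concrete, here's a minimal Python sketch. It's a toy, not a real neuron model: it just adds up the nudges from incoming neurotransmitters and checks the total against a threshold, ignoring timing and everything else. The millivolt values (a resting potential of -70, a threshold of -55, a spike peak of +40) are typical textbook figures I'm assuming for illustration; they aren't from this post, and the function name `respond` is made up.

```python
RESTING_MV = -70.0     # assumed resting potential (textbook-style value)
THRESHOLD_MV = -55.0   # assumed threshold potential (textbook-style value)
SPIKE_PEAK_MV = 40.0   # assumed peak of the action potential


def respond(inputs_mv):
    """Return (fired, peak_mv) for a list of small charge nudges in mV.

    Positive numbers raise the charge, negative numbers lower it.
    """
    potential = RESTING_MV + sum(inputs_mv)
    if potential >= THRESHOLD_MV:
        # Over the threshold: a full spike, same size every time.
        return True, SPIKE_PEAK_MV
    # Under the threshold: a little unpleasant, but nothing else happens.
    return False, potential


weak = respond([3, 4, -2])       # not enough to cross the threshold
strong = respond([6, 6, 6])      # just enough
huge = respond([20, 20, 20])     # way more than enough

print(weak, strong, huge)
```

The thing to notice is that `strong` and `huge` report the same peak. Piling on more input past the threshold doesn't make our guy extra electrocuted.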

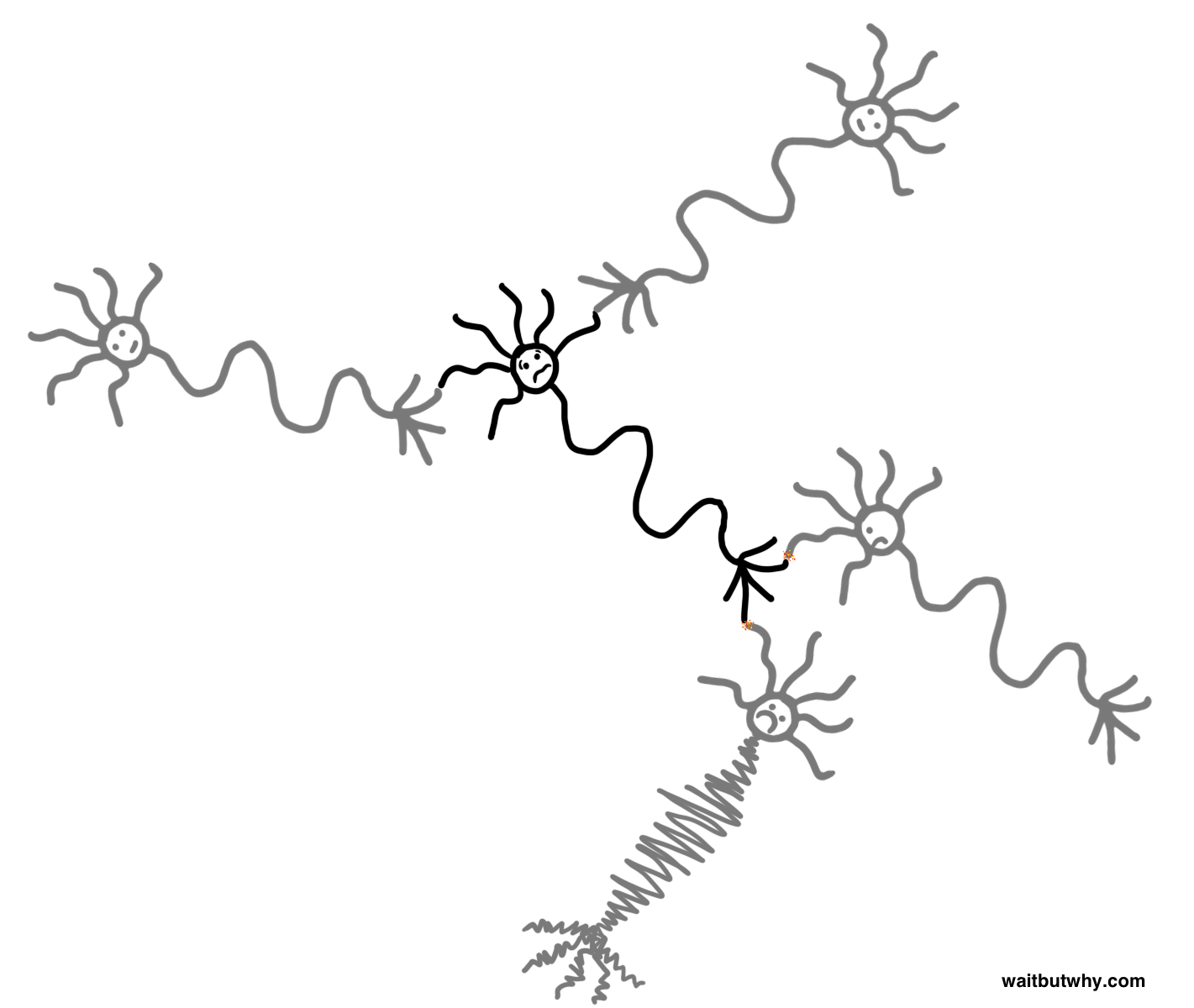

When this happens, a pulse of electricity (in the form of a brief reversal of his body’s normal charge from negative to positive and then rapidly back down to his normal negative) zips down his body (the axon) and into his feet—the neuron’s axon terminals—which themselves touch a bunch of other people’s hair (the points of contact are called synapses). When the action potential reaches his feet, it causes them to release chemicals onto the people’s hair they’re touching, which may or may not cause those people to be electrocuted, just like he was.

This is usually how info moves through the nervous system—chemical information sent in the tiny gap between neurons triggers electrical information to pass through the neuron—but sometimes, in situations when the body needs to move a signal extra quickly, neuron-to-neuron connections can themselves be electric.

Action potentials move at between 1 and 100 meters/second. Part of the reason for this large range is that another type of cell in the nervous system—a Schwann cell—acts like a super nurturing grandmother and constantly wraps some types of axons in layers of fat blankets called myelin sheath. Like this:20

On top of its protection and insulation benefits, the myelin sheath is a major factor in the pace of communication—action potentials travel much faster through axons when they’re covered in myelin sheath:14, 21

One nice example of the speed difference created by myelin: You know how when you stub your toe, your body gives you that one second of reflection time to think about what you just did and what you’re about to feel, before the pain actually kicks in? What’s happening is you feel both the sensation of your toe hitting against something and the sharp part of the pain right away, because sharp pain information is sent to the brain via types of axons that are myelinated. It takes a second or two for the dull pain to kick in because dull pain is sent via unmyelinated “C fibers”—at only around one meter/second.
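Here's a rough back-of-the-envelope version of the toe example in Python. The one meter/second speed for dull pain comes from above. The 1.5 meter toe-to-brain distance and the 20 meters/second speed for the myelinated fibers are assumptions I picked for illustration (the latter just sits inside the 1 to 100 range mentioned earlier), and `travel_time_s` is a made-up helper name.

```python
TOE_TO_BRAIN_M = 1.5          # assumed path length for an adult, in meters
DULL_PAIN_SPEED_M_S = 1.0     # unmyelinated C fibers, from the post
SHARP_PAIN_SPEED_M_S = 20.0   # assumed speed for myelinated fibers


def travel_time_s(distance_m, speed_m_per_s):
    """Time in seconds for a signal to cover the distance at a constant speed."""
    return distance_m / speed_m_per_s


sharp = travel_time_s(TOE_TO_BRAIN_M, SHARP_PAIN_SPEED_M_S)
dull = travel_time_s(TOE_TO_BRAIN_M, DULL_PAIN_SPEED_M_S)

print(f"sharp pain arrives after about {sharp:.3f} s")
print(f"dull pain arrives after about {dull:.3f} s")
print(f"reflection time in between: about {dull - sharp:.3f} s")
```

With those assumed numbers, the sharp signal arrives in under a tenth of a second while the dull pain takes about a second and a half, which lines up with that awful little pause.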

Neural Networks

Neurons are similar to computer transistors in one way—they also transmit information in the binary language of 1’s (action potential firing) and 0’s (no action potential firing). But unlike computer transistors, the brain’s neurons are constantly changing.

You know how sometimes you learn a new skill and you get pretty good at it, and then the next day you try again and you suck again? That’s because what made you get good at the skill the day before was adjustments to the amount or concentration of the chemicals in the signaling between neurons. Repetition caused chemicals to adjust, which helped you improve, but the next day the chemicals were back to normal so the improvement went away.

But then if you keep practicing, you eventually get good at something in a lasting way. What’s happened is you’ve told the brain, “this isn’t just something I need in a one-off way,” and the brain’s neural network has responded by making structural changes to itself that last. Neurons have shifted shape and location and strengthened or weakened various connections in a way that has built a hard-wired set of pathways that know how to do that skill.

Neurons’ ability to alter themselves chemically, structurally, and even functionally allows your brain’s neural network to optimize itself to the external world—a phenomenon called neuroplasticity. Babies’ brains are the most neuroplastic of all. When a baby is born, its brain has no idea if it needs to accommodate the life of a medieval warrior who will need to become incredibly adept at sword-fighting, a 17th-century musician who will need to develop fine-tuned muscle memory for playing the harpsichord, or a modern-day intellectual who will need to store and organize a tremendous amount of information and master a complex social fabric—but the baby’s brain is ready to shape itself to handle whatever life has in store for it.

Babies are the neuroplasticity superstars, but neuroplasticity remains throughout our whole lives, which is why humans can grow and change and learn new things. And it’s why we can form new habits and break old ones—your habits are reflective of the existing circuitry in your brain. If you want to change your habits, you need to exert a lot of willpower to override your brain’s neural pathways, but if you can keep it going long enough, your brain will eventually get the hint and alter those pathways, and the new behavior will stop requiring willpower. Your brain will have physically built the changes into a new habit.

Altogether, there are around 100 billion neurons in the brain that make up this unthinkably vast network—similar to the number of stars in the Milky Way and over 10 times the number of people in the world. Around 15 – 20 billion of those neurons are in the cortex, and the rest are in the animal parts of your brain (surprisingly, the random cerebellum has more than three times as many neurons as the cortex).

Let’s zoom back out and look at another cross section of the brain—this time cut not from front to back to show a single hemisphere, but from side to side:22

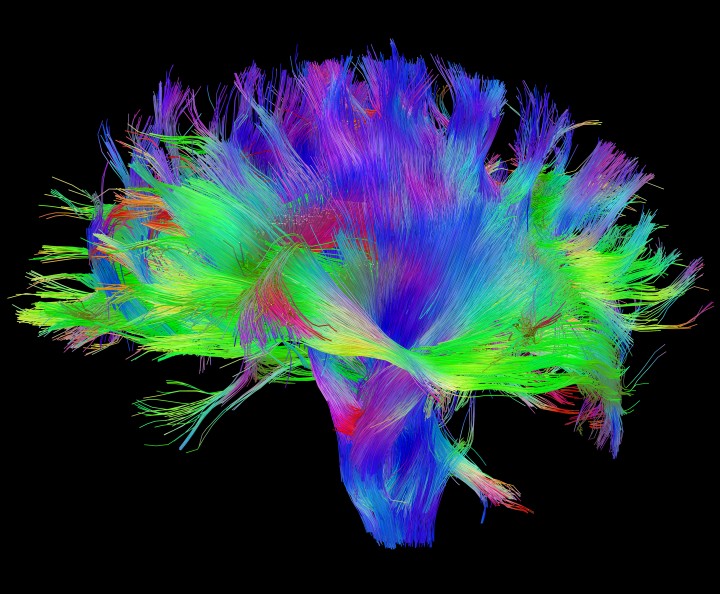

Brain material can be divided into what’s called gray matter and white matter. Gray matter actually looks darker in color and is made up of the cell bodies (somas) of the brain’s neurons and their thicket of dendrites and axons—along with a lot of other stuff. White matter is made up primarily of wiring—axons carrying information from somas to other somas or to destinations in the body. White matter is white because those axons are usually wrapped in myelin sheath, which is fatty white tissue.

There are two main regions of gray matter in the brain—the internal cluster of limbic system and brain stem parts we discussed above, and the nickel-thick layer of cortex around the outside. The big chunk of white matter in between is made up mostly of the axons of cortical neurons. The cortex is like a great command center, and it beams many of its orders out through the mass of axons making up the white matter beneath it.

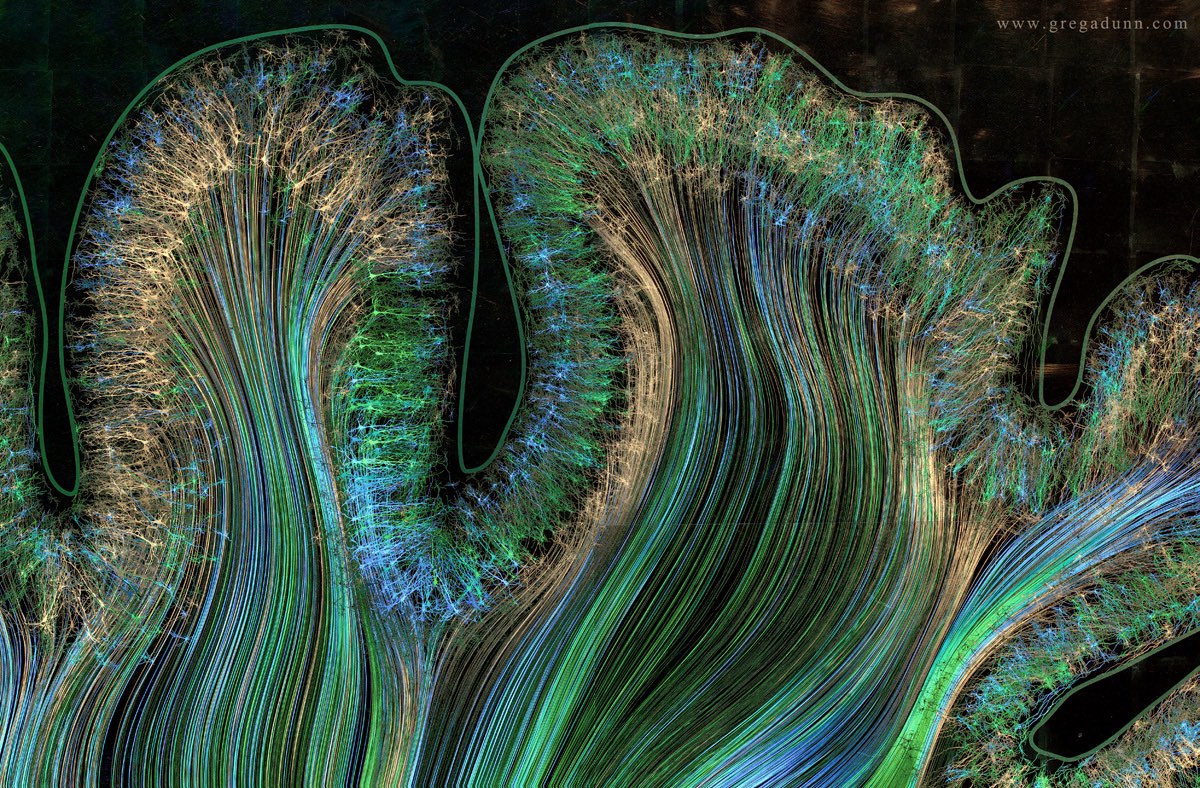

The coolest illustration of this concept that I’ve come across15 is a beautiful set of artistic representations done by Dr. Greg A. Dunn and Dr. Brian Edwards. Check out the distinct difference between the structure of the outer layer of gray matter cortex and the white matter underneath it:

Those cortical axons might be taking information to another part of the cortex, to the lower part of the brain, or through the spinal cord—the nervous system’s superhighway—and into the rest of the body.16

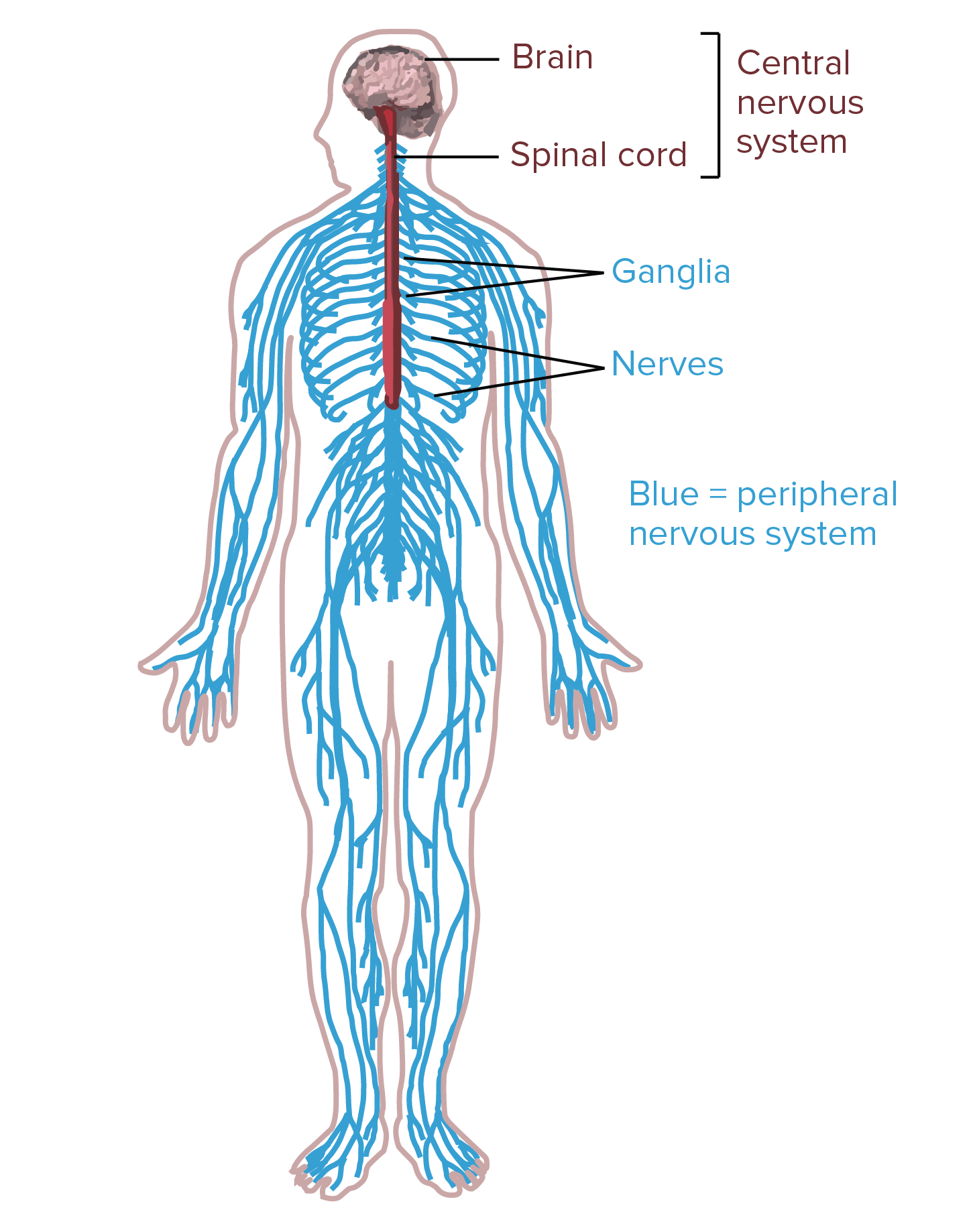

Let’s look at the whole nervous system:23

The nervous system is divided into two parts: the central nervous system—your brain and spinal cord—and the peripheral nervous system—made up of the neurons that radiate outwards from the spinal cord into the rest of the body.

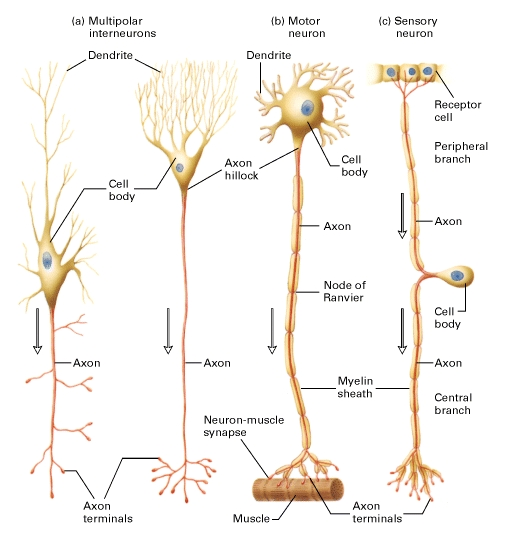

Most types of neurons are interneurons—neurons that communicate with other neurons. When you think, it’s a bunch of interneurons talking to each other. Interneurons are mostly confined to the brain.

The two other kinds of neurons are sensory neurons and motor neurons—those are the neurons that head down into your spinal cord and make up the peripheral nervous system. These neurons can be up to a meter long.17 Here’s a typical structure of each type:24

Remember our two strips?25

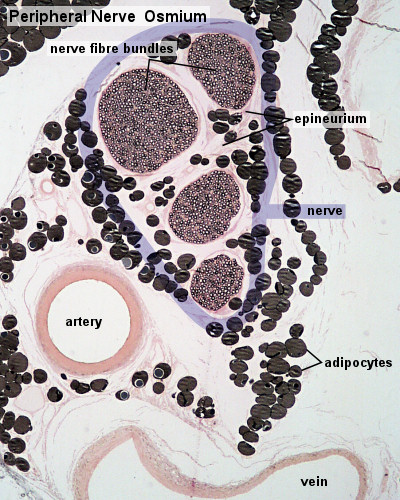

These strips are where your peripheral nervous system originates. The axons of sensory neurons head down from the somatosensory cortex, through the brain’s white matter, and into the spinal cord (which is just a massive bundle of axons). From the spinal cord, they head out to all parts of your body. Each part of your skin is lined with nerves that originate in the somatosensory cortex. A nerve, by the way, is a few bundles of axons wrapped together in a little cord. Here’s a nerve up close:26

The nerve is the whole thing circled in purple, and those four big circles inside are bundles of many axons (here’s a helpful cartoony drawing).

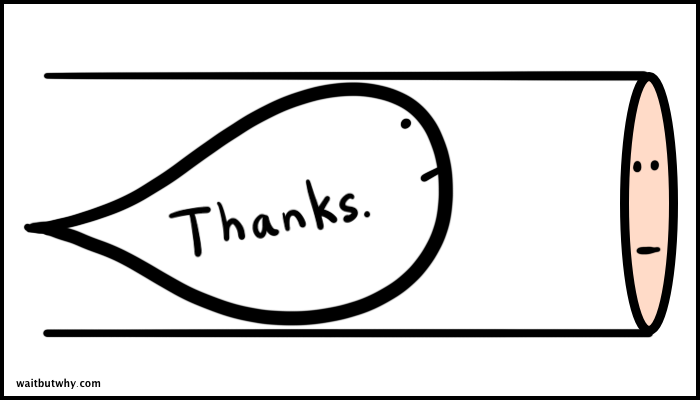

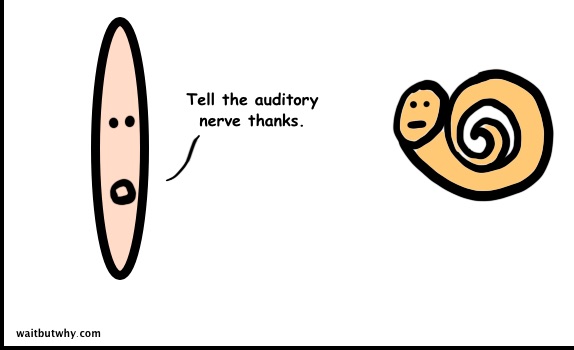

So if a fly lands on your arm, here’s what happens:

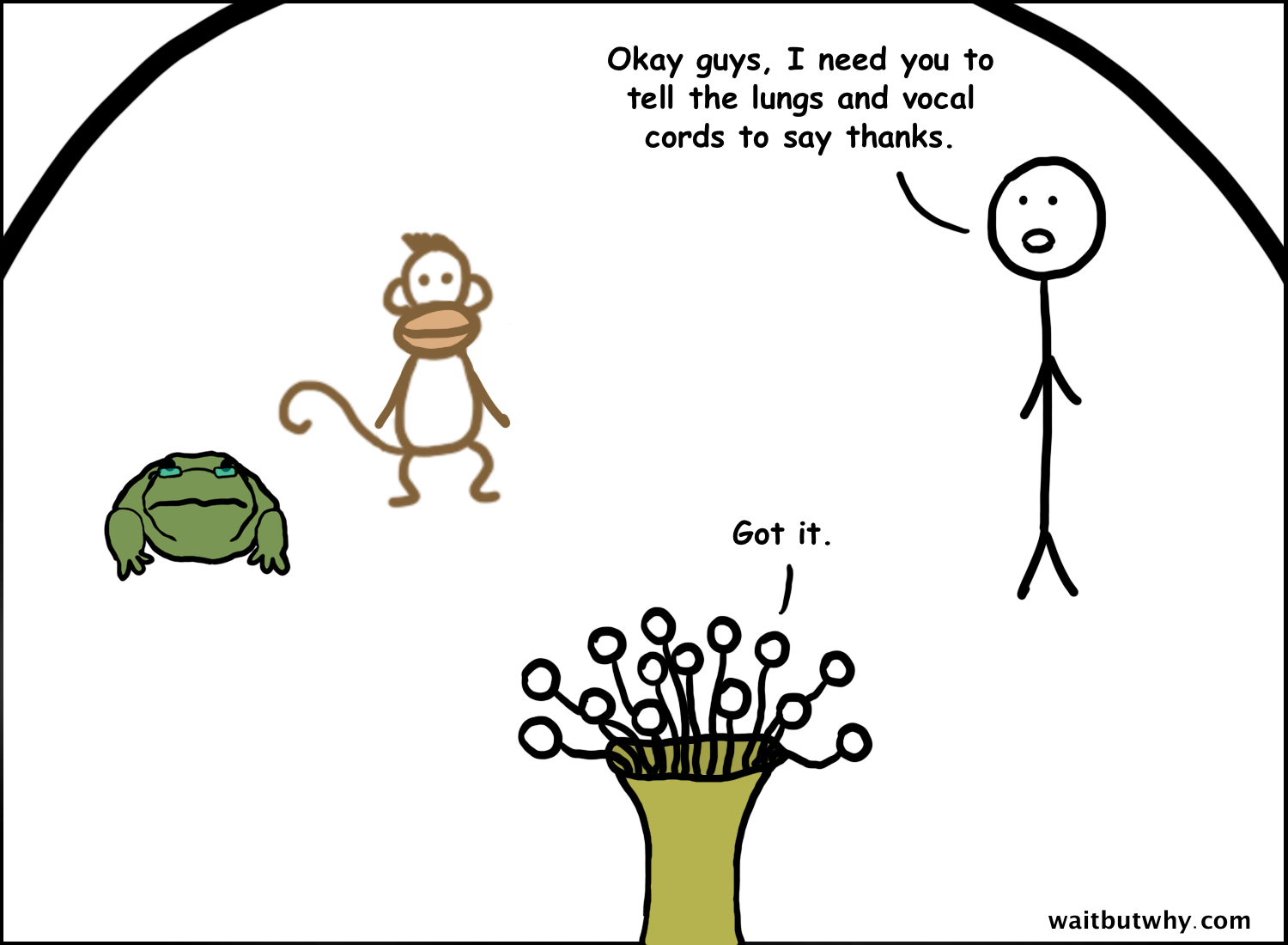

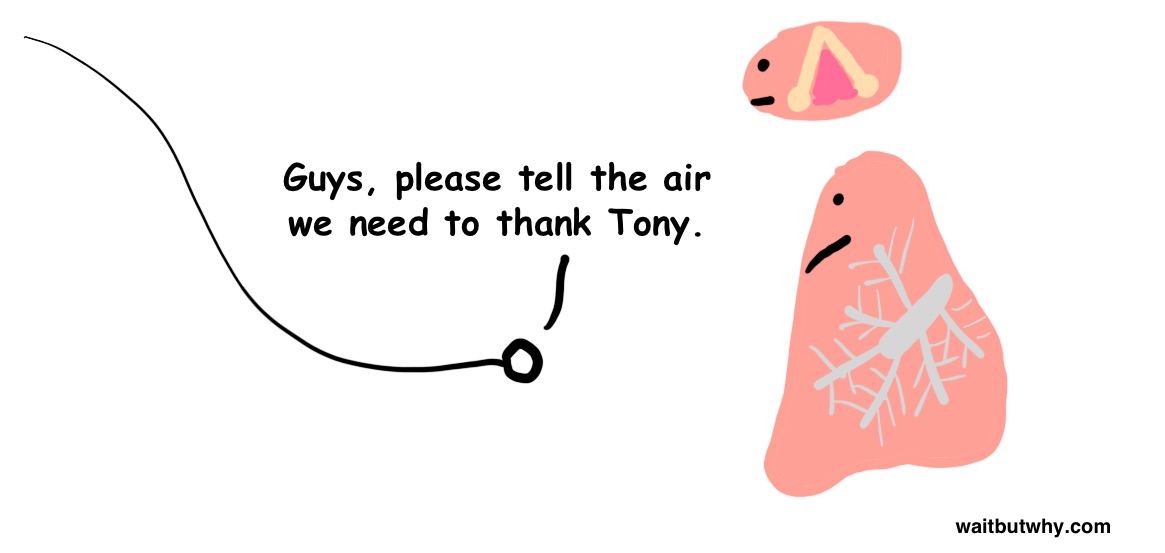

The fly touches your skin and stimulates a bunch of sensory nerves. The axon terminals in the nerves have a little fit and start action potential-ing, sending the signal up to the brain to tell on the fly. The signals head into the spinal cord and up to the somas in the somatosensory cortex.18 The somatosensory cortex then taps the motor cortex on the shoulder and tells it that there’s a fly on your arm and that it needs to deal with it (lazy). The particular somas in your motor cortex that connect to the muscles in your arm then start action potential-ing, sending the signals back into the spinal cord and then out to the muscles of the arm. The axon terminals at the end of those neurons stimulate your arm muscles, which contract to shake your arm to get the fly off (by now the fly has already thrown up on your arm), and the fly (whose nervous system now goes through its own whole thing) flies off.

Then your amygdala looks over and realizes there was a bug on you, and it tells your motor cortex to jump embarrassingly, and if it’s a spider instead of a fly, it also tells your vocal cords to yell out involuntarily and ruin your reputation.

So it seems so far like we do kind of actually understand the brain, right? But then why did that professor ask that question—If everything you need to know about the brain is a mile, how far have we walked in this mile?—and say the answer was three inches?

Well here’s the thing.

You know how we totally get how an individual computer sends an email and we totally understand the broad concepts of the internet, like how many people are on it and what the biggest sites are and what the major trends are—but all the stuff in the middle—the inner workings of the internet—is pretty confusing?

And you know how economists can tell you all about how an individual consumer functions and they can also tell you about the major concepts of macroeconomics and the overarching forces at play—but no one can really tell you all the ins and outs of how the economy works or predict what will happen with the economy next month or next year?

The brain is kind of like those things. We get the little picture—we know all about how a neuron fires. And we get the big picture—we know how many neurons are in the brain and what the major lobes and structures control and how much energy the whole system uses. But the stuff in between—all that middle stuff about how each part of the brain actually does its thing?

Yeah we don’t get that.

What really makes it clear how confounded we are is hearing a neuroscientist talk about the parts of the brain we understand best.

Like the visual cortex. We understand the visual cortex pretty well because it’s easy to map.

Research scientist Paul Merolla described it to me:

The visual cortex has very nice anatomical function and structure. When you look at it, you literally see a map of the world. So when something in your visual field is in a certain region of space, you’ll see a little patch in the cortex that represents that region of space, and it’ll light up. And as that thing moves over, there’s a topographic mapping where the neighboring cells will represent that. It’s almost like having Cartesian coordinates of the real world that will map to polar coordinates in the visual cortex. And you can literally trace from your retina, through your thalamus, to your visual cortex, and you’ll see an actual mapping from this point in space to this point in the visual cortex.

So far so good. But then he went on:

So that mapping is really useful if you want to interact with certain parts of the visual cortex, but there’s many regions of vision, and as you get deeper into the visual cortex, it becomes a little bit more nebulous, and this topographic representation starts to break down. … There’s all these levels of things going on in the brain, and visual perception is a great example of that. We look at the world, and there’s just this physical 3D world out there—like you look at a cup, and you just see a cup—but what your eyes are seeing is really just a bunch of pixels. And when you look in the visual cortex, you see that there are roughly 20-40 different maps. V1 is the first area, where it’s tracking little edges and colors and things like that. And there’s other areas looking at more complicated objects, and there’s all these different visual representations on the surface of your brain, that you can see. And somehow all of that information is being bound together in this information stream that’s being coded in a way that makes you believe you’re just seeing a simple object.

And the motor cortex, another one of the best-understood areas of the brain, might be even more difficult to understand on a granular level than the visual cortex. Because even though we know which general areas of the motor cortex map to which areas of the body, the individual neurons in these motor cortex areas aren’t topographically set up, and the specific way they work together to create movement in the body is anything but clear. Here’s Paul again:

The neural chatter in everyone’s arm movement part of the brain is a little bit different—it’s not like the neurons speak English and say “move”—it’s a pattern of electrical activity, and in everyone it’s a little bit different. … And you want to be able to seamlessly understand that it means “Move the arm this way” or “move the arm toward the target” or “move the arm to the left, move it up, grasp, grasp with a certain kind of force, reach with a certain speed,” and so on. We don’t think about these things when we move—it just happens seamlessly. So each brain has a unique code with which it talks to the muscles in the arm and hand.

The neuroplasticity that makes our brains so useful to us also makes them incredibly difficult to understand—because the way each of our brains works is based on how that brain has shaped itself, based on its particular environment and life experience.

And again, those are the areas of the brain we understand the best. “When it comes to more sophisticated computation, like language, memory, mathematics,” one expert told me, “we really don’t understand how the brain works.” He lamented that, for example, the concept of one’s mother is coded in a different way, and in different parts of the brain, for every person. And in the frontal lobe—you know, that part of the brain where you really live—”there’s no topography at all.”

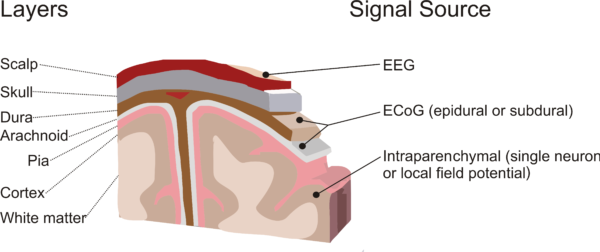

But somehow, none of this is why building effective brain-machine interfaces (BMIs) is so hard, or so daunting. What makes BMIs so hard is that the engineering challenges are monumental. It’s physically working with the brain that makes BMIs among the hardest engineering endeavors in the world.

So with our brain background tree trunk built, we’re ready to head up to our first branch.

Part 3: Brain-Machine Interfaces

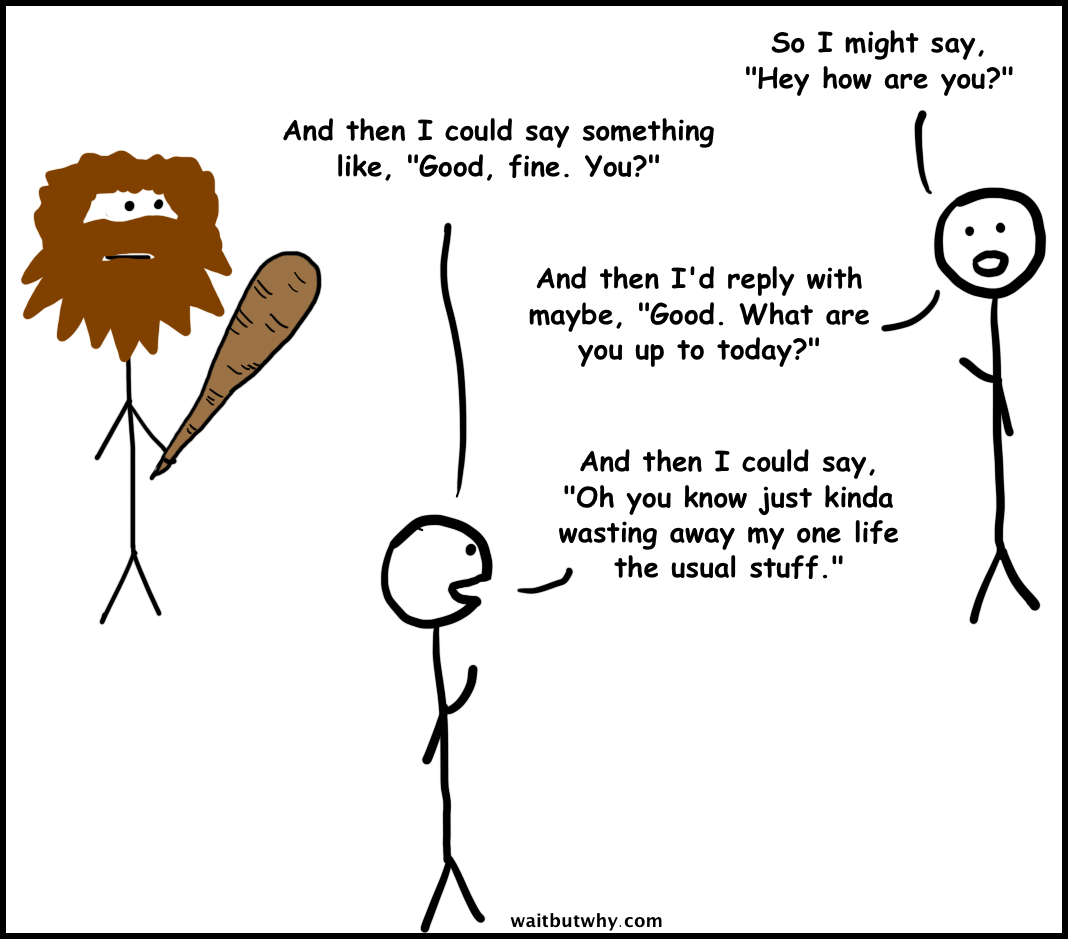

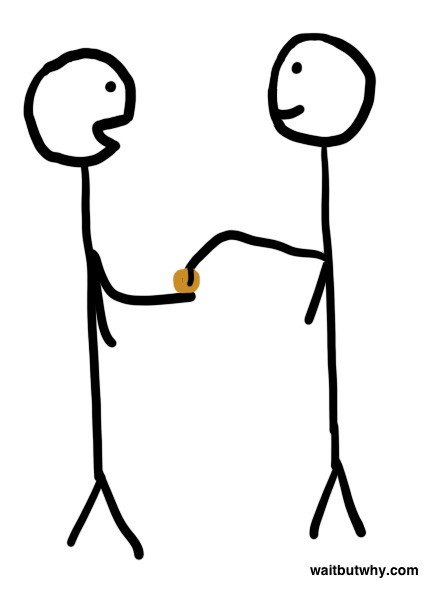

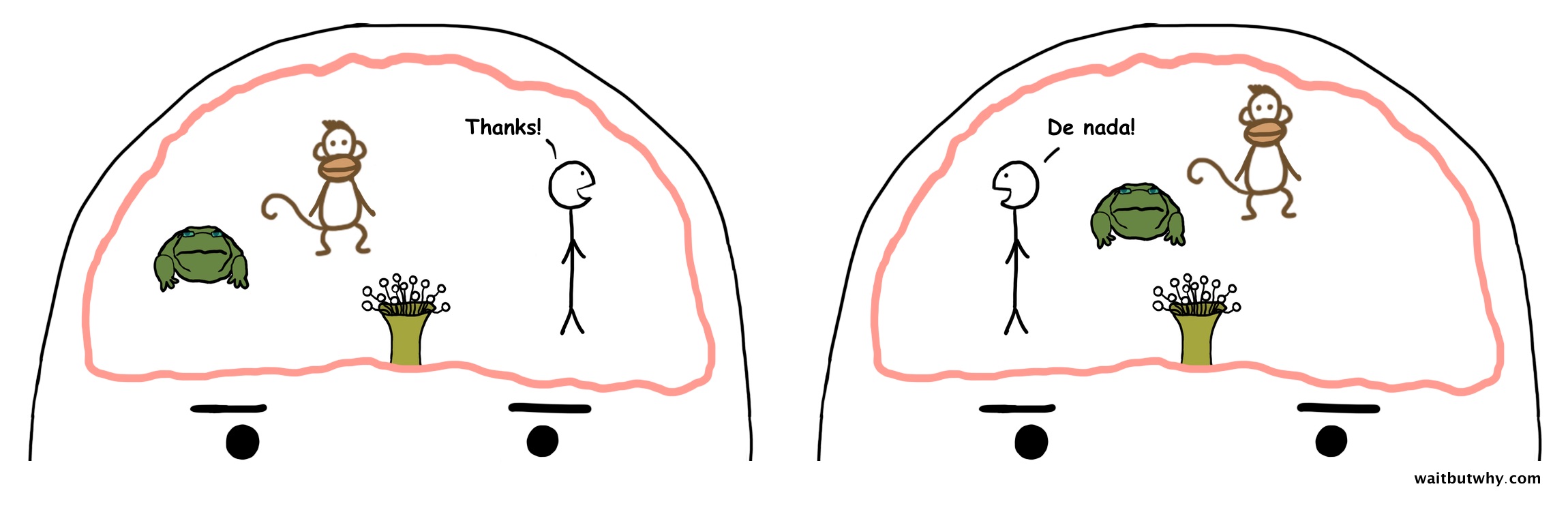

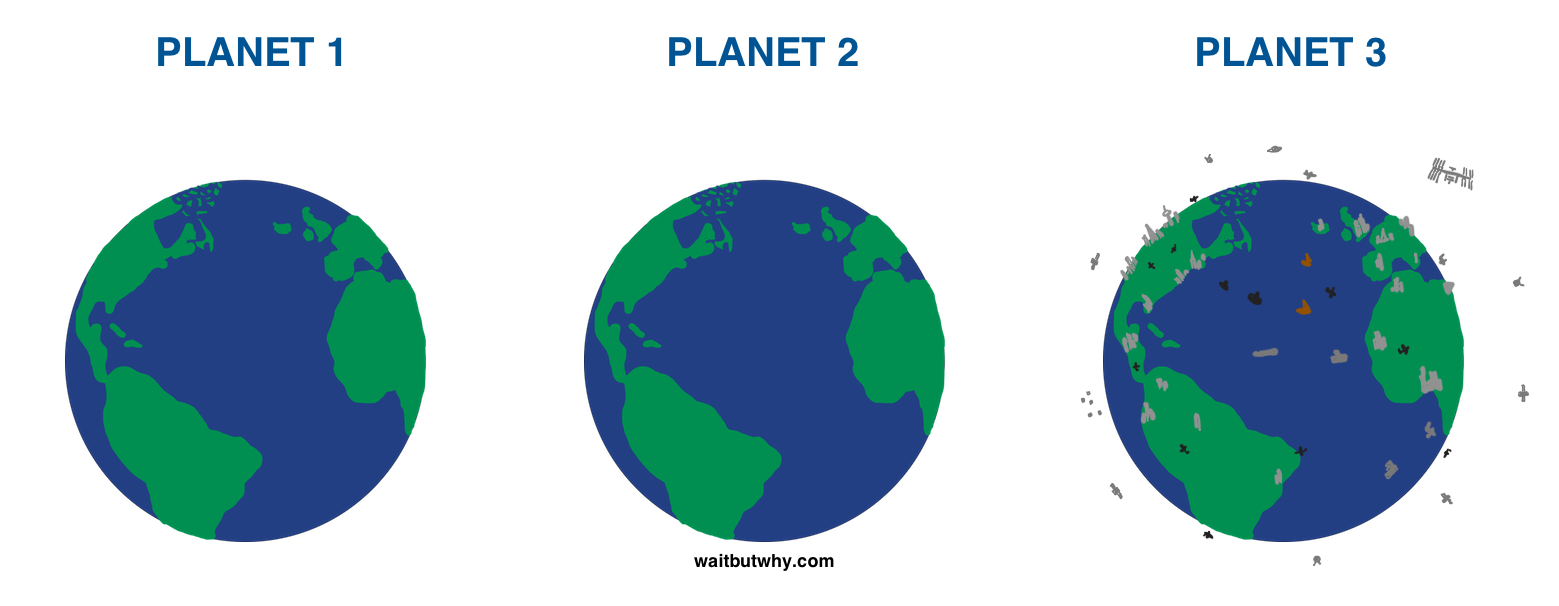

Let’s zip back in time for a second to 50,000 BC and kidnap someone and bring him back here to 2017.

This is Bok. Bok, we’re really thankful that you and your people invented language.

As a way to thank you, we want to show you all the amazing things we were able to build because of your invention.

Alright, first let’s take Bok on a plane, and into a submarine, and to the top of the Burj Khalifa. Now we’ll show him a telescope and a TV and an iPhone. And now we’ll let him play around on the internet for a while.

Okay that was fun. How’d it go, Bok?

Yeah we figured that you’d be pretty surprised. To wrap up, let’s show him how we communicate with each other.

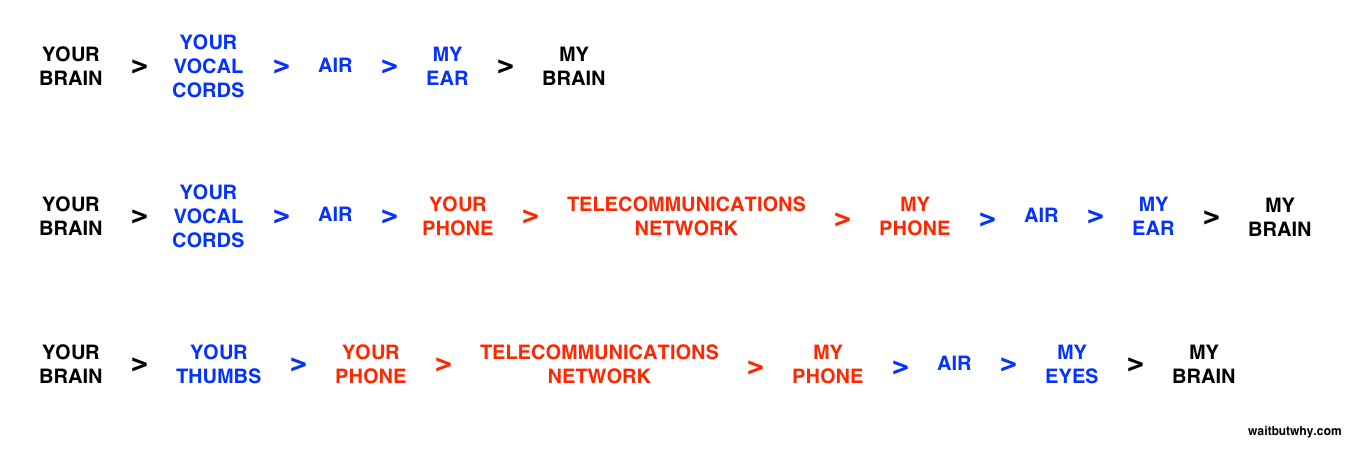

Bok would be shocked to learn that despite all the magical powers humans have gained as a result of having learned to speak to each other, when it comes to actually speaking to each other, we’re no more magical than the people of his day. When two people are together and talking, they’re using 50,000-year-old technology.

Bok might also be surprised that in a world run by fancy machines, the people who made all the machines are walking around with the same biological bodies that Bok and his friends walk around with. How can that be?

This is why brain-machine interfaces—a subset of the broader field of neural engineering, which itself is a subset of biotechnology—are such a tantalizing new industry. We’ve conquered the world many times over with our technology, but when it comes to our brains—our most central tool—the tech world has for the most part been too daunted to dive in.

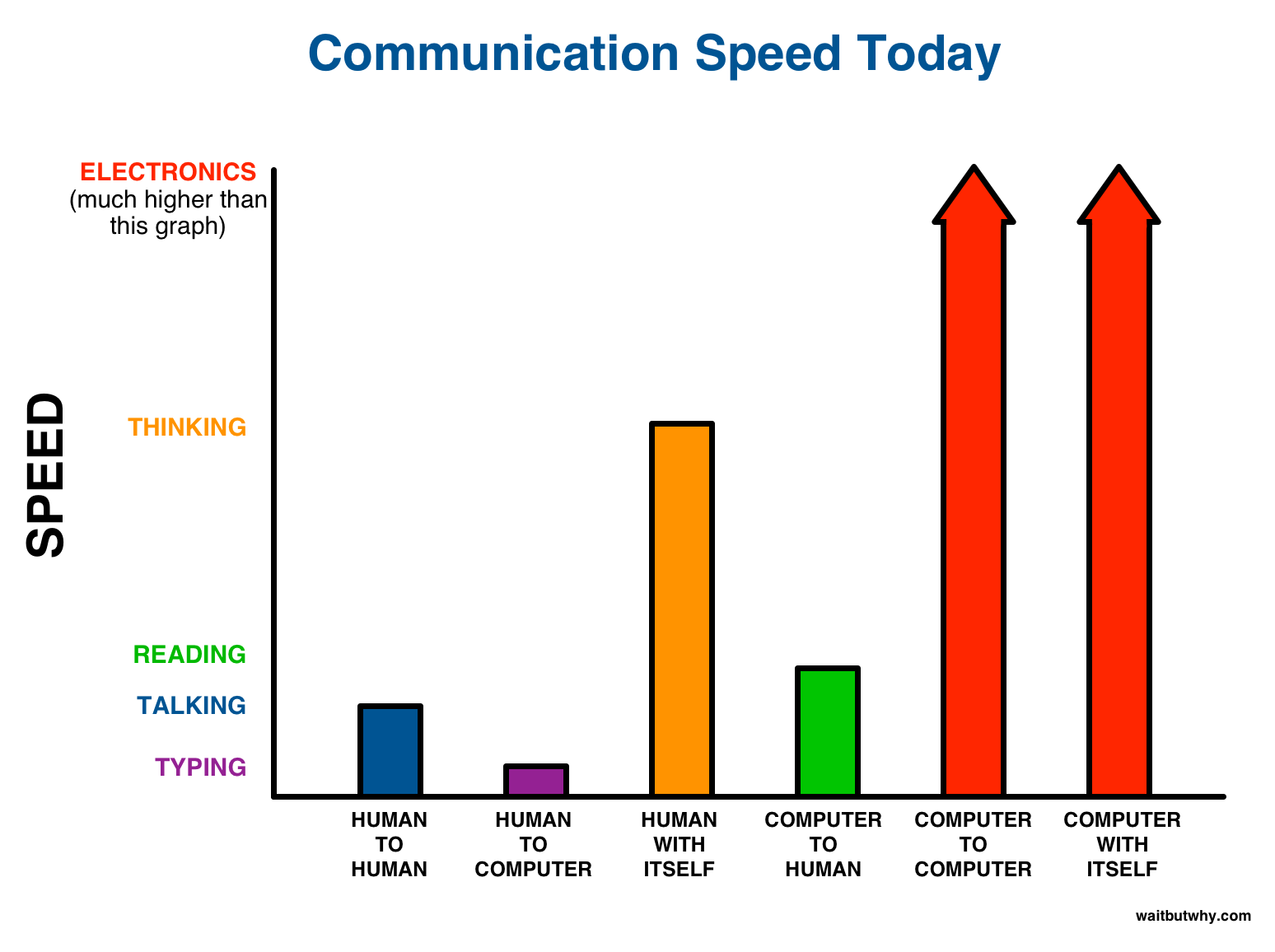

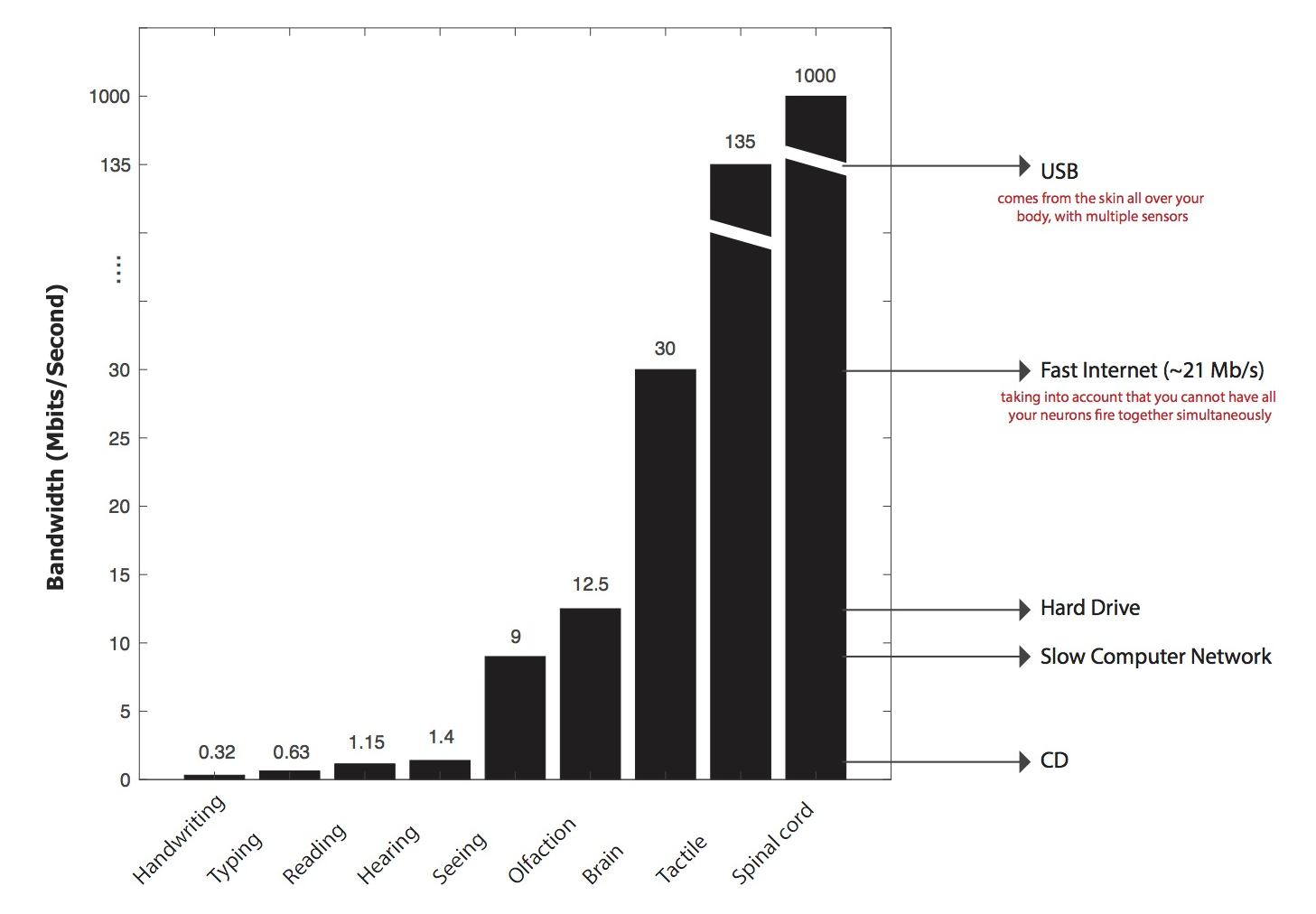

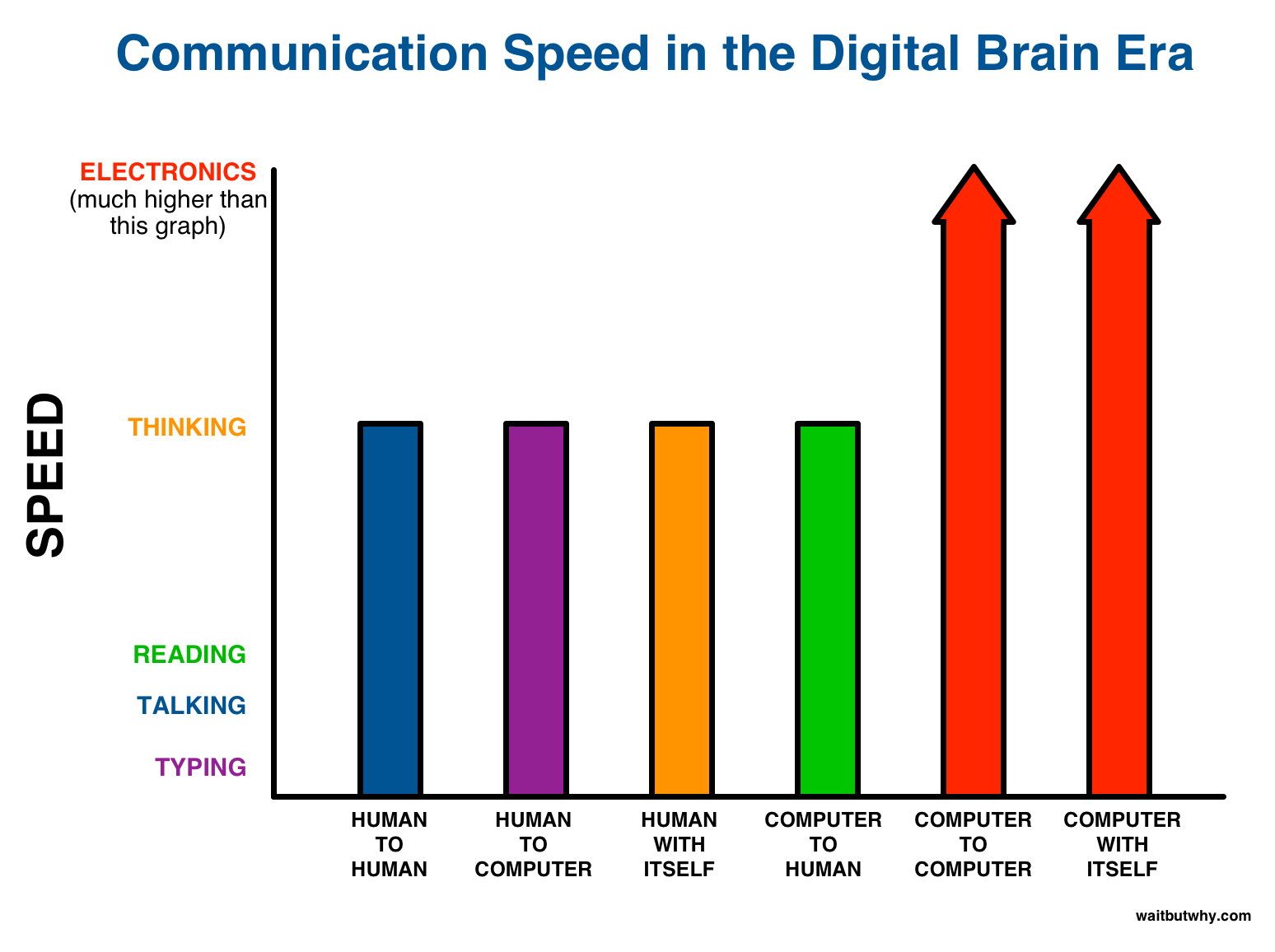

That’s why we still communicate using technology Bok invented, it’s why I’m typing this sentence at about a 20th of the speed that I’m thinking it, and it’s why brain-related ailments still leave so many lives badly impaired or lost altogether.

But 50,000 years after the brain’s great “aha!” moment, that may finally be about to change. The brain’s next great frontier may be itself.

___________

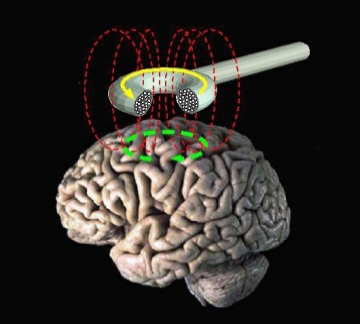

There are many kinds of potential brain-machine interface (sometimes called a brain-computer interface) that will serve many different functions. But everyone working on BMIs is grappling with either one or both of these two questions:

1) How do I get the right information out of the brain?

2) How do I send the right information into the brain?

The first is about capturing the brain’s output—it’s about recording what neurons are saying.

The second is about inputting information into the brain’s natural flow or altering that natural flow in some other way—it’s about stimulating neurons.

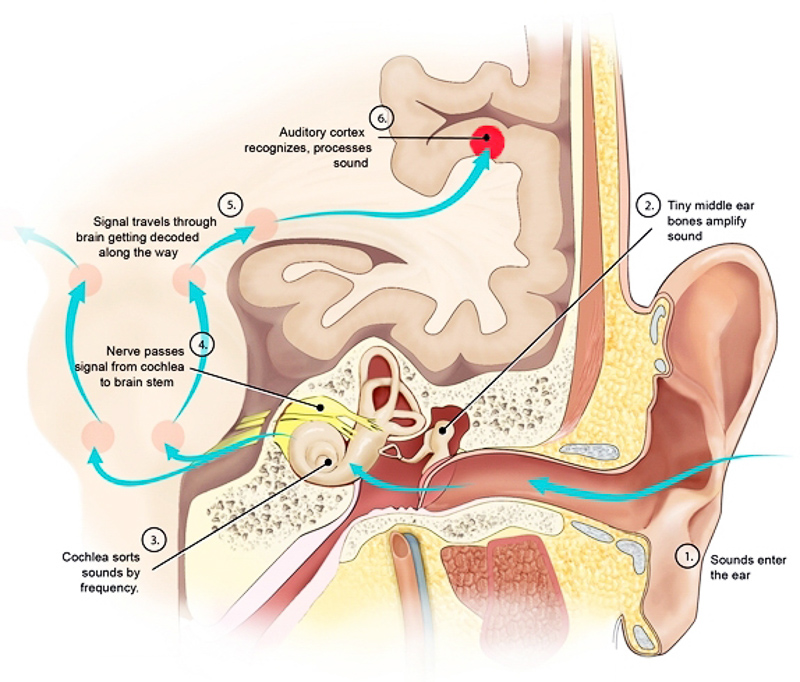

These two things are happening naturally in your brain all the time. Right now, your eyes are making a specific set of horizontal movements that allow you to read this sentence. That’s the brain’s neurons outputting information to a machine (your eyes) and the machine receiving the command and responding. And as your eyes move in just the right way, the photons from the screen are entering your retinas and stimulating neurons in the occipital lobe of your cortex in a way that allows the image of the words to enter your mind’s eye. That image then stimulates neurons in another part of your brain that allows you to process the information embedded in the image and absorb the sentence’s meaning.

Inputting and outputting information is what the brain’s neurons do. All the BMI industry wants to do is get in on the action.

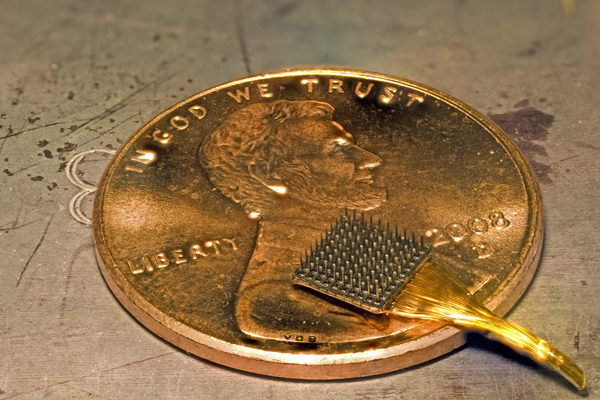

At first, this seems like maybe not that difficult a task? The brain is just a jello ball, right? And the cortex—the part of the brain in which we want to do most of our recording and stimulating—is just a napkin, located conveniently right on the outside of the brain where it can be easily accessed. Inside the cortex are around 20 billion firing neurons—20 billion oozy little transistors that if we can just learn to work with, will give us an entirely new level of control over our life, our health, and the world. Can’t we figure that out? Neurons are small, but we know how to split an atom. A neuron’s diameter is about 100,000 times as large as an atom’s—if an atom were a marble, a neuron would be a kilometer across—so we should probably be able to handle the smallness. Right?
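For anyone who wants to sanity-check the marble comparison, here is a minimal sketch. The specific sizes are assumptions chosen for illustration (an atom at roughly 0.1 nanometers across, a neuron's cell body at roughly 10 microns, a marble at 1cm), not measurements taken from this post.

```python
# Rough sizes, expressed in picometers so the arithmetic stays in integers.
# These are illustrative assumptions, not figures taken from the text.
ATOM_DIAMETER_PM = 100            # ~0.1 nanometers
NEURON_DIAMETER_PM = 10_000_000   # ~10 microns (a typical cell body)

# How many atom-widths fit across one neuron?
ratio = NEURON_DIAMETER_PM // ATOM_DIAMETER_PM

# Blow the atom up to marble size and see how wide the neuron becomes.
MARBLE_CM = 1
CM_PER_KM = 100_000
scaled_neuron_km = ratio * MARBLE_CM / CM_PER_KM

print(f"neuron / atom diameter: {ratio:,}x")
print(f"marble-sized atom -> neuron about {scaled_neuron_km:g} km across")
```

Under those assumed sizes, the ratio and the kilometer-wide neuron both line up with the comparison above.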

So what’s the issue here?

Well on one hand, there’s something to that line of thinking, in that because of those facts, this is an industry where immense progress can happen. We can do this.

But only when you understand what actually goes on in the brain do you realize why this is probably the hardest human endeavor in the world.

So before we talk about BMIs themselves, we need to take a closer look at what the people trying to make BMIs are dealing with here. I find that the best way to illustrate things is to scale the brain up by exactly 1,000X and look at what’s going on.

Remember our cortex-is-a-napkin demonstration earlier?

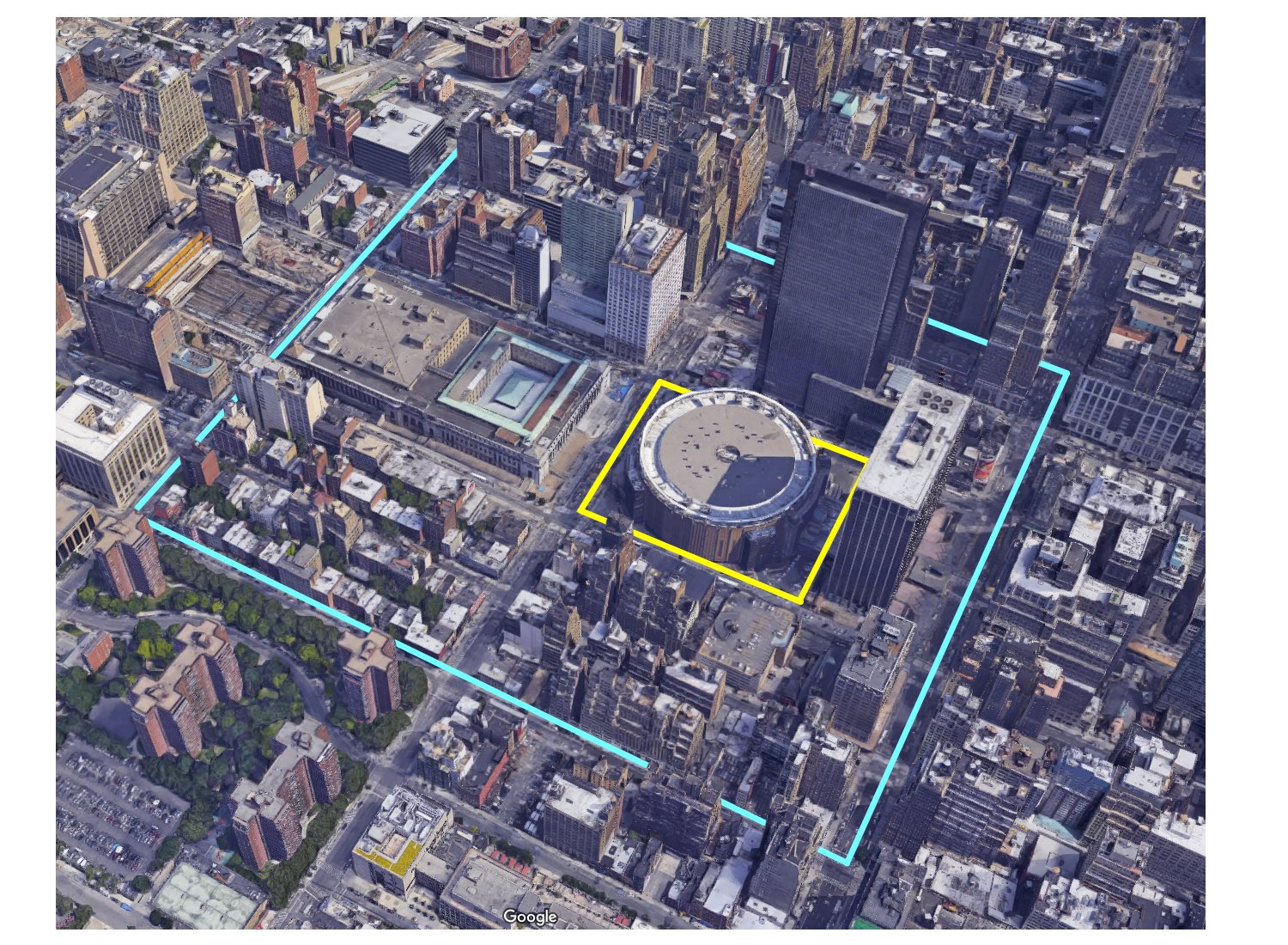

Well if we scale that up by 1,000X, the cortex napkin—which was about 48cm / 19in on each side—now has a side the length of six Manhattan street blocks (or two avenue blocks). It would take you about 25 minutes to walk around the perimeter. And the brain as a whole would now fit snugly inside a two block by two block square—just about the size of Madison Square Garden (this works in length and width, but the brain would be about double the height of MSG).

So let’s lay it out in the actual city. I’m sure the few hundred thousand people who live there will understand.

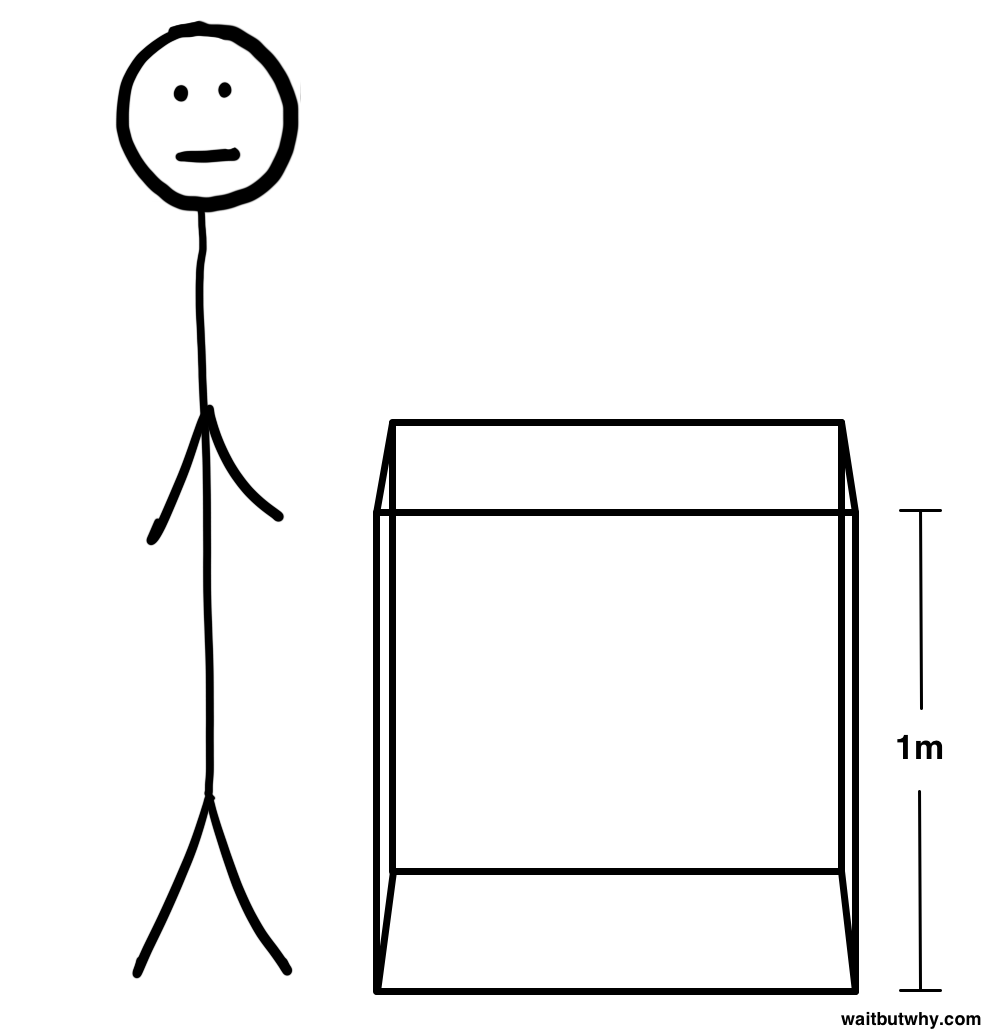

I chose 1,000X as our multiplier for a couple reasons. One is that we can all instantly convert the sizes in our heads. Every millimeter of the actual brain is now a meter. And in the much smaller world of neurons, every micron is now an easy-to-conceptualize millimeter. Secondly, it conveniently brings the cortex up to human size—its 2mm thickness is now two meters—the height of a tall (6’6”) man.
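The conversions are simple enough to do in your head, but here is a small sketch of the 1,000X game for anyone who wants to play along. The function names and the walking pace (80 meters per minute, a bit under 5 km/h) are assumptions made up for this example.

```python
SCALE = 1_000  # the multiplier used in this section

def scale_up_mm_to_m(real_mm):
    """A real length in millimeters becomes the same number of meters at 1,000X."""
    return real_mm * SCALE / 1_000  # scaled length in mm, converted to m

def scale_up_um_to_mm(real_um):
    """A real length in microns becomes the same number of millimeters at 1,000X."""
    return real_um * SCALE / 1_000  # scaled length in microns, converted to mm

napkin_side_m = scale_up_mm_to_m(480)      # the 48cm napkin side
cortex_thickness_m = scale_up_mm_to_m(2)   # the 2mm cortex thickness
perimeter_m = 4 * napkin_side_m

WALKING_PACE_M_PER_MIN = 80  # assumption: an ordinary walking pace
walk_minutes = perimeter_m / WALKING_PACE_M_PER_MIN

print(f"napkin side: {napkin_side_m:g} m, perimeter: {perimeter_m:g} m")
print(f"walk around it: about {walk_minutes:g} minutes")
print(f"cortex thickness: {cortex_thickness_m:g} m")
```

With that assumed pace, the lap around the napkin comes out to roughly the 25 minutes mentioned earlier.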

So we could walk up to 29th street, to the edge of our giant cortex napkin, and easily look at what was going on inside those two meters of thickness. For our demonstration, let’s pull out a cubic meter of our giant cortex to examine, which will show us what goes on in a typical cubic millimeter of real cortex.

What we’d see in that cubic meter would be a mess. Let’s empty it out and put it back together.

First, let’s put the somas19 in—the little bodies of all the neurons that live in that cube.

Somas range in size, but the neuroscientists I spoke with said that the somas of neurons in the cortex are often around 10 or 15µm in diameter (µm = micrometer, or micron: 1/1,000th of a millimeter). That means that if you laid out 7 or 10 of them in a line, that line would be about the diameter of a human hair (which is about 100µm). On our scale, that makes a soma 1 – 1.5cm in diameter. A marble.

The volume of the whole cortex is in the ballpark of 500,000 cubic millimeters, and in that space are about 20 billion somas. That means an average cubic millimeter of cortex contains about 40,000 neurons. So there are 40,000 marbles in our cubic meter box. If we divide our box into about 40,000 cubic spaces, each with a side of about 3cm (a bit over an inch), it means each of our soma marbles is at the center of its own little 3cm cube, with other somas about 3cm away from it in all directions.
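Here is a quick sketch of that arithmetic, using only the two ballpark figures from the paragraph above (cortex volume and soma count). The variable names are made up for the example, and the even spacing is a simplification: real somas are not arranged on a tidy grid.

```python
CORTEX_VOLUME_MM3 = 500_000        # ballpark cortex volume
CORTEX_NEURONS = 20_000_000_000    # ballpark soma count

# Average somas in one real cubic millimeter (= one scaled-up cubic meter).
neurons_per_mm3 = CORTEX_NEURONS // CORTEX_VOLUME_MM3

# Split the scaled-up cubic meter evenly among those somas.
CM3_PER_M3 = 100 ** 3
volume_per_soma_cm3 = CM3_PER_M3 / neurons_per_mm3

# Side length of each soma's private little cube.
spacing_cm = volume_per_soma_cm3 ** (1 / 3)

print(f"somas per cube: {neurons_per_mm3:,}")
print(f"space per soma: {volume_per_soma_cm3:g} cubic cm")
print(f"spacing between somas: about {spacing_cm:.1f} cm")
```

The spacing lands just under 3cm, which is where the rounded figure comes from.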

With me so far? Can you visualize our meter cube with those 40,000 floating marbles in it?

Here’s a microscope image of the somas in an actual cortex, using techniques that block out the other stuff around them:27

Okay not too crazy so far. But the soma is only a tiny piece of each neuron. Radiating out from each of our marble-sized somas are twisty, branchy dendrites that in our scaled-up brain can stretch out for three or four meters in many different directions, and from the other end an axon that can be over 100 meters long (when heading out laterally to another part of the cortex) or as long as a kilometer (when heading down into the spinal cord and body). Each of them only about a millimeter thick, these cords turn the cortex into a dense tangle of electrical spaghetti.

And there’s a lot going on in that mash of spaghetti. Each neuron has synaptic connections to as many as 1,000—sometimes as high as 10,000—other neurons. With around 20 billion neurons in the cortex, that means there are over 20 trillion individual neural connections in the cortex (and as high as a quadrillion connections in the entire brain). In our cubic meter alone, there will be over 20 million synapses.
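A rough sketch of where numbers like these come from. It assumes the simplest possible estimate, neurons multiplied by connections per neuron, with no attempt to account for two neurons sharing a connection. The function name is hypothetical.

```python
CORTEX_NEURONS = 20_000_000_000  # from the text
NEURONS_PER_CUBE = 40_000        # somas in our scaled-up cubic meter

def synapse_estimate(neurons, connections_per_neuron):
    """Naive estimate: every neuron contributes its full connection count."""
    return neurons * connections_per_neuron

# The text gives 1,000 as a typical figure and 10,000 as the high end.
cortex_typical = synapse_estimate(CORTEX_NEURONS, 1_000)
cube_typical = synapse_estimate(NEURONS_PER_CUBE, 1_000)
cube_high = synapse_estimate(NEURONS_PER_CUBE, 10_000)

print(f"cortex, 1,000 per neuron: {cortex_typical:,}")
print(f"one cube, 1,000 per neuron: {cube_typical:,}")
print(f"one cube, 10,000 per neuron: {cube_high:,}")
```

At 1,000 connections per neuron, the naive estimate for a single cube is in the tens of millions, comfortably above the 20 million floor mentioned above.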

To further complicate things, not only are there many spaghetti strands coming out of each of the 40,000 marbles in our cube, but there are thousands of other spaghetti strings passing through our cube from other parts of the cortex. That means that if we were trying to record signals or stimulate neurons in this particular cubic area, we’d have a lot of difficulty, because in the mess of spaghetti, it would be very hard to figure out which spaghetti strings belonged to our soma marbles (and god forbid there are Purkinje cells in the mix).

And of course, there’s the whole neuroplasticity thing. The voltage of each neuron would be constantly changing, as many as hundreds of times per second. And the tens of millions of synapse connections in our cube would be regularly changing sizes, disappearing, and reappearing.

If only that were the end of it.

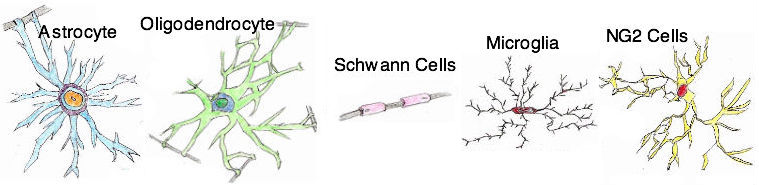

It turns out there are other cells in the brain called glial cells—cells that come in many different varieties and perform many different functions, like mopping up chemicals released into synapses, wrapping axons in myelin, and serving as the brain’s immune system. Here are some common types of glial cell:28

And how many glial cells are in the cortex? About the same number as there are neurons.20 So add about 40,000 of these wacky things into our cube.

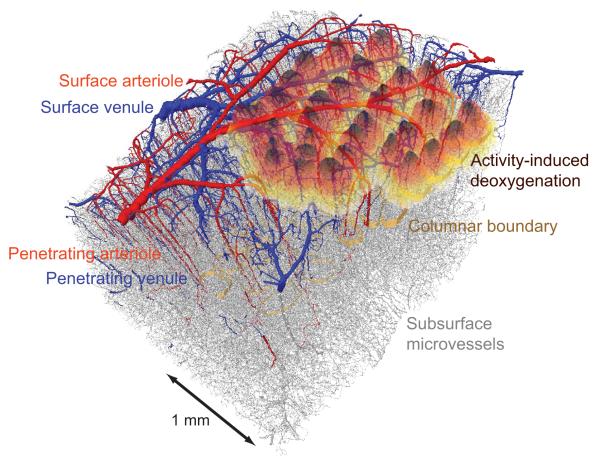

Finally, there are the blood vessels. In every cubic millimeter of cortex, there’s a total of a meter of tiny blood vessels. On our scale, that means that in our cubic meter, there’s a kilometer of blood vessels. Here’s what the blood vessels in a space about that size look like:29

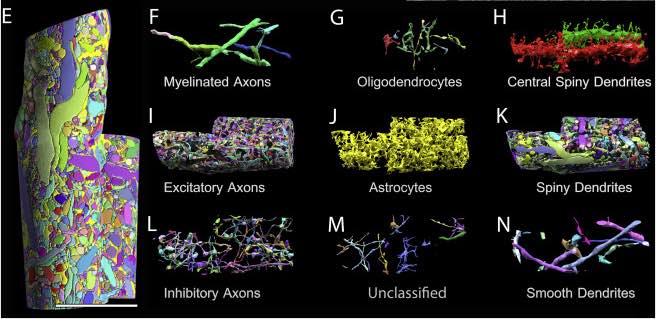

The Connectome Blue Box

There’s an amazing project going on right now in the neuroscience world called the Human Connectome Project (pronounced “connec-tome”) in which scientists are trying to create a complete detailed map of the entire human brain. Nothing close to this scale of brain mapping has ever been done.21

The project entails slicing a human brain into outrageously thin slices—around 30-nanometer-thick slices. That’s 1/33,000th of a millimeter (here’s a machine slicing up a mouse brain).

Anyway, in addition to producing some gorgeous images of the “ribbon” formations axons with similar functions often form inside white matter, like—

—the connectome project has helped people visualize just how packed the brain is with all this stuff. Here’s a breakdown of all the different things going on in one tiny snippet of mouse brain (and this doesn’t even include the blood vessels):30

(In the image, E is the complete brain snippet, and F–N show the separate components that make up E.)

So our meter box is a jam-packed, oozy, electrified mound of dense complexity—now let’s recall that in reality, everything in our box actually fits in a cubic millimeter.

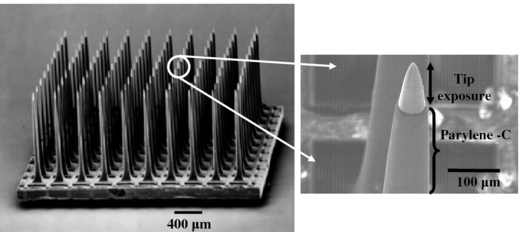

And the brain-machine interface engineers need to figure out what the microscopic somas buried in that millimeter are saying, and other times, to stimulate just the right somas to get them to do what the engineers want. Good luck with that.

We’d have a super hard time doing that on our 1,000X brain. Our 1,000X brain that also happens to be a nice flat napkin. That’s not how it normally works—usually, the napkin is up on top of our Madison Square Garden brain and full of deep folds (on our scale, between five and 30 meters deep). In fact, less than a third of the cortex napkin is up on the surface of the brain—most is buried inside the folds.

Also, engineers are not operating on a bunch of brains in a lab. The brain is covered with all those Russian doll layers, including the skull—which at 1,000X would be around seven meters thick. And since most people don’t really want you opening up their skull for very long—and ideally not at all—you have to try to work with those tiny marbles as non-invasively as possible.

And this is all assuming you’re dealing with the cortex—but a lot of cool BMI ideas deal with the structures down below, which if you’re standing on top of our MSG brain, are buried 50 or 100 meters under the surface.

The 1,000X game also hammers home the sheer scope of the brain. Think about how much was going on in our cube—and now remember that that’s only one 500,000th of the cortex. If we broke our whole giant cortex into similar meter cubes and lined them up, they’d stretch 500km / 310mi—all the way to Boston and beyond. And if you made the trek—which would take over 100 hours of brisk walking—at any point you could pause and look at the cube you happened to be passing by and it would have all of this complexity inside of it. All of this is currently in your brain.
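The trek is easy to check with a few lines. The walking speed of 5 km/h is an assumption for a brisk pace, not a figure from this post.

```python
CUBE_COUNT = 500_000   # one-meter cubes in the whole scaled-up cortex
CUBE_SIDE_M = 1

line_km = CUBE_COUNT * CUBE_SIDE_M / 1_000

KM_PER_MILE = 1.609344
line_miles = line_km / KM_PER_MILE

BRISK_WALK_KMH = 5     # assumption: a brisk walking speed
walk_hours = line_km / BRISK_WALK_KMH

print(f"line of cubes: {line_km:g} km (about {line_miles:.0f} miles)")
print(f"non-stop walking time: about {walk_hours:g} hours")
```

At that assumed speed the walk takes about 100 hours without a single break, so any real attempt would run well past that.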

Part 3A: How Happy Are You That This Isn’t Your Problem

Totes.

Back to Part 3: Brain-Machine Interfaces

So how do scientists and engineers begin to manage this situation?

Well they do the best they can with the tools they currently have—tools used to record or stimulate neurons (we’ll focus on the recording side for the time being). Let’s take a look at the options:

BMI Tools

With the current work that’s being done, three broad criteria seem to stand out when evaluating a type of recording tool’s pros and cons:

1) Scale – how many neurons can be simultaneously recorded

2) Resolution – how detailed is the information the tool receives—there are two types of resolution, spatial (how closely your recordings come to telling you how individual neurons are firing) and temporal (how well you can determine when the activity you record happened)

3) Invasiveness – is surgery needed, and if so, how extensively

The long-term goal is to have all three of your cakes and eat them all. But for now, it’s always a question of “which one (or two) of these criteria are you willing to completely fail?” Going from one tool to another isn’t an overall upgrade or downgrade—it’s a tradeoff.

Let’s examine the types of tools currently being used:

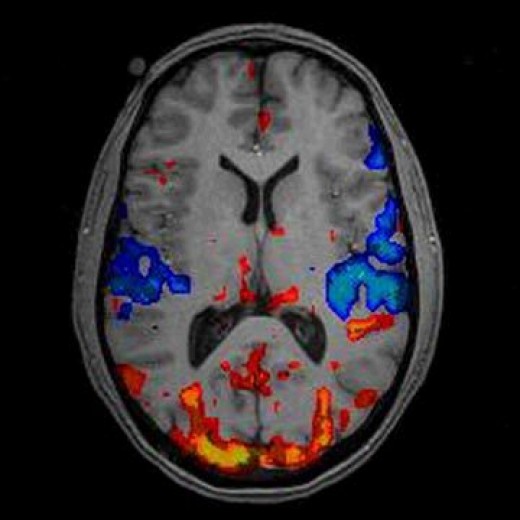

fMRI

Scale: high (it shows you information across the whole brain)

Resolution: medium-low spatial, very low temporal

Invasiveness: non-invasive

fMRI isn’t typically used for BMIs, but it is a classic recording tool—it gives you information about what’s going on inside the brain.

fMRI uses MRI—magnetic resonance imaging—technology. MRIs, invented in the 1970s, were an evolution of the x-ray-based CAT scan. Instead of using x-rays, MRIs use magnetic fields (along with radio waves and other signals) to generate images of the body and brain. Like this:31

And this full set of cross sections, allowing you to see through an entire head.

Pretty amazing technology.

fMRI (“functional” MRI) uses similar technology to track changes in blood flow. Why? Because when areas of the brain become more active, they use more energy, so they need more oxygen—so blood flow increases to the area to deliver that oxygen. Blood flow indirectly indicates where activity is happening. Here’s what an fMRI scan might show:32

Of course, there’s always blood throughout the brain—what this image shows is where blood flow has increased (red/orange/yellow) and where it has decreased (blue). And because fMRI can scan through the whole brain, results are 3-dimensional:

fMRI has many medical uses, like informing doctors whether or not certain parts of the brain are functioning properly after a stroke, and fMRI has taught neuroscientists a ton about which regions of the brain are involved with which functions. Scans also have the benefit of providing info about what’s going on in the whole brain at any given time, and they’re safe and totally non-invasive.

The big drawback is resolution. fMRI scans have a literal resolution, like a computer screen has with pixels, except the pixels are three-dimensional, cubic volume pixels—or “voxels.”

fMRI voxels have gotten smaller as the technology has improved, bringing the spatial resolution up. Today’s fMRI voxels can be as small as a cubic millimeter. The brain has a volume of about 1,200,000mm³, so a high-resolution fMRI scan divides the brain into about one million little cubes. The problem is that on neuron scale, that’s still pretty huge (the same size as our scaled-up cubic meter above): each voxel contains tens of thousands of neurons. So what the fMRI is showing you, at best, is the average blood flow drawn in by each group of 40,000 or so neurons.

The even bigger problem is temporal resolution. fMRI tracks blood flow, which is both imprecise and comes with a delay of about a second—an eternity in the world of neurons.
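To get a feel for the spatial side of that tradeoff, here is a small sketch of how voxel size changes the picture. The brain volume and the 40,000-neurons-per-cubic-millimeter density come from this post. The 2mm and 3mm voxel sizes are assumptions added for comparison, and applying the cortex density to every voxel is a simplification.

```python
BRAIN_VOLUME_MM3 = 1_200_000      # whole-brain volume from the text
CORTEX_NEURONS_PER_MM3 = 40_000   # cortex density worked out earlier

def voxel_stats(voxel_side_mm):
    """Return (voxels needed to tile the brain, neurons inside one cortical voxel)."""
    voxel_volume_mm3 = voxel_side_mm ** 3
    voxel_count = BRAIN_VOLUME_MM3 / voxel_volume_mm3
    neurons_per_voxel = CORTEX_NEURONS_PER_MM3 * voxel_volume_mm3
    return voxel_count, neurons_per_voxel

for side_mm in (1, 2, 3):
    count, neurons = voxel_stats(side_mm)
    print(f"{side_mm}mm voxels: {count:,.0f} voxels, "
          f"about {neurons:,} neurons averaged together in each")
```

Even at the 1mm best case, every voxel is one blurry average over tens of thousands of neurons, and each step up in voxel size makes the blur much worse.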

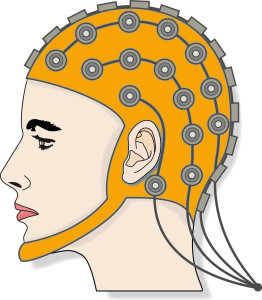

EEG

Scale: high

Resolution: very low spatial, medium-high temporal

Invasiveness: non-invasive

Dating back almost a century, EEG (electroencephalography) puts an array of electrodes on your head. You know, this whole thing:33

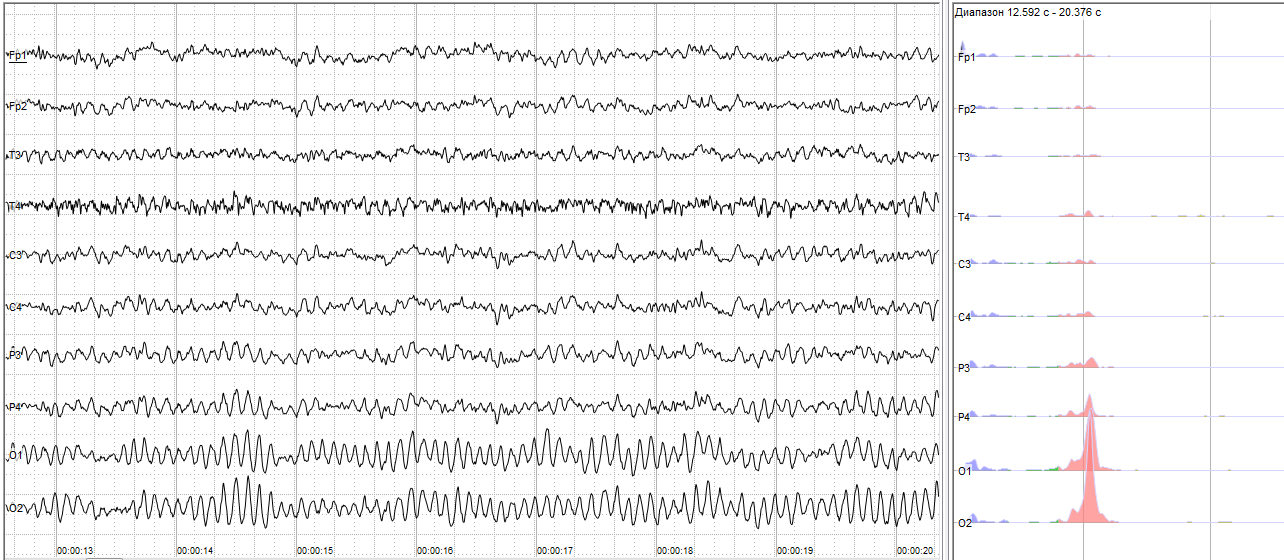

EEG is definitely technology that will look hilariously primitive to a 2050 person, but for now, it’s one of the only tools that can be used with BMIs that’s totally non-invasive. EEGs record electrical activity in different regions of the brain, displaying the findings like this:34

EEG graphs can uncover information about medical issues like epilepsy, track sleep patterns, or be used to determine something like the status of a dose of anesthesia.

And unlike fMRI, EEG has pretty good temporal resolution, getting electrical signals from the brain right as they happen—though the skull blurs the temporal accuracy considerably (bone is a bad conductor).

The major drawback is spatial resolution. EEG has none. Each electrode only records a broad average—a vector sum of the charges from millions or billions of neurons (and a blurred one because of the skull).

Imagine that the brain is a baseball stadium, its neurons are the members of the crowd, and the information we want is, instead of electrical activity, vocal cord activity. In that case, EEG would be like a group of microphones placed outside the stadium, against the stadium’s outer walls. You’d be able to hear when the crowd was cheering and maybe predict the type of thing they were cheering about. You’d be able to hear telltale signs that it was between innings and maybe whether or not it was a close game. You could probably detect when something abnormal happened. But that’s about it.
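The stadium idea can be boiled down to a toy sketch. This is not how EEG signal processing works. It assumes each neuron is simply "on" (1) or "off" (0) and that an electrode reports nothing but the total, which is a hypothetical model made up to show the information loss.

```python
def electrode_reading(neuron_activity):
    """One electrode, one number: the sum of everything underneath it."""
    return sum(neuron_activity)

# Three hypothetical activity patterns across the same eight neurons.
left_half_firing = [1, 1, 1, 1, 0, 0, 0, 0]
every_other_firing = [1, 0, 1, 0, 1, 0, 1, 0]
nearly_silent = [0, 0, 0, 1, 0, 0, 0, 0]

print(electrode_reading(left_half_firing))    # loud crowd
print(electrode_reading(every_other_firing))  # equally loud crowd
print(electrode_reading(nearly_silent))       # quiet crowd
```

The electrode can tell a loud crowd from a quiet one, but the two loud patterns produce the same reading even though completely different neurons are firing. That is the microphone-outside-the-stadium problem in miniature.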

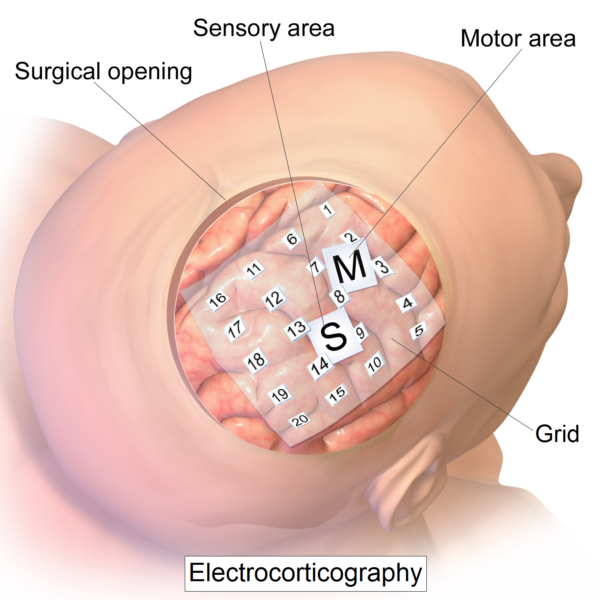

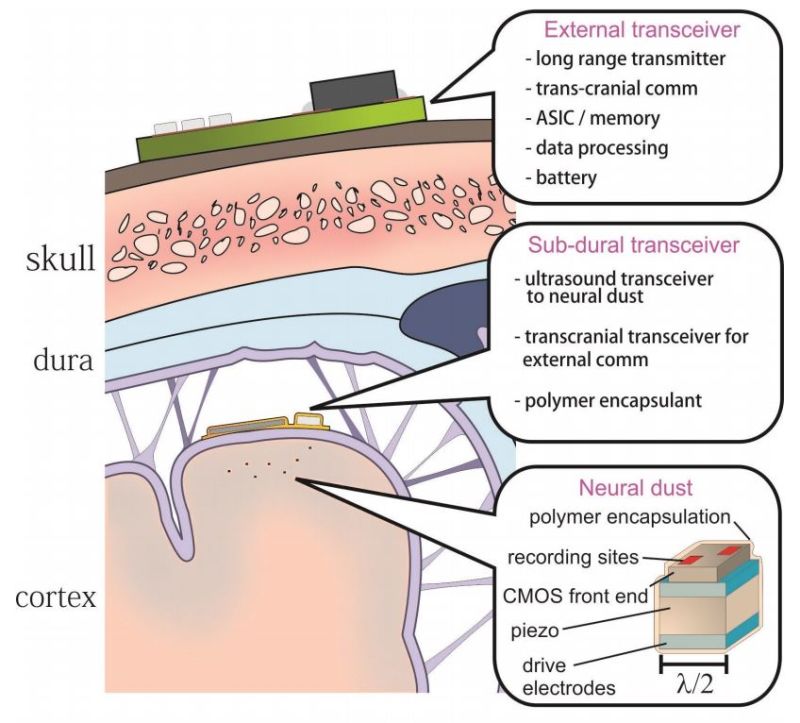

ECoG

Scale: high

Resolution: low spatial, high temporal

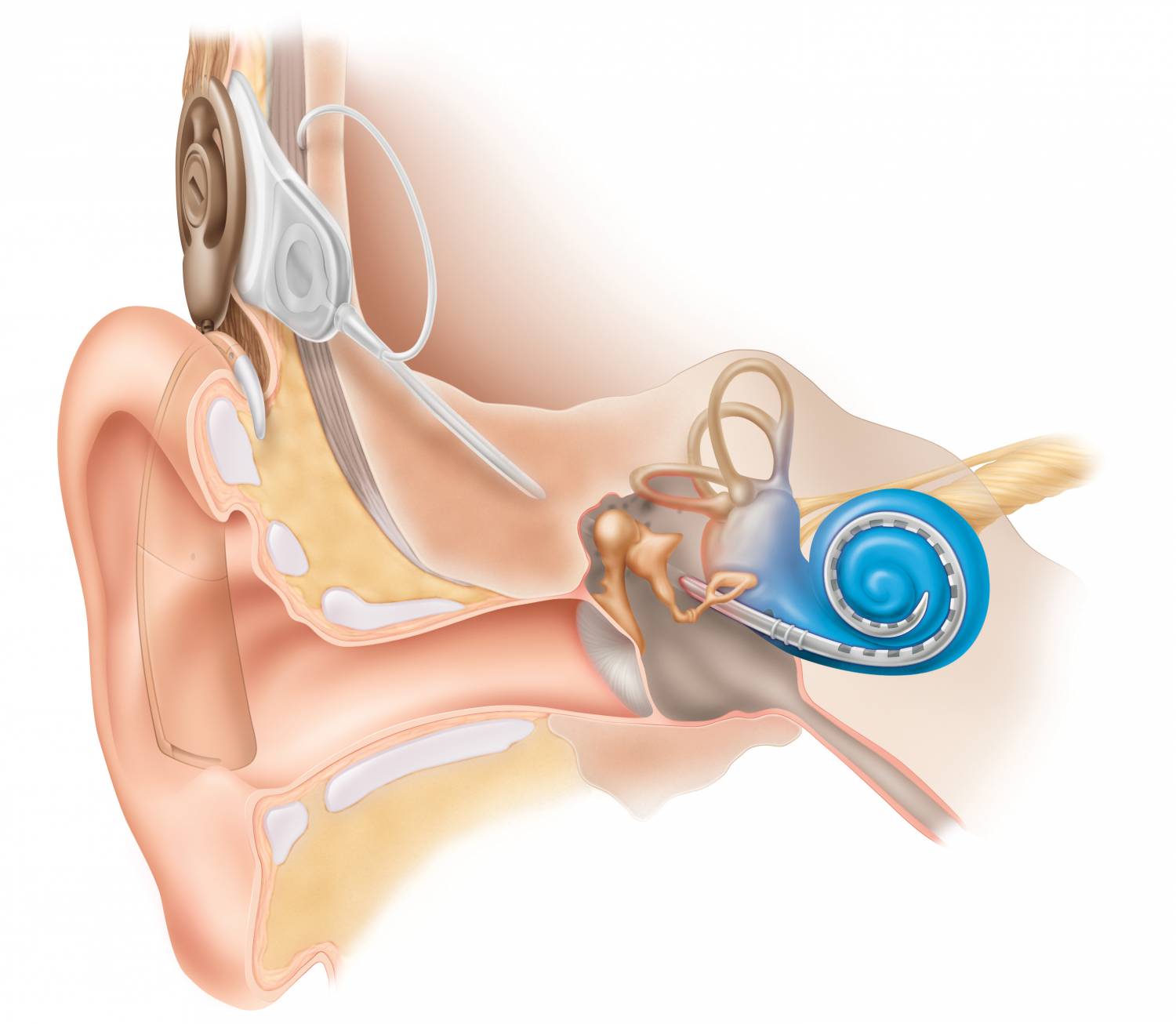

Invasiveness: kind of invasive